Microcredentials Can Make a Huge Difference in Higher Education — from newthinking.com by Shannon Riggs

The Ecampus executive director of academic programs and learning innovation at Oregon State University believes that shorter-form, low-cost courses can open up colleges to more people.

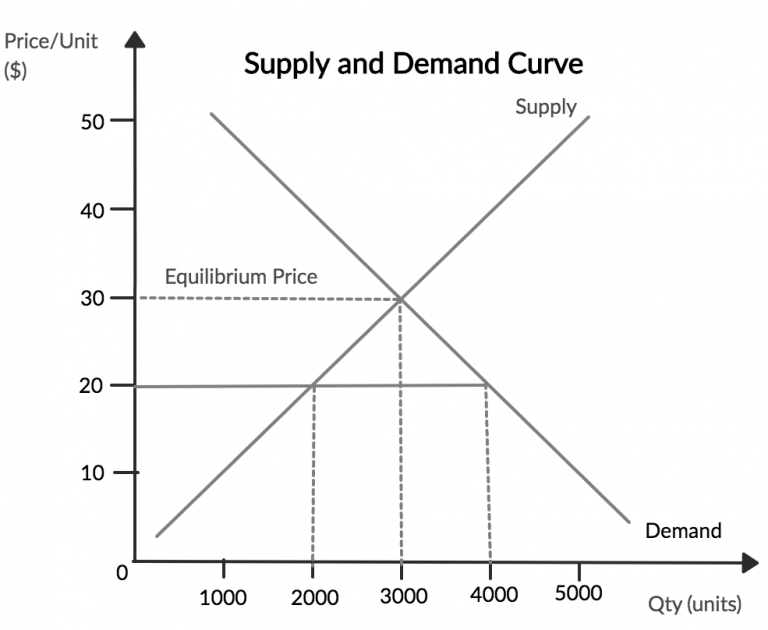

That so much student loan debt exists is a clear signal that higher education needs to innovate to reduce costs, increase access and improve students’ return on investment. Microcredentials are one way we can do this.

As the Supreme Court weighs Biden’s student loan forgiveness, education debt swells — from cnbc.com by Jessica Dickler

KEY POINTS

- As the Supreme Court weighs President Joe Biden’s student loan forgiveness plan, college tuition keeps climbing.

- This year’s incoming freshman class can expect to borrow as much as $37,000 to help cover the cost of a bachelor’s degree, according to a recent report.

College is only getting more expensive. Tuition and fees plus room and board at four-year, in-state public colleges rose more than 2% to $23,250, on average, in the 2022-23 academic year; at four-year private colleges, it increased by more than 3% to $53,430, according to the College Board, which tracks trends in college pricing and student aid.

Many students now borrow to cover the tab, which has already propelled collective student loan debt in the U.S. past $1.7 trillion.

Access, Outcomes, and Value: Envisioning the Future of Higher Education — from milkeninstitute.org with Jeff Selingo, Gene Block, Jim Gash, Eric Gertler, and Nicole Hurd

Leaders of colleges and universities face unprecedented challenges today. Tuition has more than doubled over the past two decades as state and federal funding has decreased. Renewed debates about affirmative action and legacy admissions are roiling many campuses and confusing students about what it takes to get accepted. Growing numbers of administrators are matched by declining student enrollment, placing new financial pressures on institutions of higher learning. And many prospective students and their parents are losing faith in the ROI of such an expensive investment and asking the simple question: Is it all worth it? Join distinguished leaders from public and private institutions for this panel discussion on how they are navigating these shifts and how they see the future of higher education.

What the New ‘U.S. News’ Law-School Rankings Reveal About the Rankings Enterprise — from chronicle.com by Francie Diep

Excerpt (emphasis DSC):

This year’s lists also offer a hint of how widespread the rankings revolt was. Seventeen medical schools and 62 law schools — nearly a third of the law schools U.S. News ranks — didn’t turn in data to the magazine this year. (It’s not clear what nonparticipation rates have been in the past. Reached by email to request historical context, a spokesperson for U.S. News pointed to webpages that are no longer online. U.S. News ranked law and medical schools that didn’t cooperate this year by using publicly available and past survey data.)

Report: Many borrowers who could benefit from income-driven repayment don’t know about it — from highereddive.com by Laura Spitalniak

Dive Brief:

- Student loan borrowers who would stand to benefit the most from income-driven repayment plans, or IDRs, are less likely to know about them, according to a new report from left-leaning think tank New America.

- Around 2 in 5 student-debt holders earning less than $30,000 a year reported being unfamiliar with the repayment plans. Under a proposed plan from the U.S. Education Department, IDR minimum monthly loan payments for low-income earners, such as this group, could drop to $0.

- Just under half of borrowers in default had not heard of IDRs, despite the plans offering a pathway to becoming current on their loans, the report said. Only one-third of currently defaulted borrowers had ever enrolled in IDR.

Addendum on 5/16/23:

AI-assisted cheating isn’t a temptation if students have a reason to care about their own learning.

Yesterday I happened to listen to two different podcasts that ended up resonating with one another and with an idea that’s been rattling around inside my head with all of this moral uproar about generative AI:

**If we trust students – and earn their trust in return – then they will be far less motivated to cheat with AI or in any other way.**

First, the question of motivation. On the Intentional Teaching podcast, while interviewing James Lang and Michelle Miller on the impact of generative AI, Derek Bruff points out (drawing on Lang’s Cheating Lessons book) that if students have “real motivation to get some meaning out of [an] activity, then there’s far less motivation to just have ChatGPT write it for them.” Real motivation and real meaning FOR THE STUDENT translate into an investment in doing the work themselves.

…

Then I hopped over to one of my favorite podcasts – Teaching in Higher Ed – where Bonni Stachowiak was interviewing Cate Denial about a “pedagogy of kindness,” which is predicated on trusting students and not seeing them as adversaries in the work we’re doing.

So the second key element: being kind and trusting students, which builds a culture of mutual respect and care that again diminishes the likelihood that they will cheat.

…

Again, human-centered learning design seems to address so many of the concerns and challenges of the current moment in higher ed. Maybe it’s time to actually practice it more consistently.