Information Age vs Generation Age Technologies for Learning — from opencontent.org by David Wiley

Remember (emphasis DSC):

- the internet eliminated time and place as barriers to education, and

- generative AI eliminates access to expertise as a barrier to education.

Just as instructional designs had to be updated to account for all the changes in affordances of online learning, they will need to be dramatically updated again to account for the new affordances of generative AI.

The Curious Educator’s Guide to AI | Strategies and Exercises for Meaningful Use in Higher Ed — from ecampusontario.pressbooks.pub by Kyle Mackie and Erin Aspenlieder; via Stephen Downes

This guide is designed to help educators and researchers better understand the evolving role of Artificial Intelligence (AI) in higher education. This openly-licensed resource contains strategies and exercises to help foster an understanding of AI’s potential benefits and challenges. We start with a foundational approach, providing you with prompts on aligning AI with your curiosities and goals.

The middle section of this guide encourages you to explore AI tools and offers some insights into potential applications in teaching and research. Along with exposure to the tools, we’ll discuss when and how to effectively build AI into your practice.

The final section of this guide includes strategies for evaluating and reflecting on your use of AI. Throughout, we aim to promote use that is effective, responsible, and aligned with your educational objectives. We hope this resource will be a helpful guide in making informed and strategic decisions about using AI-powered tools to enhance teaching and learning and research.

Annual Provosts’ Survey Shows Need for AI Policies, Worries Over Campus Speech — from insidehighered.com by Ryan Quinn

Many institutions are not yet prepared to help their faculty members and students navigate artificial intelligence. That’s just one of multiple findings from Inside Higher Ed’s annual survey of chief academic officers.

Only about one in seven provosts said their colleges or universities had reviewed the curriculum to ensure it will prepare students for AI in their careers. Thuswaldner said that number needs to rise. “AI is here to stay, and we cannot put our heads in the sand,” he said. “Our world will be completely dominated by AI and, at this point, we ain’t seen nothing yet.”

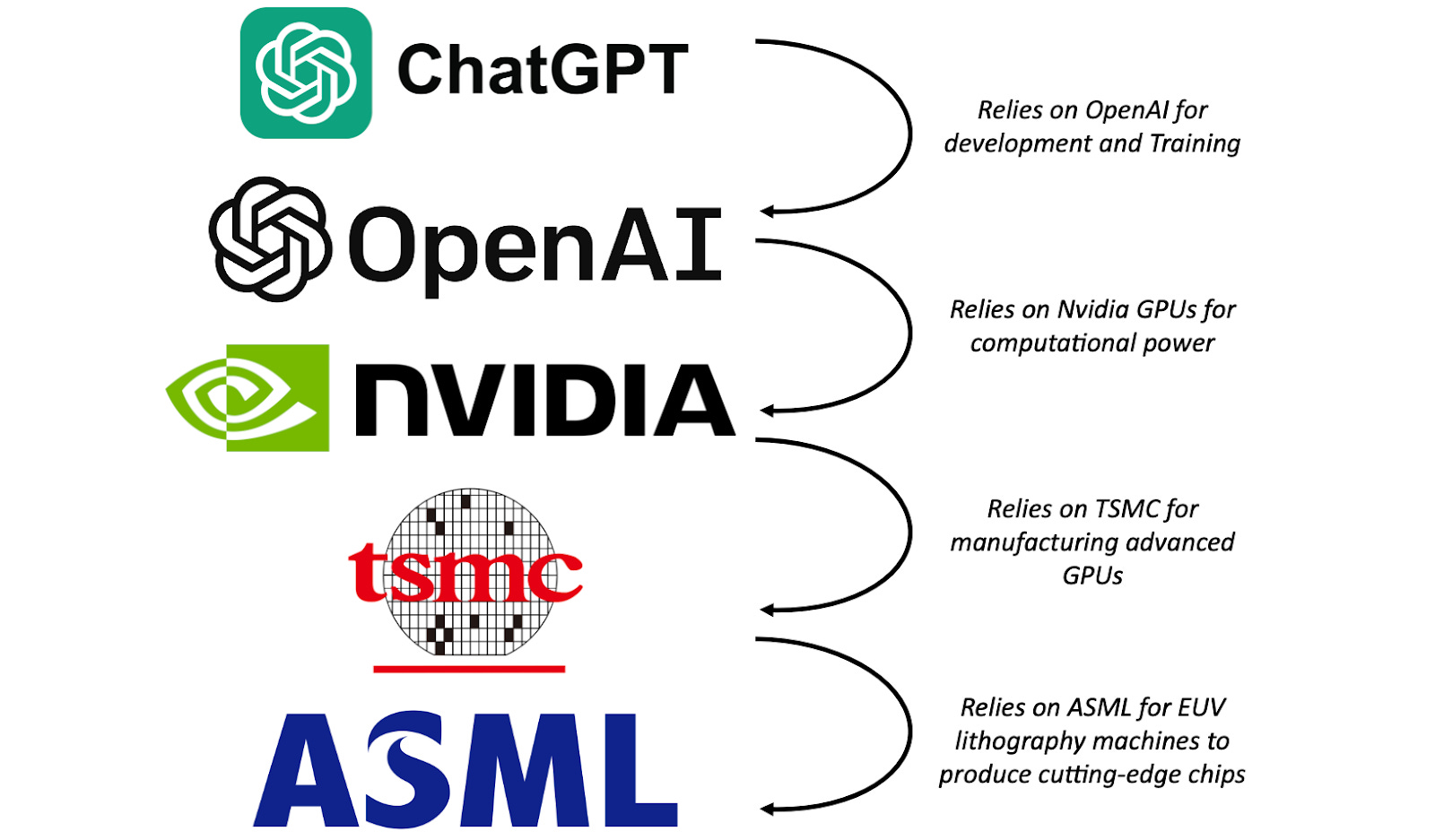

Is GenAI in education more of a Blackberry or iPhone? — from futureofbeinghuman.com by Andrew Maynard

There’s been a rush to incorporate generative AI into every aspect of education, from K-12 to university courses. But is the technology mature enough to support the tools that rely on it?

In other words, it’s going to mean investing in concepts, not products.

This, to me, is at the heart of an “iPhone mindset” as opposed to a “Blackberry mindset” when it comes to AI in education — an approach that avoids hard wiring in constantly changing technologies, and that builds experimentation and innovation into the very DNA of learning.

…

For all my concerns here though, maybe there is something to being inspired by the Blackberry/iPhone analogy — not as a playbook for developing and using AI in education, but as a mindset that embraces innovation while avoiding becoming locked in to apps that are detrimentally unreliable and that ultimately lead to dead ends.

Do teachers spot AI? Evaluating the detectability of AI-generated texts among student essays — from sciencedirect.com by Johanna Fleckenstein, Jennifer Meyer, Thorben Jansen, Stefan D. Keller, Olaf Köller, and Jens Möller

Highlights

- Randomized-controlled experiments investigating novice and experienced teachers’ ability to identify AI-generated texts.

- Generative AI can simulate student essay writing in a way that is undetectable for teachers.

- Teachers are overconfident in their source identification.

- AI-generated essays tend to be assessed more positively than student-written texts.

Can Using a Grammar Checker Set Off AI-Detection Software? — from edsurge.com by Jeffrey R. Young

A college student says she was falsely accused of cheating, and her story has gone viral. Where is the line between acceptable help and cheating with AI?

Use artificial intelligence to get your students thinking critically — from timeshighereducation.com by Urbi Ghosh

When crafting online courses, teaching critical thinking skills is crucial. Urbi Ghosh shows how generative AI can shape how educators can approach this.

ChatGPT shaming is a thing – and it shouldn’t be — from futureofbeinghuman.com by Andrew Maynard

There’s a growing tension between early and creative adopters of text based generative AI and those who equate its use with cheating. And when this leads to shaming, it’s a problem.

Excerpt (emphasis DSC):

This will sound familiar to anyone who’s incorporating generative AI into their professional workflows. But there are still many people who haven’t used apps like ChatGPT, are largely unaware of what they do, and are suspicious of them. And yet they’ve nevertheless developed strong opinions around how they should and should not be used.

From DSC:

Yes…that sounds like how many faculty members viewed online learning, even though they had never taught online before.
