How to Stanch Enrollment Loss — from chronicle.com by Jeff Selingo

It’s time to stop pretending the problem will fix itself.

Excerpt:

The latest enrollment numbers from the National Student Clearinghouse Research Center, for the fall of 2022, paint an ominous picture for higher education coming out of the pandemic. Even in what many college leaders have called a “normal” fall on campuses, enrollment was down 1.1 percent across all sectors. And while the drop was smaller than the past two Covid-stricken fall semesters, colleges across every sector still have lost more than a million students since the fall of 2019.

At some point, colleges need to stop blaming the students who sat out the pandemic or the economic factors and social forces buffeting higher education for enrollment losses. Instead, institutions should look at whether the student experience they’re offering and the outcomes they’re promising provide students with a sense of belonging in the classroom and on campus and ultimately a purpose for their education.

The Key Podcast | Ep.91: The Pros and Cons of HyFlex Instruction — from insidehighered.com with Doug Lederman, Enilda Romero-Hall and Alanna Gillis

Excerpt:

During the pandemic, many colleges and universities embraced a form of blended learning called HyFlex, to mixed reviews. Is it likely to be part of colleges’ instructional strategy going forward?

This week’s episode of The Key explores HyFlex, in which students in a classroom learn synchronously alongside a cohort of peers studying remotely. HyFlex moved from a fringe phenomenon to the mainstream during the COVID-19 pandemic, and the experience was imperfect at best, for professors and students alike.

This conversation about the teaching modality features two professors who have both taught in the HyFlex format and done research on its impact.

From DSC:

When I worked for a law school, we had a Weekend Blended Learning Program. Student evaluations of these courses consistently mentioned that the WBLP-based courses saved many students hundreds of dollars for each class that we offered online (i.e., cost savings in flights, hotels, meals, rental cars, parking fees, etc.).

Another thought/idea:

- What if traditional institutions of higher education were to offer tiered pricing? That is, perhaps students participating remotely could listen in and even audit classes, but pay less.

Colleges should use K-12 performance assessments for course placement, report says — from highereddive.com by Jeremy Bauer-Wolf

Dive Brief:

- Colleges should use K-12 performance assessments like capstone papers or portfolios for student course placements and advising, according to a recent report.

- Typical methods of determining students’ placement in early college classes — like standardized tests — don’t fully illustrate their interests and academic potential, according to the report, which was published by postsecondary education access group Complete College America. Conversely, K-12 performance assessments ask students to demonstrate real-world skills, often in a way that ends with a tangible product.

- The organizations recommend colleges and K-12 schools mesh their processes, such as by mutually developing a high school graduation requirement around performance assessments. This would help strengthen the K-12 school-college relationship and ease students’ transition from high school to college, the report states.

From DSC:

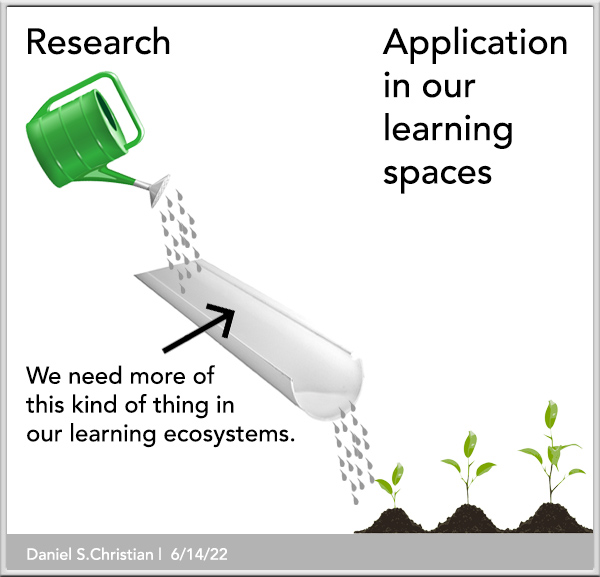

I post this particular item because I like the tighter integration that’s being recommended between K-12 and higher education. It seems like better overall learning ecosystems design, design thinking, and on-ramping.

Along these lines, also see:

How Higher Ed Can Help Remedy K-12 Learning Losses — from insidehighered.com by Johanna Alonso

Low national scores have spurred discussion of how K-12 schools can improve student performance. Experts think institutions of higher education can help.

Excerpt:

Now educators at all levels are talking about ways to reverse the declines. Higher education leaders have already added supports for college students who suffered pandemic-related learning losses; many now aim to expand their efforts to help K-12 students who will eventually arrive on their campuses potentially with even more ground to make up.

It’s hard to tell yet what these supports will look like, but some anticipate they will involve strengthening the developmental education infrastructure that already exists for underprepared students. Others believe universities must play a role in the interventions currently ongoing at the K-12 level.

Also see:

CIN EdTech Student Survey | October 2022 — from wgulabs.org

Excerpt:

Our report shares three key takeaways:

- Students’ experiences with technology-enabled learning have improved since 2021.

- Students want online learning but institutions must overcome perceptions of lower learning quality.

- Students feel generally positive about an online-enabled future for higher education, but less so for themselves.

5 things colleges can do to help save the planet from climate change — from highereddive.com by Anthony Knerr

A strategy consultant explores ways colleges can improve sustainability.

Overwhelming demand for online classes is reshaping California’s community colleges — from latimes.com by Debbie Truong; with thanks to Ray Schroeder out on LinkedIn for this resource

Excerpt:

Gallegos is among the thousands of California community college students who have changed the way they are pursuing higher education by opting for online classes in eye-popping numbers. The demand for virtual classes represents a dramatic shift in how instruction is delivered in one of the nation’s largest systems of public higher education and stands as an unexpected legacy of the pandemic.

Labster Hits Milestone of 300 Virtual Science Lab Simulations — from businesswire.com

Award-winning edtech pioneer adds new STEM titles and extensive product enhancements for interactive courseware for universities, colleges, and high schools

Excerpt:

Labster provides educators with the ability to digitally explore and enhance their science offerings and supplement their in-classroom activities. Labster virtual simulations in fields such as biology, biochemistry, genetics, biotechnology, chemistry, and physics are especially useful for pre- and post-lab assignments, so science department leads can fully optimize the time students spend on-site in high-demand physical laboratories.

AAA partners with universities to develop tech talent — from ciodive.com by Lindsey Wilkinson

Through tech internships and for-credit opportunities, the auto club established a talent pipeline that has led to new feature development.

5 enrollment trends to keep an eye on for fall 2022 — from highereddive.com by Natalie Schwartz

Although undergraduate and graduate enrollment are both down, some types of institutions saw notable increases, including HBCUs and online colleges.