YouTube tests AI-generated quizzes on educational videos — from techcrunch.com by Lauren Forristal

YouTube is experimenting with AI-generated quizzes on its mobile app for iOS and Android devices, which are designed to help viewers learn more about a subject featured in an educational video. The feature will also help the video-sharing platform get a better understanding of how well each video covers a certain topic.

Incorporating AI in Teaching: Practical Examples for Busy Instructors — from danielstanford.substack.com by Daniel Stanford; with thanks to Derek Bruff on LinkedIn for the resource

Since January 2023, I’ve talked with hundreds of instructors at dozens of institutions about how they might incorporate AI into their teaching. Through these conversations, I’ve noticed a few common issues:

- Faculty and staff are overwhelmed and burned out. Even those on the cutting edge often feel they’re behind the curve.

- It’s hard to know where to begin.

- It can be difficult to find practical examples of AI use that are applicable across a variety of disciplines.

To help address these challenges, I’ve been working on a list of AI-infused learning activities that encourage experimentation in (relatively) small, manageable ways.

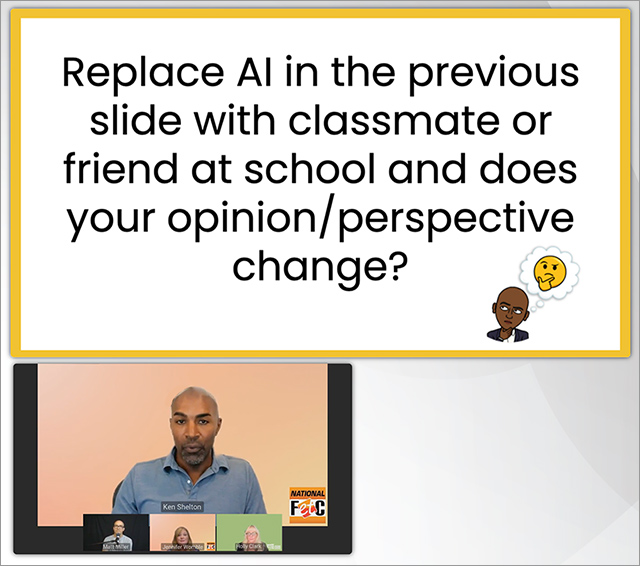

September 2023: The Secret Intelligent Beings on Campus — from stefanbauschard.substack.com by Stefan Bauschard

Many of your students this fall will be enhanced by artificial intelligence, even if they don’t look like actual cyborgs. Do you want all of them to be enhanced, or just the highest SES students?

How to report better on artificial intelligence — from cjr.org (Columbia Journalism Review) by Sayash Kapoor, Hilke Schellmann, and Ari Sen

In the past few months we have been deluged with headlines about new AI tools and how much they are going to change society.

Some reporters have done amazing work holding the companies developing AI accountable, but many struggle to report on this new technology in a fair and accurate way.

We—an investigative reporter, a data journalist, and a computer scientist—have firsthand experience investigating AI. We’ve seen the tremendous potential these tools can have—but also their tremendous risks.

As their adoption grows, we believe that, soon enough, many reporters will encounter AI tools on their beat, so we wanted to put together a short guide to what we have learned.


DSC:

Something I created via Adobe Firefly (Beta version)

The 5 reasons L&D is going to embrace ChatGPT — from chieflearningofficer.com by Josh Bersin

Does this mean it will do away with the L&D job? Not at all — these tools give you superhuman powers to find content faster, put it in front of employees in a more useful way and more creatively craft character simulations, assessments, learning in the flow of work and more.

And it’s about time. We really haven’t had a massive innovation in L&D since the early days of the learning experience platform market, so we may be entering the most exciting era in a long time.

Let me give you the five most significant use cases I see. And more will come.

AI and Tech with Scenarios: ID Links 7/11/23 — from christytuckerlearning.com by Christy Tucker

As I read online, I bookmark resources I find interesting and useful. I share these links periodically here on my blog. This post includes links on using tech with scenarios: AI, xAPI, and VR. I’ll also share some other AI tools and links on usability, resume tips for teachers, visual language, and a scenario sample.

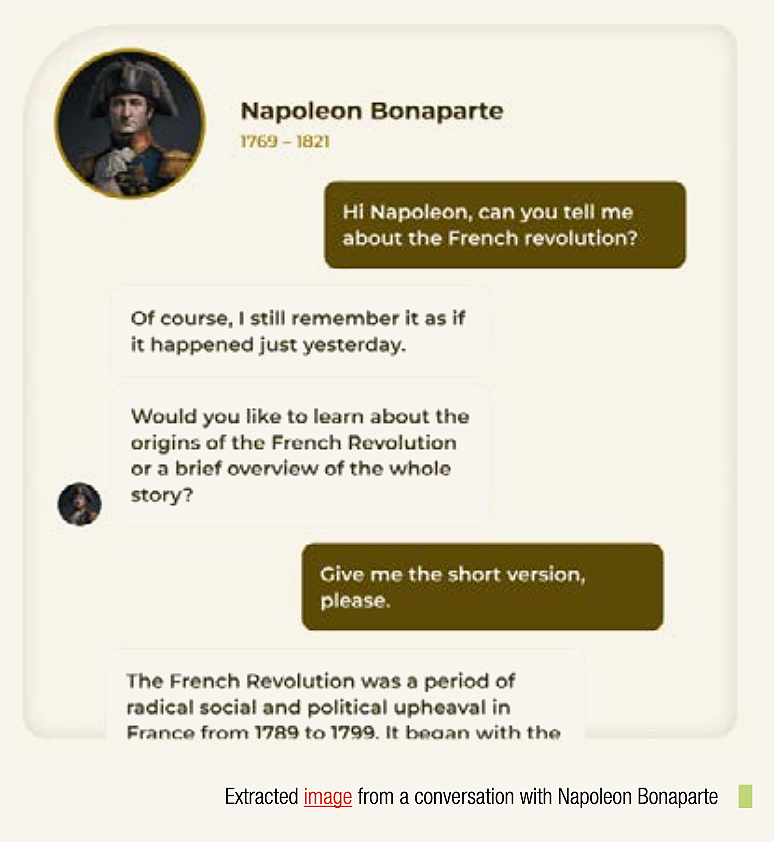

It’s only a matter of time before A.I. chatbots are teaching in primary schools — from cnbc.com by Mikaela Cohen

Key Points

- Microsoft co-founder Bill Gates says generative AI chatbots can teach kids to read in 18 months rather than years.

- Artificial intelligence is beginning to prove that it can accelerate the impact teachers have on students and help solve a stubborn teacher shortage.

- Chatbots backed by large language models can help students, from primary education to certification programs, self-guide through voluminous materials and tailor their education to specific learning styles [preferences].

The Rise of AI: New Rules for Super T Professionals and Next Steps for EdLeaders — from gettingsmart.com by Tom Vander Ark

Key Points

- The rise of artificial intelligence, especially generative AI, boosts productivity in content creation: text, code, images and increasingly video.

- Here are six preliminary conclusions about the nature of work and learning.

The Future Of Education: Embracing AI For Student Success — from forbes.com by Dr. Michael Horowitz

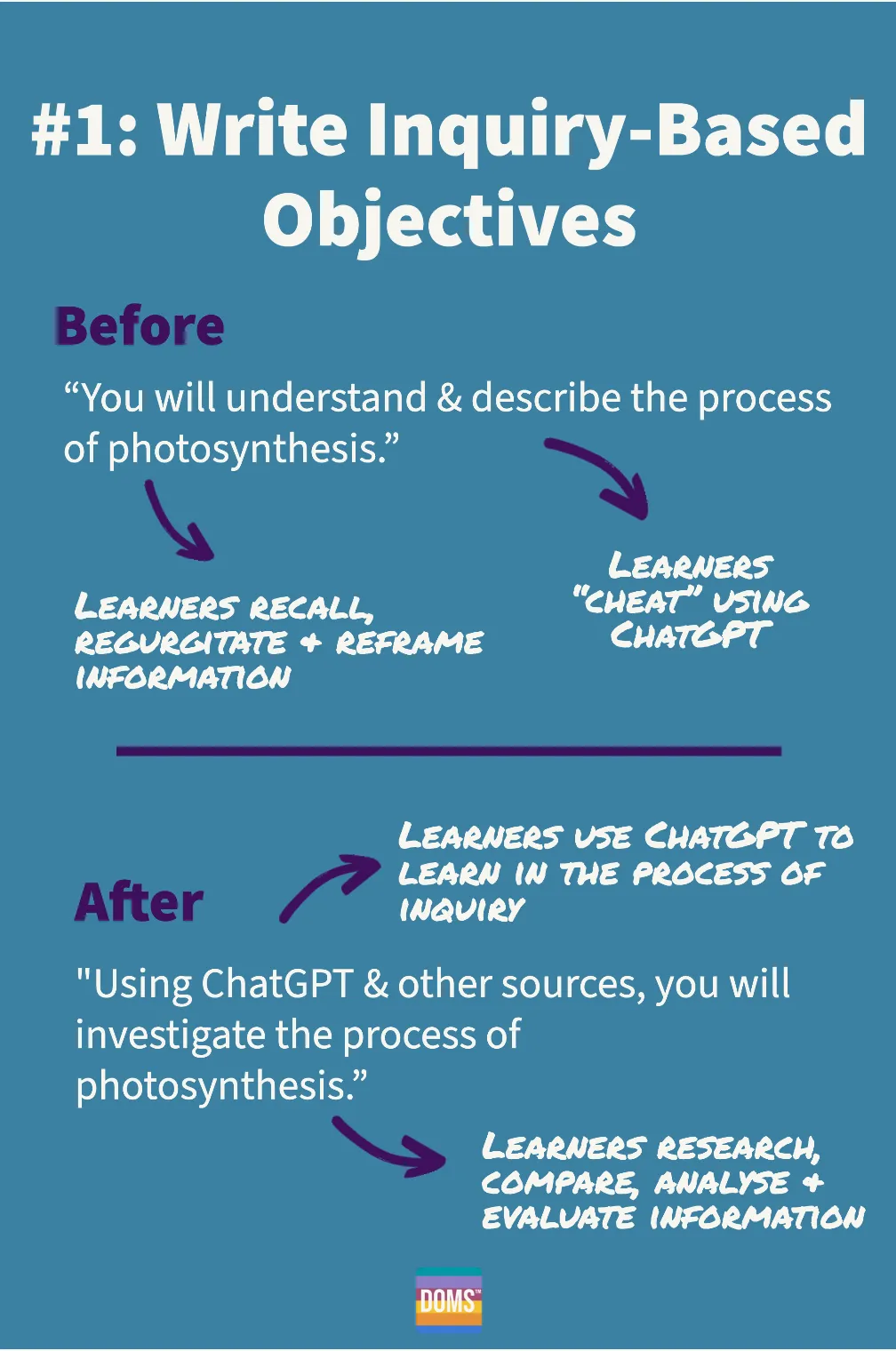

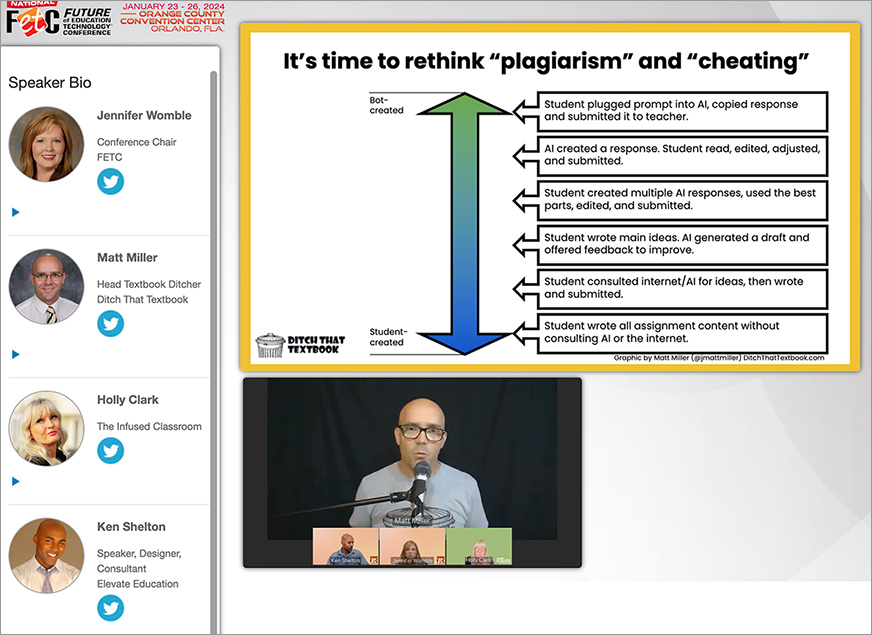

Unfortunately, too often attention is focused on the problems of AI—that it allows students to cheat and can undermine the value of what teachers bring to the learning equation. This viewpoint ignores the immense possibilities that AI can bring to education and across every industry.

The fact is that students have already embraced this new technology, which is neither a new story nor a surprising one in education. Leaders should accept this and understand that people, not robots, must ultimately create the path forward. It is only by deploying resources, training and policies at every level of our institutions that we can begin to realize the vast potential of what AI can offer.

AI Tools in Education: Doing Less While Learning More — from campustechnology.com by Mary Grush

A Q&A with Mark Frydenberg

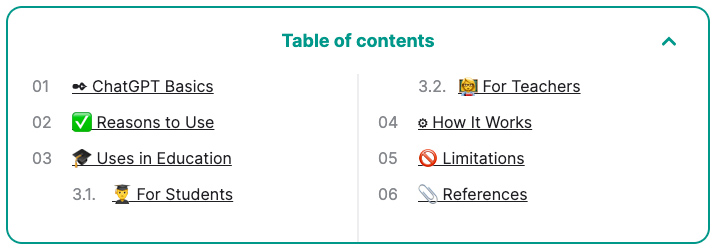

Why Students & Teachers Should Get Excited about ChatGPT — from ivypanda.com with thanks to Ruth Kinloch for this resource

Excerpt re: Uses of ChatGPT for Teachers

- Diverse assignments.

- Individualized approach.

- Interesting classes.

- Debates.

- Critical thinking.

- Grammar and vocabulary.

- Homework review.

SAIL: State of Research: AI & Education — from buttondown.email by George Siemens

Information re: current AI and Learning Labs, education updates, and technology

Why ethical AI requires a future-ready and inclusive education system — from weforum.org

A specter is haunting higher education — from aiandacademia.substack.com by Bryan Alexander

Fall semester after the generative AI revolution

In this post I’d like to explore that apocalyptic model. For reasons of space, I’ll leave off analyzing student cheating motivations or questioning the entire edifice of grade-based assessment. I’ll save potential solutions for another post.

Let’s dive into the practical aspects of teaching to see why Mollick and Bogost foresee such a dire semester ahead.

Items re: Code Interpreter

Code Interpreter becoming available for all ChatGPT Plus users over the next week. Really amazing for any data science use case: https://t.co/hpel8xKyEg pic.twitter.com/Fd3SnPvVmT

— Greg Brockman (@gdb) July 6, 2023

I put together an initial prompt to set up Code Interpreter to create useful data visualizations. It gives it some basic principles of good chart design & also reminds it that it can output many kinds of files.

It is a first draft, feel free to improve: https://t.co/m4yAdKROiJ pic.twitter.com/r5A7PznqSC

— Ethan Mollick (@emollick) July 10, 2023

- What AI can do with a toolbox… Getting started with Code Interpreter — from oneusefulthing.org by Ethan Mollick

Democratizing data analysis with AI

Code Interpreter continues OpenAI’s long tradition of giving terrible names to things, because it might be most useful for those who do not code at all. It essentially allows the most advanced AI available, GPT-4, to upload and download information, and to write and execute programs for you in a persistent workspace. That allows the AI to do all sorts of things it couldn’t do before, and be useful in ways that were impossible with ChatGPT.


BREAKING: Code Interpreter is FINALLY rolling out to all ChatGPT Plus users.

It’s the most powerful feature OpenAI has released since GPT-4. It makes everyone a data analyst.

Here are 15 mind-blowing use cases of Code Interpreter: pic.twitter.com/qX0txynENS

— Aakash Gupta, Product Growth Guy (@aakashg0) July 7, 2023

Legal items

- 2 authors say OpenAI ‘ingested’ their books to train ChatGPT. Now they’re suing, and a ‘wave’ of similar court cases may follow. — from businessinsider.com by Gabriel Rivera

- Authors Are Suing OpenAI & Meta For Copyright Infringement — from theneurondaily.com by Noah Edelman

MISC items

- America’s first law regulating AI bias in hiring takes effect this week — from downes.ca by Stephen Downes

- Eric Schmidt: This is how AI will transform the way science gets done — from technologyreview.com by Eric Schmidt

Science is about to become much more exciting—and that will affect us all, argues Google’s former CEO.

- Microsoft unveils first professional certificate for generative AI skills — from zdnet.com by Lance Whitney

With the new AI Skills Initiative, people can take free online training via LinkedIn to learn concepts of AI good toward a Career Essentials certificate.

- What is Claude 2? How to access this ChatGPT competitor. — from mashable.com by Cecily Mauran

It’s a decent alternative, and it’s easy to use.

- Claude 2 — from anthropic.com