2024-11-22: The Race to the Top: Dario Amodei on AGI, Risks, and the Future of Anthropic — from emergentbehavior.co by Prakash (Ate-a-Pi)

Risks on the Horizon: ASL Levels

The two key risks Dario is concerned about are:

a) cyber and CBRN (chemical, biological, radiological, nuclear) misuse

b) model autonomy

These risks are captured in Anthropic’s framework for understanding AI Safety Levels (ASL):

1. ASL-1: Narrow-task AI like Deep Blue (no autonomy, minimal risk).

2. ASL-2: Current systems like ChatGPT/Claude, which lack autonomy and don’t pose significant risks beyond information already accessible via search engines.

3. ASL-3: Agents arriving soon (potentially next year) that can meaningfully assist non-state actors in dangerous activities like cyber or CBRN (chemical, biological, radiological, nuclear) attacks. Security and filtering are critical at this stage to prevent misuse.

4. ASL-4: AI smart enough to evade detection, deceive testers, and assist state actors with dangerous projects. At this level the model is strong enough that anyone wanting to do something dangerous would want to use it to do so. Mechanistic interpretability becomes crucial for verifying AI behavior.

5. ASL-5: AGI surpassing human intelligence in all domains, posing unprecedented challenges.

Anthropic’s if/then framework ensures proactive responses: if a model demonstrates dangerous capabilities, the team clamps down hard and enforces strict controls.

Should You Still Learn to Code in an A.I. World? — from nytimes.com by

Coding boot camps once looked like the golden ticket to an economically secure future. But as that promise fades, what should you do? Keep learning, until further notice.

Compared with five years ago, the number of active job postings for software developers has dropped 56 percent, according to data compiled by CompTIA. For inexperienced developers, the plunge is an even worse 67 percent.

“I would say this is the worst environment for entry-level jobs in tech, period, that I’ve seen in 25 years,” said Venky Ganesan, a partner at the venture capital firm Menlo Ventures.

For years, the career advice from everyone who mattered — the Apple chief executive Tim Cook, your mother — was “learn to code.” It felt like an immutable equation: Coding skills + hard work = job.

Now the math doesn’t look so simple.

Also see:

AI builds apps in 2 mins flat — from The Neuron, which includes this excerpt about Lovable:

There’s a new coding startup in town, and it just MIGHT have everybody else shaking in their boots (we’ll qualify that in a sec, don’t worry).

It’s called Lovable, the “world’s first AI fullstack engineer.”

…

Lovable does all of that by itself. Tell it what you want to build in plain English, and it creates everything you need. Want users to be able to log in? One click. Need to store data? One click. Want to accept payments? You get the idea.

Early users are backing up these claims. One person even launched a startup that made Product Hunt’s top 10 using just Lovable.

As for us, we made a Wordle clone in 2 minutes with one prompt. Only edit needed? More words in the dictionary. It’s like, really easy y’all.

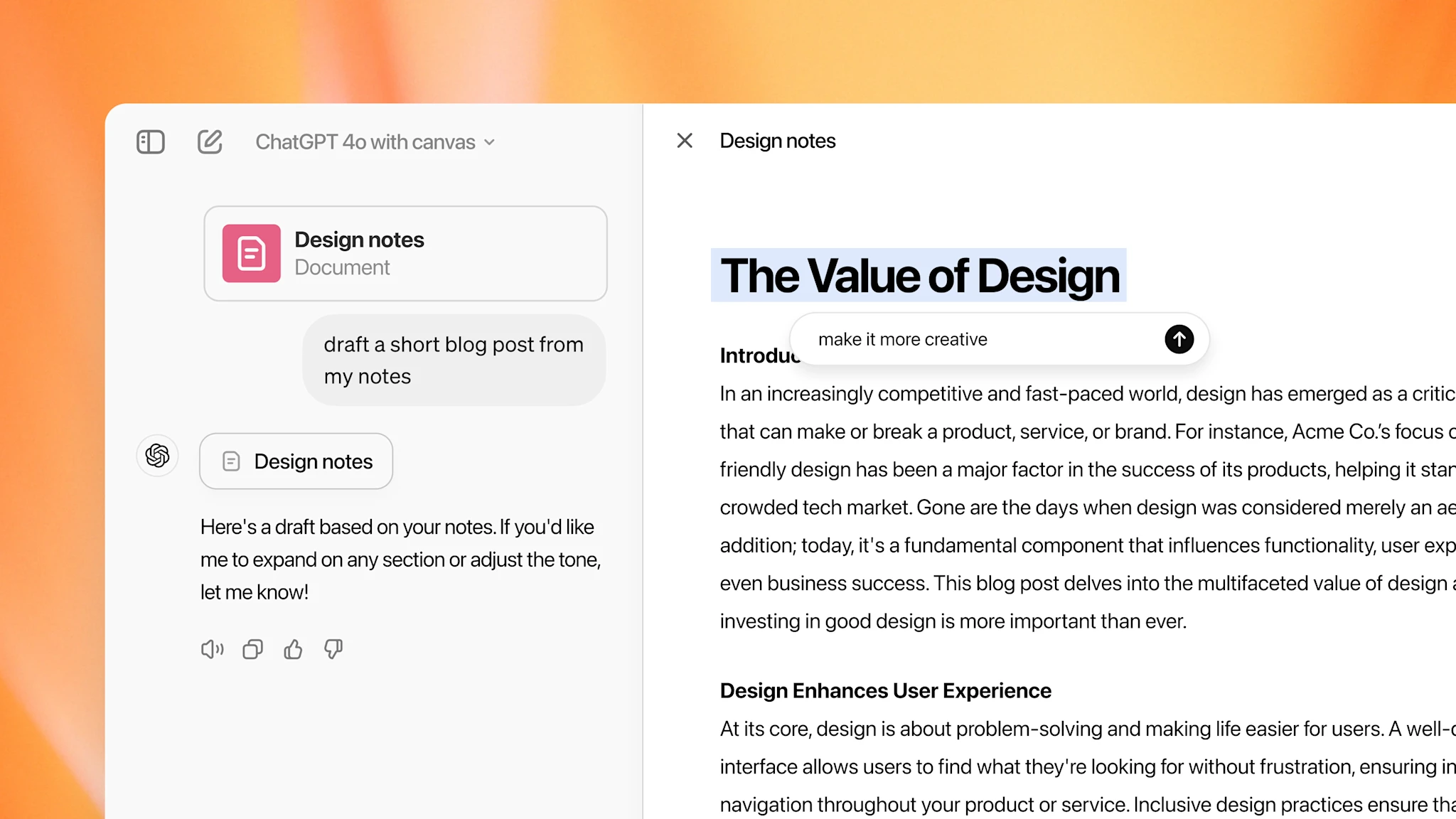

When to chat with AI (and when to let it work) — from aiwithallie.beehiiv.com by Allie K. Miller

Re: some ideas on how to use NotebookLM:

- Turn your company’s annual report into an engaging podcast

- Create an interactive FAQ for your product manual

- Generate a timeline of your industry’s history from multiple sources

- Produce a study guide for your online course content

- Develop a Q&A system for your company’s knowledge base

- Synthesize research papers into digestible summaries

- Create an executive content briefing from multiple competitor blog posts

- Generate a podcast discussing the key points of a long-form research paper

Introducing conversation practice: AI-powered simulations to build soft skills — from codesignal.com by Albert Sahakyan

From DSC:

I have to admit I’m a bit suspicious here, as the “conversation practice” product seems a bit too scripted at times, but I post it because the idea of using AI to practice and develop soft skills makes a great deal of sense.

This is mind-blowing!

NVIDIA has introduced Edify 3D, a 3D AI generator that lets us create high-quality 3D scenes using just a simple prompt. And all the assets are fully editable!

This is going to revolutionise the way we create!

5 Examples: pic.twitter.com/5yWlvL1cWD

— el.cine (@EHuanglu) November 24, 2024