San Diego’s Nanome Inc. releases collaborative VR-STEM software for free — from vrscout.com by Becca Loux

Excerpt:

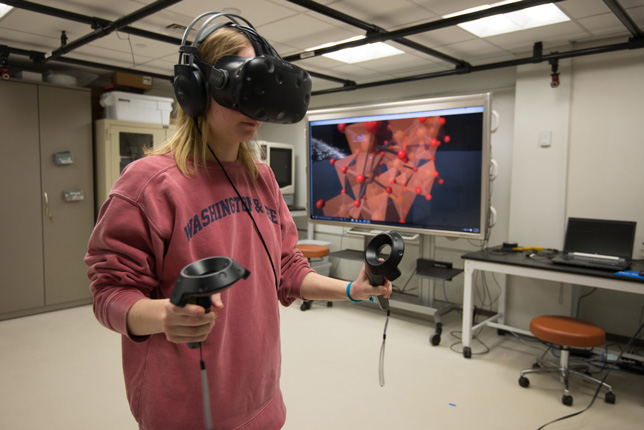

The first collaborative VR molecular modeling application was released August 29 to encourage hands-on chemistry experimentation.

The open-source tool is free for download now on Oculus and Steam.

Nanome Inc., the San Diego-based start-up that built the intuitive application, comprises UCSD professors and researchers, web developers and top-level pharmaceutical executives.

“With our tool, anyone can reach out and experience science at the nanoscale as if it is right in front of them. At Nanome, we are bringing the craftsmanship and natural intuition from interacting with these nanoscale structures at room scale to everyone,” McCloskey said.

10 ways VR will change life in the near future — from forbes.com

Excerpts:

- Virtual shops

- Real estate

- Dangerous jobs

- Health care industry

- Training to create VR content

- Education

- Emergency response

- Distraction simulation

- New hire training

- Exercise

From DSC:

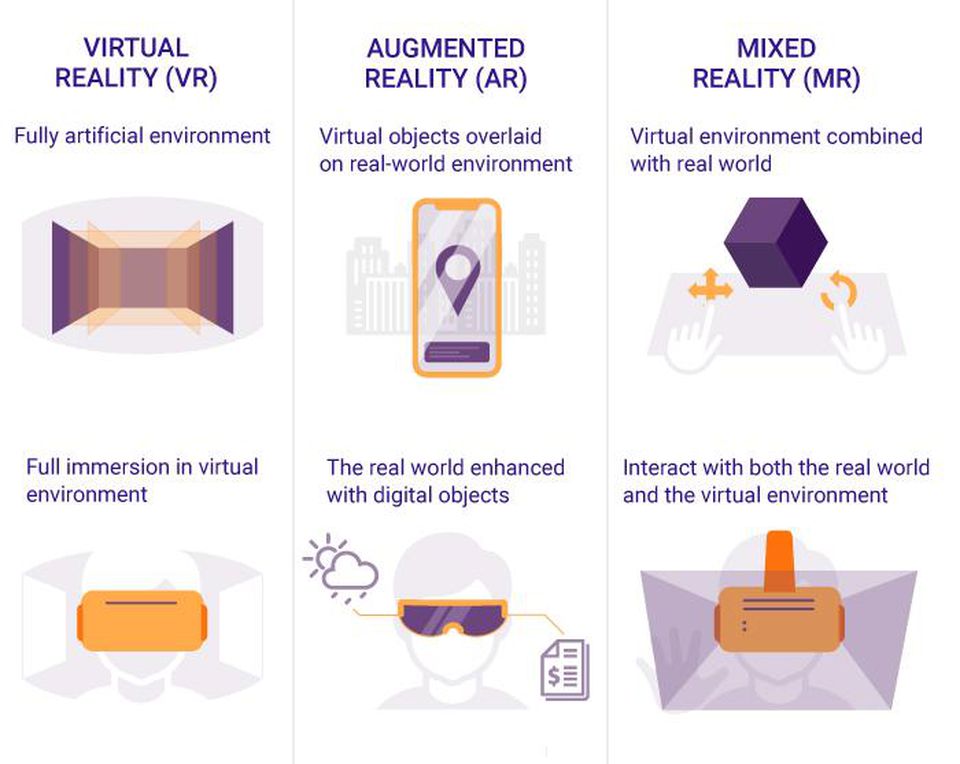

While VR will have its place, especially for times when you need to completely immerse yourself in another environment, I think AR and MR will be much larger and have a greater variety of applications. For example, I could see future assembly instructions using AR and/or MR to guide me through the process. The system could highlight the next part that I'm looking for, then highlight where that part goes, and, if requested, show me a clip of how it fits into what I'm trying to put together.

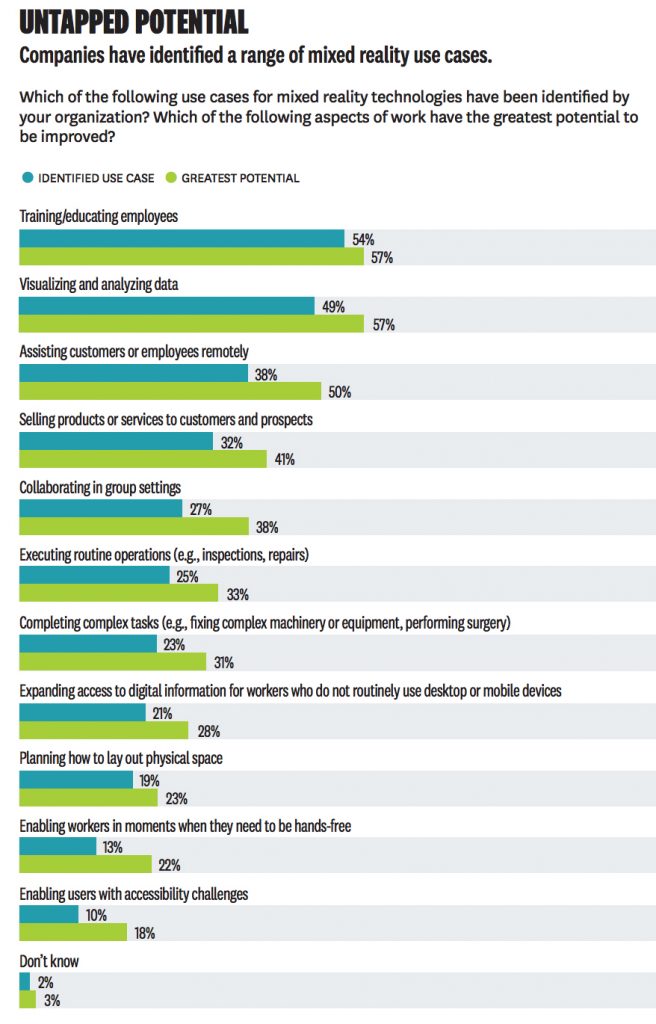

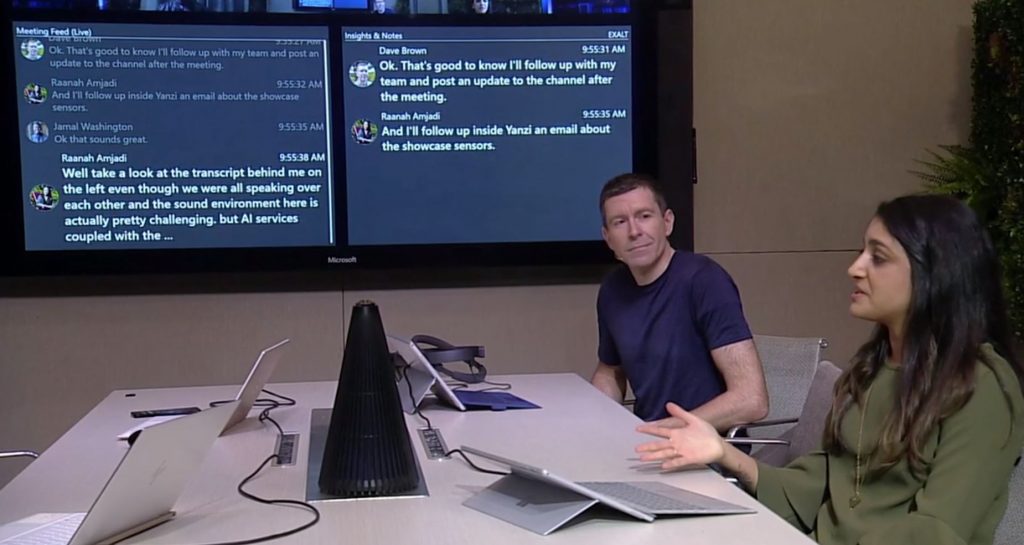

How MR turns firstline workers into change agents — from virtualrealitypop.com by Charlie Fink

Mixed Reality, a new dimension of work — from Microsoft and Harvard Business Review

Excerpts:

"…workers with mixed-reality solutions that enable remote assistance, spatial planning, environmentally contextual data, and much more," Bardeen told me. With HoloLens, Firstline Workers conduct their usual day-to-day activities with the added benefit of a heads-up, hands-free display that gives them immediate access to valuable, contextual information. Microsoft says speech services like Cortana will be critical for control, along with gestures, according to the unique needs of each situation.

Expect new worker roles. What constitutes an “information worker” could change because mixed reality will allow everyone to be involved in the collection and use of information. Many more types of information will become available to any worker in a compelling, easy-to-understand way.

Let’s Speak: VR language meetups — from account.altvr.com

![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)