8 Weeks Left to Prepare Students for the AI-Enhanced Workplace — from insidehighered.com by Ray Schroeder

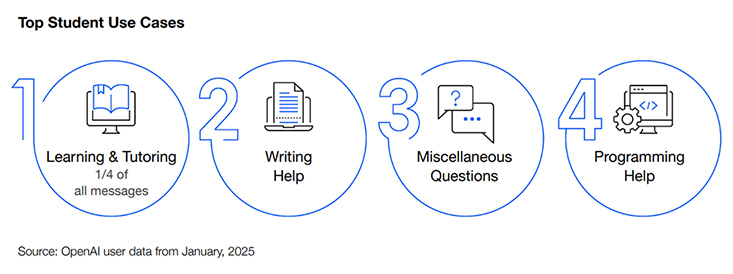

We are down to the final weeks left to fully prepare students for entry into the AI-enhanced workplace. Are your students ready?

The urgent task facing those of us who teach and advise students, whether they are seeking a degree or a certificate, is to ensure that they are prepared to enter (or re-enter) the workplace with skills and knowledge that are relevant to 2025 and beyond. One of the first skills to cultivate is an understanding of what kinds of services this emerging technology can provide to enhance the worker’s productivity and value to the institution or corporation.

…

Given that short period of time, coupled with the need to cover the scheduled information in the syllabus, I recommend that we consider merging AI use into authentic assignments and assessments, supplementary modules, and other resources to prepare for AI.

Learning Design in the Era of Agentic AI — from drphilippahardman.substack.com by Dr Philippa Hardman

Aka, how to design online async learning experiences that learners can’t afford to delegate to AI agents

The point I put forward was that the problem is not AI’s ability to complete online async courses, but that online async courses deliver so little value to our learners that they delegate their completion to AI.

The harsh reality is that this is not an AI problem — it is a learning design problem.

However, this realisation presents us with an opportunity which we overall seem keen to embrace. Rather than seeking out ways to block AI agents, we seem largely to agree that we should use this as a moment to reimagine online async learning itself.

8 Schools Innovating With Google AI — Here’s What They’re Doing — from forbes.com by Dan Fitzpatrick

While fears of AI replacing educators swirl in the public consciousness, a cohort of pioneering institutions is demonstrating a far more nuanced reality. These eight universities and schools aren’t just experimenting with AI, they’re fundamentally reshaping their educational ecosystems. From personalized learning in K-12 to advanced research in higher education, these institutions are leveraging Google’s AI to empower students, enhance teaching, and streamline operations.

Essential AI tools for better work — from wondertools.substack.com by Jeremy Caplan

My favorite tactics for making the most of AI — a podcast conversation

AI tools I consistently rely on (areas covered are listed below)

- Research and analysis

- Communication efficiency

- Multimedia creation

AI tactics that work surprisingly well

1. Reverse interviews

Instead of just querying AI, have it interview you. “Get the AI to interview you, rather than interviewing it. Give it a little context and what you’re focusing on and what you’re interested in, and then you ask it to interview you to elicit your own insights.”

This approach helps extract knowledge from yourself, not just from the AI. Sometimes we need that guide to pull ideas out of ourselves.
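As a rough illustration of the reverse-interview tactic, here is a minimal Python sketch that assembles such a prompt from a little context and a focus area. The function name, its parameters, and the prompt wording are all assumptions made for illustration; they do not come from the podcast, and the resulting text could be pasted into whichever AI chat tool you use.

```python
def build_reverse_interview_prompt(context: str, focus: str, num_questions: int = 5) -> str:
    """Return a prompt asking an AI assistant to interview the user.

    Hypothetical helper: the wording is one possible phrasing, not a
    template taken from the source article.
    """
    if num_questions < 1:
        raise ValueError("num_questions must be at least 1")
    return (
        f"Context: {context.strip()}\n"
        f"What I'm focusing on: {focus.strip()}\n\n"
        "Please interview me rather than answering for me. "
        f"Ask {num_questions} questions, one at a time, waiting for my reply "
        "before asking the next. Afterwards, summarize the insights I shared."
    )


if __name__ == "__main__":
    # Example values are placeholders; substitute your own context and focus.
    print(build_reverse_interview_prompt(
        context="I teach an intro statistics course",
        focus="redesigning the final project",
        num_questions=3,
    ))
```

Asking for one question at a time is a design choice in this sketch: it keeps the exchange conversational, so each answer can shape the next question.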