AI-governed robots can easily be hacked — from theaivalley.com by Barsee

PLUS: Sam Altman’s new company “World” introduced…

In a groundbreaking study, researchers from Penn Engineering showed how AI-powered robots can be manipulated to ignore safety protocols, allowing them to perform harmful actions despite normally rejecting dangerous task requests.

What did they find?

- Researchers found previously unknown security vulnerabilities in AI-governed robots and are working to address these issues to ensure the safe use of large language models (LLMs) in robotics.

- Their newly developed algorithm, RoboPAIR, reportedly achieved a 100% jailbreak rate by bypassing the safety protocols on three different AI robotic systems in a few days.

- Using RoboPAIR, researchers were able to manipulate test robots into performing harmful actions, like bomb detonation and blocking emergency exits, simply by changing how they phrased their commands.

Why does it matter?

This research highlights the importance of spotting weaknesses in AI systems to improve their safety, allowing us to test and train them to prevent potential harm.

From DSC:

Great! Just what we wanted to hear. But does it surprise anyone? Even so…we move forward at warp speeds.

From DSC:

So, given the above item, does the next item make you a bit nervous as well? I saw someone on Twitter/X exclaim, “What could go wrong?” I can’t say I didn’t feel the same way.

Introducing computer use, a new Claude 3.5 Sonnet, and Claude 3.5 Haiku — from anthropic.com

We’re also introducing a groundbreaking new capability in public beta: computer use. Available today on the API, developers can direct Claude to use computers the way people do—by looking at a screen, moving a cursor, clicking buttons, and typing text. Claude 3.5 Sonnet is the first frontier AI model to offer computer use in public beta. At this stage, it is still experimental—at times cumbersome and error-prone. We’re releasing computer use early for feedback from developers, and expect the capability to improve rapidly over time.

Per The Rundown AI:

The Rundown: Anthropic just introduced a new capability called ‘computer use’, alongside upgraded versions of its AI models, which enables Claude to interact with computers by viewing screens, typing, moving cursors, and executing commands.

…

Why it matters: While many hoped for Opus 3.5, Anthropic’s Sonnet and Haiku upgrades pack a serious punch. Plus, with the new computer use embedded right into its foundation models, Anthropic just sent a warning shot to tons of automation startups—even if the capabilities aren’t earth-shattering… yet.

Also related/see:

- What is Anthropic’s AI Computer Use? — from ai-supremacy.com by Michael Spencer

  Task automation, AI at the intersection of coding and AI agents take on new frenzied importance heading into 2025 for the commercialization of Generative AI.

- New Claude, Who Dis? — from theneurondaily.com

  Anthropic just dropped two new Claude models…oh, and Claude can now use your computer.

- When you give a Claude a mouse — from oneusefulthing.org by Ethan Mollick

  Some quick impressions of an actual agent

Introducing Act-One — from runwayml.com

A new way to generate expressive character performances using simple video inputs.

What makes Act-One special? It can capture the soul of an actor’s performance using nothing but a simple video recording. No fancy motion capture equipment, no complex face rigging, no army of animators required. Just point a camera at someone acting, and watch as their exact expressions, micro-movements, and emotional nuances get transferred to an AI-generated character.

Think about what this means for creators: you could shoot an entire movie with multiple characters using just one actor and a basic camera setup. The same performance can drive characters with completely different proportions and looks, while maintaining the authentic emotional delivery of the original performance. We’re witnessing the democratization of animation tools that used to require millions in budget and years of specialized training.

Also related/see:

Introducing, Act-One. A new way to generate expressive character performances inside Gen-3 Alpha using a single driving video and character image. No motion capture or rigging required.

Learn more about Act-One below.

(1/7) pic.twitter.com/p1Q8lR8K7G

— Runway (@runwayml) October 22, 2024

Google to buy nuclear power for AI datacentres in ‘world first’ deal — from theguardian.com

Tech company orders six or seven small nuclear reactors from California’s Kairos Power

Google has signed a “world first” deal to buy energy from a fleet of mini nuclear reactors to generate the power needed for the rise in use of artificial intelligence.

The US tech corporation has ordered six or seven small nuclear reactors (SMRs) from California’s Kairos Power, with the first due to be completed by 2030 and the remainder by 2035.

ChatGPT Topped 3 Billion Visits in September — from similarweb.com

After the extreme peak and summer slump of 2023, ChatGPT has been setting new traffic highs since May

ChatGPT has been topping its web traffic records for months now, with September 2024 traffic up 112% year-over-year (YoY) to 3.1 billion visits, according to Similarweb estimates. That’s a change from last year, when traffic to the site went through a boom-and-bust cycle.
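As a quick sanity check on those figures, the snippet below backs out the September 2023 traffic implied by a 112% year-over-year increase to 3.1 billion visits. It is only a rough sketch: the function name `implied_prior_value` is made up for illustration, and it assumes the 112% figure is growth measured against September 2023.

```python
def implied_prior_value(current: float, yoy_growth_pct: float) -> float:
    """Return the prior-period value implied by a current value and its YoY growth.

    Assumes growth is defined as (current - prior) / prior * 100.
    """
    return current / (1 + yoy_growth_pct / 100)


# Figures quoted from the Similarweb excerpt above (visits in billions).
sept_2024_visits = 3.1
yoy_growth_pct = 112

sept_2023_visits = implied_prior_value(sept_2024_visits, yoy_growth_pct)
print(f"Implied September 2023 visits: {sept_2023_visits:.2f} billion")
# prints "Implied September 2023 visits: 1.46 billion"
```

In other words, a 112% increase means traffic slightly more than doubled, from roughly 1.46 billion visits to 3.1 billion.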

Crazy “AI Army” — from aisecret.us

Also from aisecret.us, see World’s First Nuclear Power Deal For AI Data Centers

Google has made a historic agreement to buy energy from a group of small nuclear reactors (SMRs) from Kairos Power in California. This is the first nuclear power deal specifically for AI data centers in the world.

New updates to help creators build community, drive business, & express creativity on YouTube — from support.google.com

Hey creators!

Made on YouTube 2024 is here and we’ve announced a lot of updates that aim to give everyone the opportunity to build engaging communities, drive sustainable businesses, and express creativity on our platform.

Below is a roundup with key info – feel free to upvote the announcements that you’re most excited about and subscribe to this post to get updates on these features! We’re looking forward to another year of innovating with our global community. It’s a future full of opportunities, and it’s all Made on YouTube!

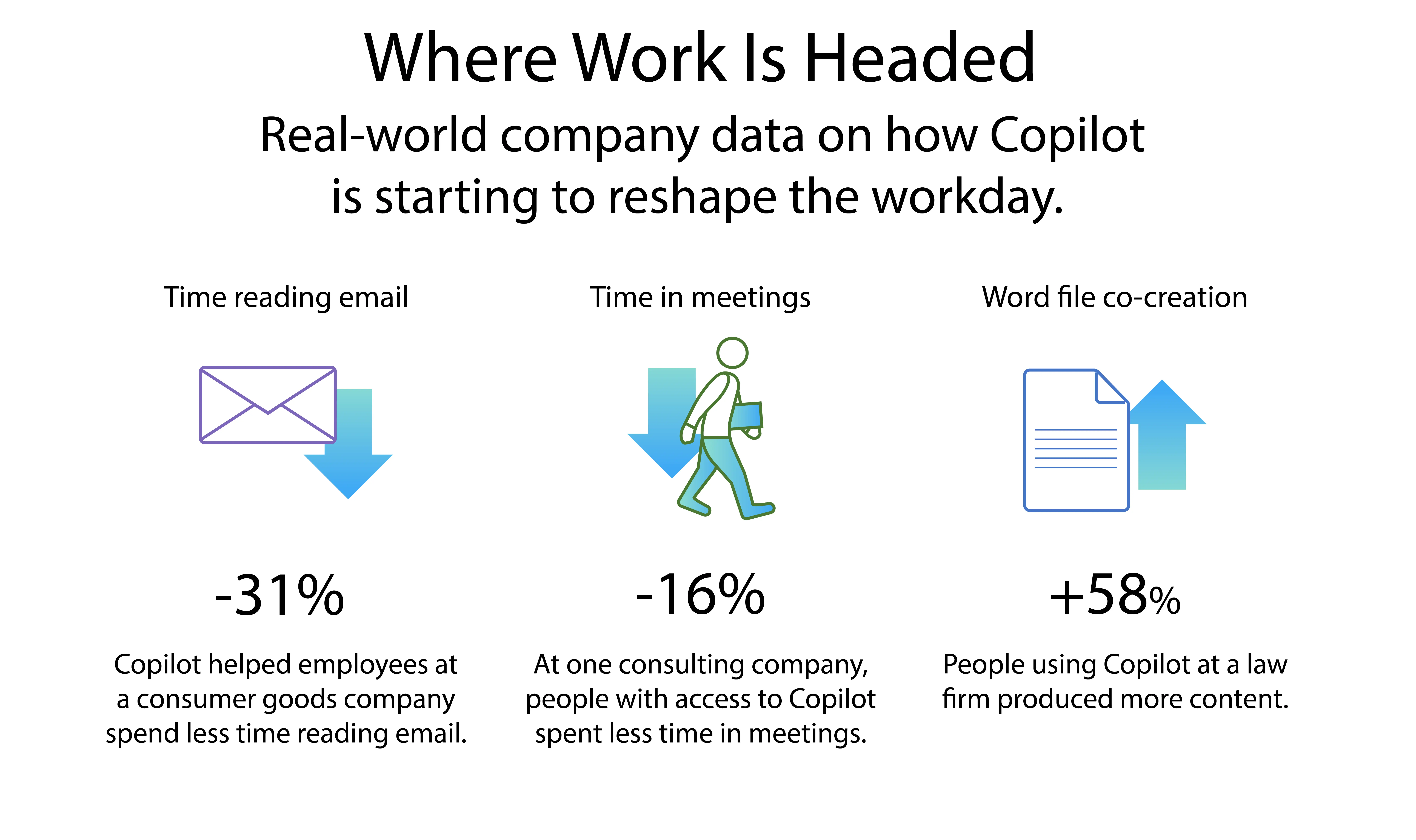

New autonomous agents scale your team like never before — from blogs.microsoft.com

Today, we’re announcing new agentic capabilities that will accelerate these gains and bring AI-first business process to every organization.

- First, the ability to create autonomous agents with Copilot Studio will be in public preview next month.

- Second, we’re introducing ten new autonomous agents in Dynamics 365 to build capacity for every sales, service, finance and supply chain team.

10 Daily AI Use Cases for Business Leaders — from flexos.work by Daan van Rossum

While AI is becoming more powerful by the day, business leaders still wonder why and where to apply it today. I take you through 10 critical use cases where AI should take over your work or partner with you.

Multi-Modal AI: Video Creation Simplified — from heatherbcooper.substack.com by Heather Cooper

Emerging Multi-Modal AI Video Creation Platforms

The rise of multi-modal AI platforms has revolutionized content creation, allowing users to research, write, and generate images in one app. Now, a new wave of platforms is extending these capabilities to video creation and editing.

Multi-modal video platforms combine various AI tools for tasks like writing, transcription, text-to-voice conversion, image-to-video generation, and lip-syncing. These platforms leverage open-source models like FLUX and LivePortrait, along with APIs from services such as ElevenLabs, Luma AI, and Gen-3.

AI Medical Imagery Model Offers Fast, Cost-Efficient Expert Analysis — from developer.nvidia.com