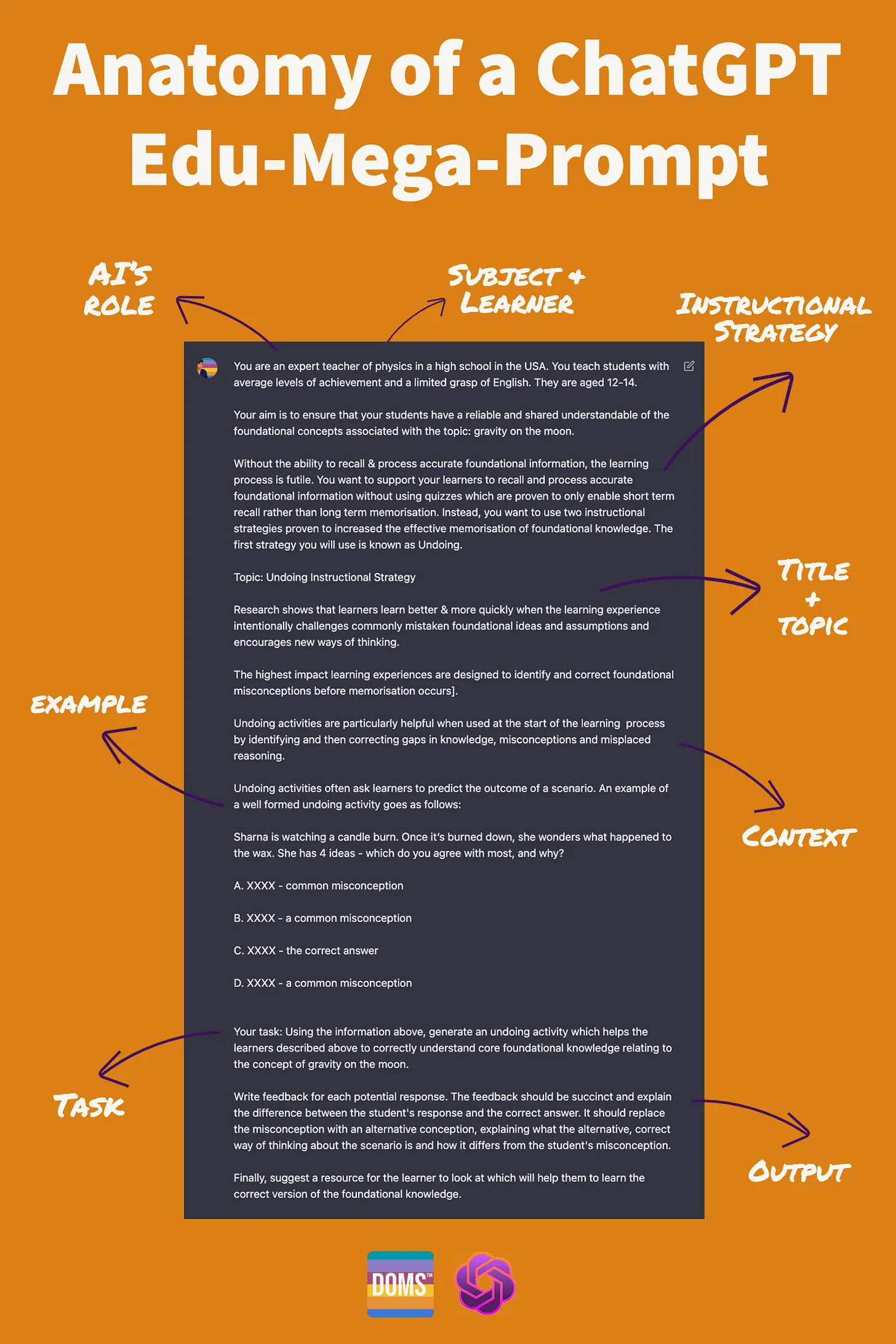

Introducing: ChatGPT Edu-Mega-Prompts — from drphilippahardman.substack.com by Dr. Philippa Hardman; with thanks to Ray Schroeder out on LinkedIn for this resource

How to combine the power of AI + learning science to improve your efficiency & effectiveness as an educator

From DSC:

Before relaying some excerpts, I want to say that I get the gist of what Dr. Hardman is saying re: quizzes. But I'm surprised she had so many pedagogical concerns with them. I, too, would like to see quizzes used as an instrument of learning and to practice recall, not just for assessment. But I would give quizzes a higher thumbs-up than she did. I think she was also trying to say that quizzes don't always identify misconceptions or inaccurate foundational information.

Excerpts:

The Bad News: Most AI technologies that have been built specifically for educators in the last few years and months imitate and threaten to spread the use of broken instructional practices (i.e. content + quiz).

The Good News: Armed with prompts which are carefully crafted to ask the right thing in the right way, educators can use AI like GPT-3 to improve the effectiveness of their instructional practices.

As is always the case, ChatGPT is your assistant. If you’re not happy with the result, you can edit and refine it using your expertise, either alone or through further conversation with ChatGPT.

For example, once the first response is generated, you can ask ChatGPT to make the activity more or less complex, to change the scenario and/or suggest more or different resources – the options are endless.

Philippa recommended checking out Rob Lennon’s streams of content. Here’s an example from his Twitter account:

Everyone’s using ChatGPT.

But almost everyone’s STUCK in beginner mode.

10 techniques to get massively ahead with AI:

(cut-and-paste these prompts)

— Rob Lennon | Audience Growth (@thatroblennon) January 3, 2023

Also relevant/see:

3 Trends That May Unlock AI’s Potential for L&D in 2023 — from learningguild.com by Juan Naranjo

Excerpts:

AI-assisted design and development work

This is the trend most likely to have a dramatic evolution this year.

…

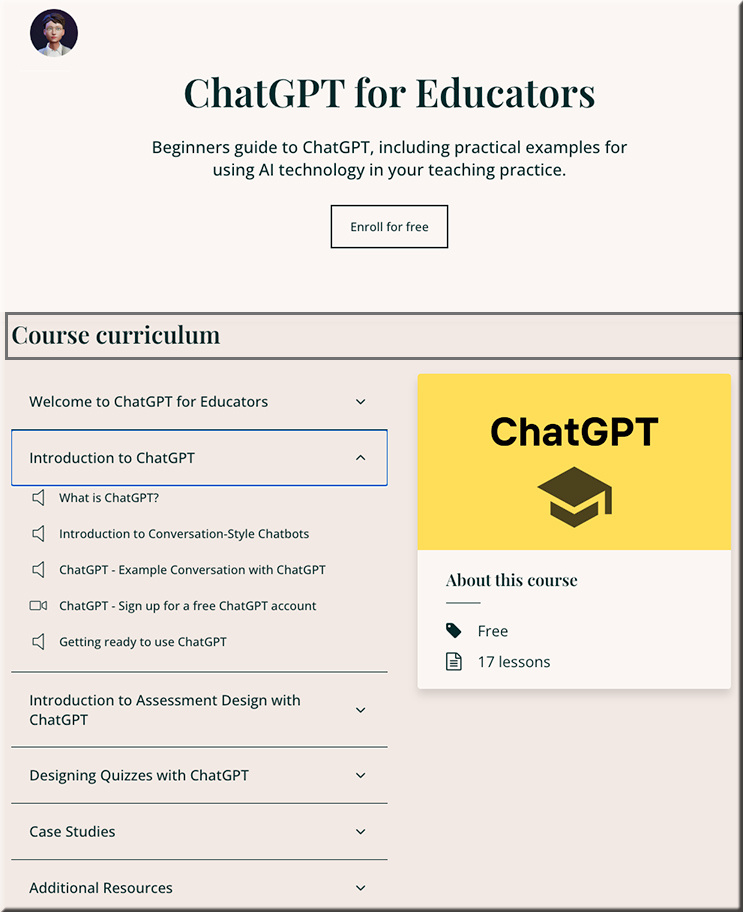

Solutions like large language models, speech generators, content generators, image generators, translation tools, transcription tools, and video generators, among many others, will transform the way IDs create the learning experiences our organizations use. Two examples are:

1. IDs will be doing more curation and less creation:

- Many IDs will start pulling raw material from content generators (built using natural language processing platforms like OpenAI's GPT-3, Microsoft's LUIS, IBM's Watson, Google's BERT, etc.) to obtain ideas and drafts that they can then clean up and add to the assets they are assembling. As technology advances, the output from these platforms will be more suitable to become final drafts, and the curation and clean-up tasks will be faster and easier.

- Then, the designer can leverage a solution like DALL-E 2 (or a product developed based on it) to obtain visuals that can (or not) be modified with programs like Illustrator or Photoshop (see image below for DALL-E's "Cubist interpretation of AI and brain science").

2. IDs will spend less, and in some cases no time at all, creating learning pathways:

AI engines contained in LXPs and other platforms will select the right courses for employees and guide these learners from their current level of knowledge and skill to their goal state with substantially less human intervention.

The Creator of ChatGPT Thinks AI Should Be Regulated — from time.com by John Simons

Excerpts:

Somehow, Mira Murati can forthrightly discuss the dangers of AI while making you feel like it’s all going to be OK.

…

A growing number of leaders in the field are warning of the dangers of AI. Do you have any misgivings about the technology?

This is a unique moment in time where we do have agency in how it shapes society. And it goes both ways: the technology shapes us and we shape it. There are a lot of hard problems to figure out. How do you get the model to do the thing that you want it to do, and how do you make sure it's aligned with human intention and ultimately in service of humanity? There are also a ton of questions around societal impact, and there are a lot of ethical and philosophical questions that we need to consider. And it's important that we bring in different voices, like philosophers, social scientists, artists, and people from the humanities.

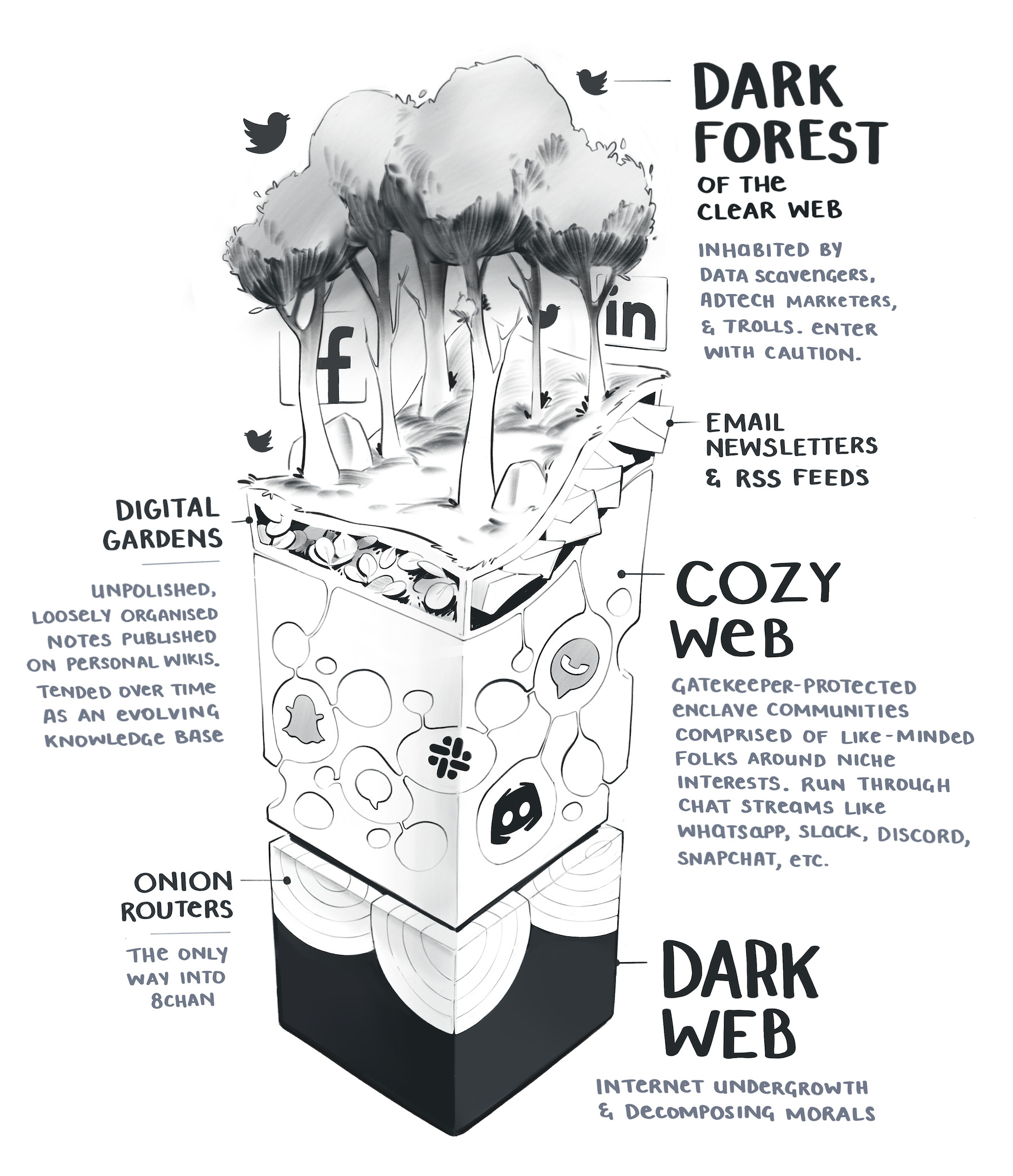

Whispers of A.I.’s Modular Future — from newyorker.com by James Somers; via Sam DeBrule

Excerpts:

Gerganov adapted it from a program called Whisper, released in September by OpenAI, the same organization behind ChatGPT and DALL-E. Whisper transcribes speech in more than ninety languages. In some of them, the software is capable of superhuman performance; that is, it can actually parse what somebody's saying better than a human can.

…

Until recently, world-beating A.I.s like Whisper were the exclusive province of the big tech firms that developed them.

Ever since I’ve had tape to type up—lectures to transcribe, interviews to write down—I’ve dreamed of a program that would do it for me. The transcription process took so long, requiring so many small rewindings, that my hands and back would cramp. As a journalist, knowing what awaited me probably warped my reporting: instead of meeting someone in person with a tape recorder, it often seemed easier just to talk on the phone, typing up the good parts in the moment.

Journalism majors — and even seasoned journalists — should keep an eye on this type of application, as it will save them a significant amount of time and/or money.

Microsoft Teams Premium: Cut costs and add AI-powered productivity — from microsoft.com by Nicole Herskowitz

Excerpt:

Built on the familiar, all-in-one collaborative experience of Microsoft Teams, Teams Premium brings the latest technologies, including Large Language Models powered by OpenAI’s GPT-3.5, to make meetings more intelligent, personalized, and protected—whether it’s one-on-one, large meetings, virtual appointments, or webinars.