6 basic YouTube tips everyone should know, from hongkiat.com by Kelvon Yeezy

Example tips:

1. Share a video starting at a specific point. (A brief insert from DSC: This is especially helpful to teachers, trainers, and professors.)

If you want to share a YouTube video so that it starts playing from a certain point, you can do so in a couple of simple steps.

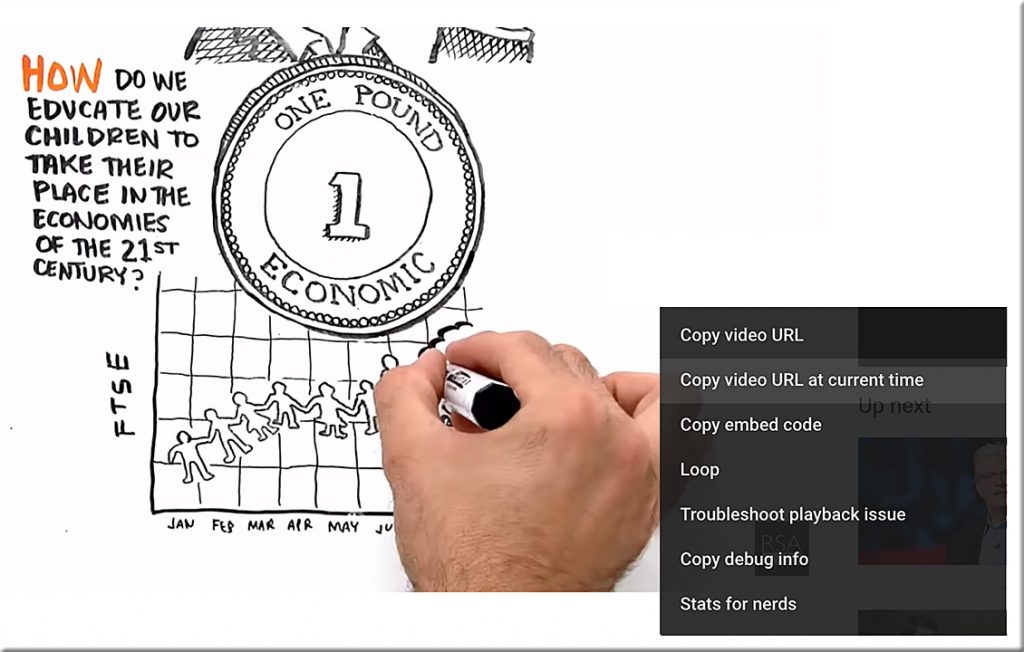

Pause the video at the point where you want the other viewer to start watching, then right-click on the video. In the menu that appears, choose "Copy video URL at current time." The copied link will open the video starting at that exact moment.
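If you would rather build such a link by hand, for example in a course page or handout, the following Python sketch shows one way. It assumes the short `youtu.be` link format with a `t` query parameter given in seconds, which is how YouTube's timestamped share links are commonly written. `VIDEO_ID` is a placeholder, and the helper names `to_seconds` and `timestamped_link` are hypothetical.

```python
from urllib.parse import urlencode

def to_seconds(timestamp: str) -> int:
    """Convert 'SS', 'MM:SS', or 'HH:MM:SS' into a total number of seconds."""
    seconds = 0
    for part in timestamp.split(":"):
        # Each colon-separated field is worth 60 of the next smaller unit.
        seconds = seconds * 60 + int(part)
    return seconds

def timestamped_link(video_id: str, timestamp: str) -> str:
    """Build a youtu.be link that (assumption) starts playback at the given time."""
    return f"https://youtu.be/{video_id}?" + urlencode({"t": to_seconds(timestamp)})

# VIDEO_ID is a placeholder; substitute the ID from the video's own URL.
print(timestamped_link("VIDEO_ID", "1:35"))  # https://youtu.be/VIDEO_ID?t=95
```

Converting the timestamp to plain seconds keeps the link format simple; the right-click menu described above remains the quickest route when you are already watching the video.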

4. More accurate video search

There are millions of videos on YouTube, so finding the specific video you want to watch can be an adventure in itself. In this quest, you might find yourself crawling through dozens of pages of results hoping to find the one you actually want.

If you don’t want to go through all this hassle, simply add allintitle: before the keywords you are searching for. This limits the results to videos whose titles include all of the chosen keywords.
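The same search can be saved as a link, which is handy for sharing a pre-filtered list of results. This Python sketch assumes YouTube's results page at `https://www.youtube.com/results` reads the query from a `search_query` parameter; it simply encodes what you would otherwise type into the search box, and whether the `allintitle:` operator is honored is up to YouTube. The helper name `allintitle_search_url` is hypothetical.

```python
from urllib.parse import urlencode

def allintitle_search_url(keywords: str) -> str:
    """Build a YouTube results URL whose query is prefixed with allintitle:."""
    query = "allintitle: " + keywords.strip()
    # urlencode percent-encodes the colon (%3A) and turns spaces into '+'.
    return "https://www.youtube.com/results?" + urlencode({"search_query": query})

print(allintitle_search_url("python tutorial"))
# https://www.youtube.com/results?search_query=allintitle%3A+python+tutorial
```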