Law Firms Are Recruiting More AI Experts as Clients Demand ‘More for Less’ — from bloomberg.com by Irina Anghel

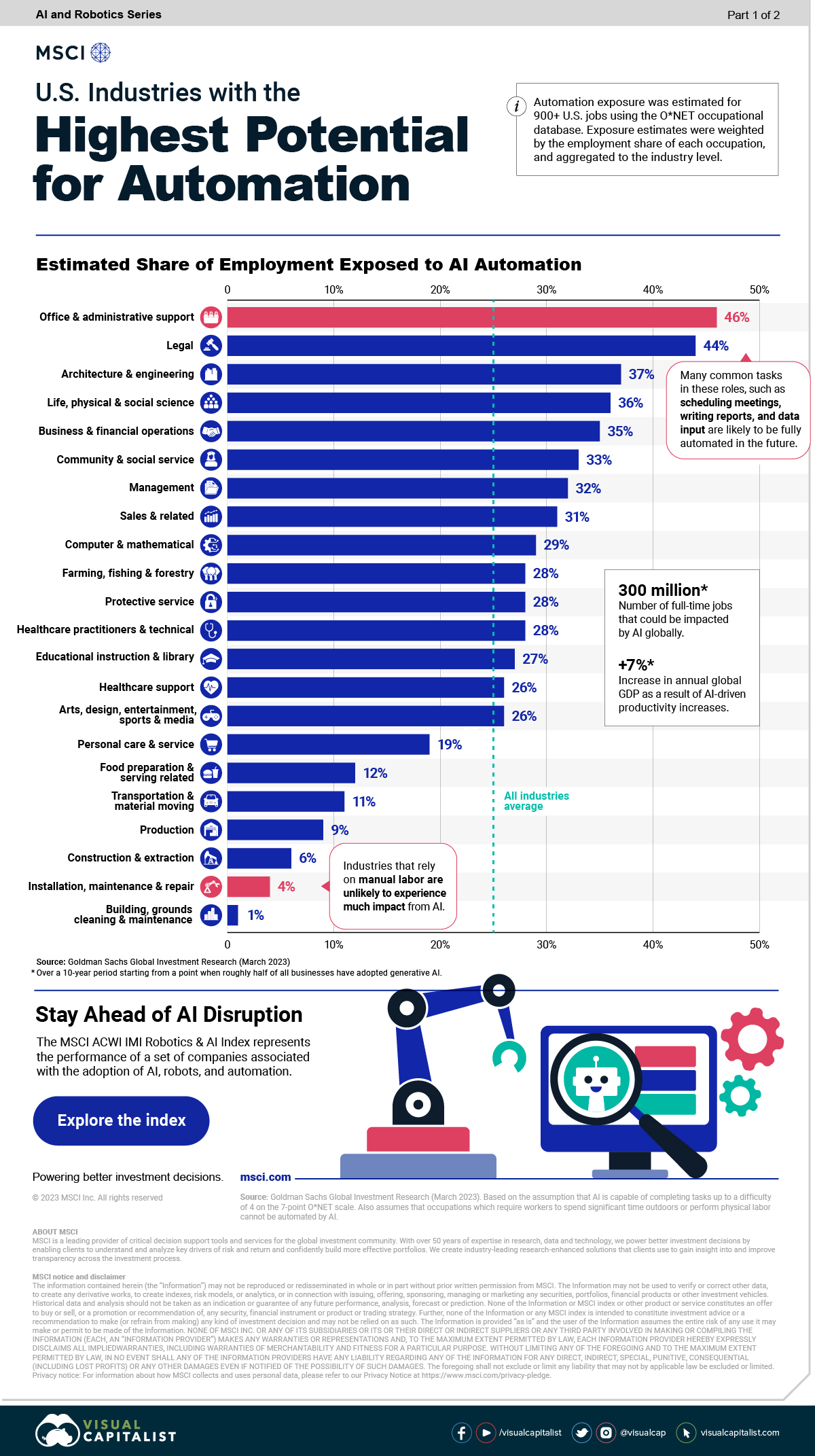

Data scientists, software engineers among roles being sought | Legal services seen as vulnerable to ChatGPT-type software

Excerpt (emphasis DSC):

Chatbots, data scientists, software engineers. As clients demand more for less, law firms are hiring growing numbers of staff who’ve studied technology not tort law to try and stand out from their rivals.

Law firms are advertising for experts in artificial intelligence “more than ever before,” says Chris Tart-Roberts, head of the legal technology practice at Macfarlanes, describing a trend he says began about six months ago.

AI Will Threaten Law Firm Jobs, But Innovators Will Thrive — from law.com

Excerpts:

What You Need to Know

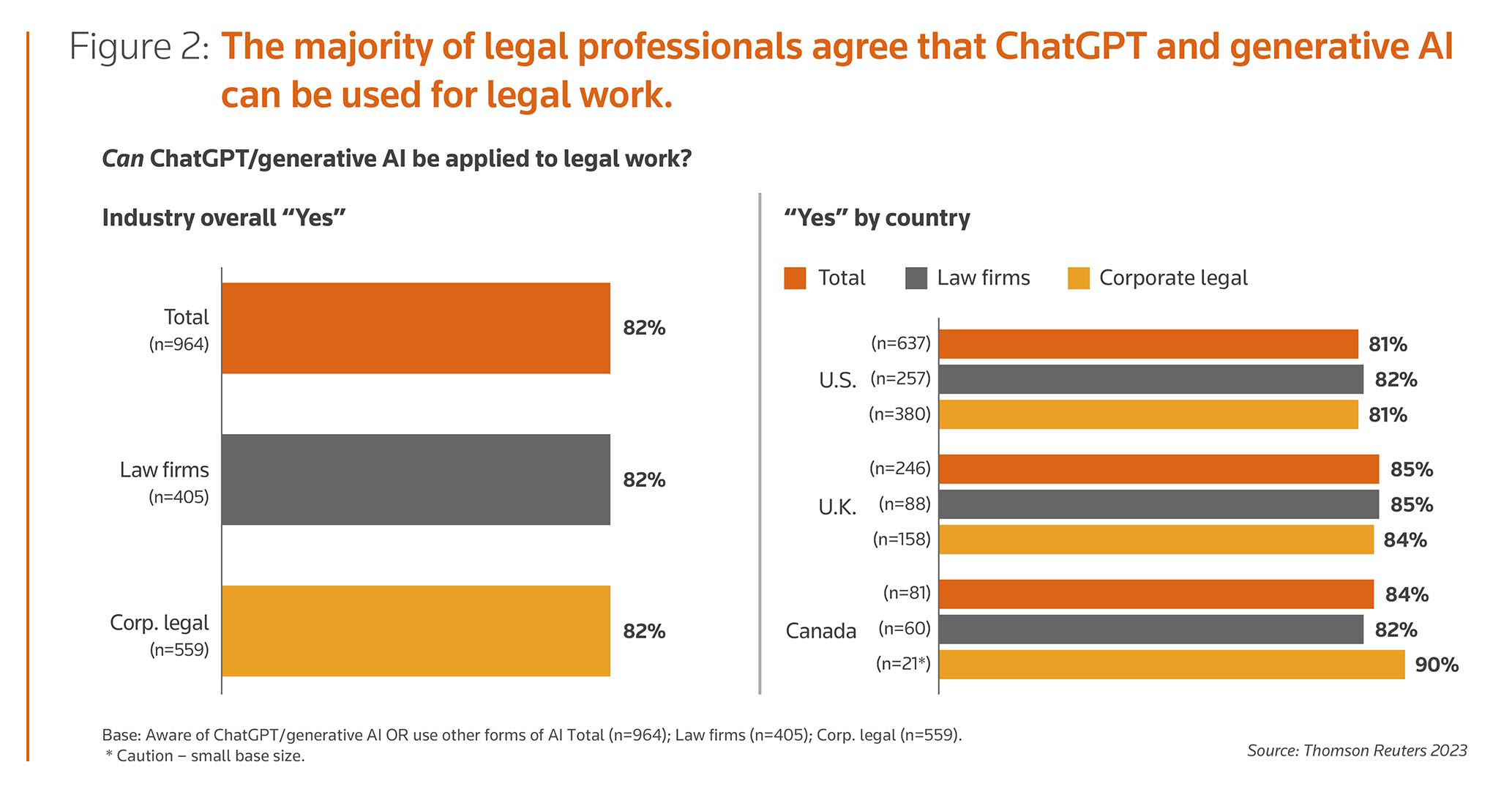

- Law firm leaders and consultants are unsure of how AI use will ultimately impact the legal workforce.

- Consultants are advising law firms and attorneys alike to adapt to the use of generative AI, viewing this as an opportunity for attorneys to learn new skills and law firms to take a look at their business models.

Split between foreseeing job cuts and opportunities to introduce new skills and additional efficiencies into the office, firm leaders and consultants remain uncertain about the impact of artificial intelligence on the legal workforce.

However, one thing is certain: law firms and attorneys need to adapt and learn how to integrate this new technology in their business models, according to consultants.

AI Lawyer — A personal AI lawyer at your fingertips — from ailawyer.pro

From DSC:

I hope that we will see a lot more of this kind of thing!

I’m counting on it.

Revolutionize Your Legal Education with Law School AI — from law-school-ai.vercel.app

Your Ultimate Study Partner

Are you overwhelmed by countless cases, complex legal concepts, and endless readings? Law School AI is here to help. Our cutting-edge AI chatbot is designed to provide law students with an accessible, efficient, and engaging way to learn the law. Our chatbot simplifies complex legal topics, delivers personalized study guidance, and answers your questions in real-time – making your law school journey a whole lot easier.

Job title of the future: metaverse lawyer — from technologyreview.com by Amanda Smith

Madaline Zannes’s virtual offices come with breakout rooms, an art gallery… and a bar.

Excerpt:

Lot #651 on Somnium Space belongs to Zannes Law, a Toronto-based law firm. In this seven-level metaverse office, principal lawyer Madaline Zannes conducts private consultations with clients, meets people wandering in with legal questions, hosts conferences, and gives guest lectures. Zannes says that her metaverse office allows for a more immersive, imaginative client experience. She hired a custom metaverse builder to create the space from scratch—with breakout rooms, presentation stages, offices to rent, an art gallery, and a rooftop bar.

A Literal Generative AI Discussion: How AI Could Reshape Law — from geeklawblog.com by Greg Lambert

Excerpt:

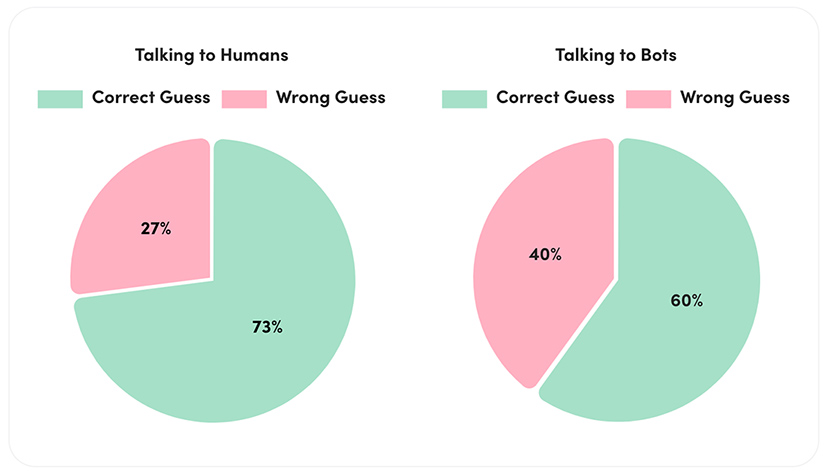

Greg spoke with an AI guest named Justis for this episode. Justis, powered by OpenAI’s GPT-4, was able to have a natural conversation with Greg and provide insightful perspectives on the use of generative AI in the legal industry, specifically in law firms.

In the first part of their discussion, Justis gave an overview of the legal industry’s interest in and uncertainty around adopting generative AI. While many law firm leaders recognize its potential, some are unsure of how it fits into legal work or worry about risks. Justis pointed to examples of firms exploring AI and said letting lawyers experiment with the tools could help identify use cases.

Robots aren’t representing us in court but here are 7 legal tech startups transforming the legal system — from tech.eu by Cate Lawrence

Legal tech startups are stepping up to the bar, using tech such as AI, teleoperations, and apps to bring justice to more people than ever before. This increases efficiency, reduces delays, and lowers costs, expanding legal access.

Putting Humans First: Solving Real-Life Problems With Legal Innovation — from abovethelaw.com by Olga Mack

Placing the end-user at the heart of the process allows innovators to identify pain points and create solutions that directly address the unique needs and challenges individuals and businesses face.