AI RESOURCES AND TEACHING (Kent State University) — from aiadvisoryboards.wordpress.com

The AI Resources and Teaching page at Kent State University offers valuable resources for educators interested in incorporating artificial intelligence (AI) into their teaching practices. The university recognizes that the rapid emergence of AI tools presents both challenges and opportunities in higher education.

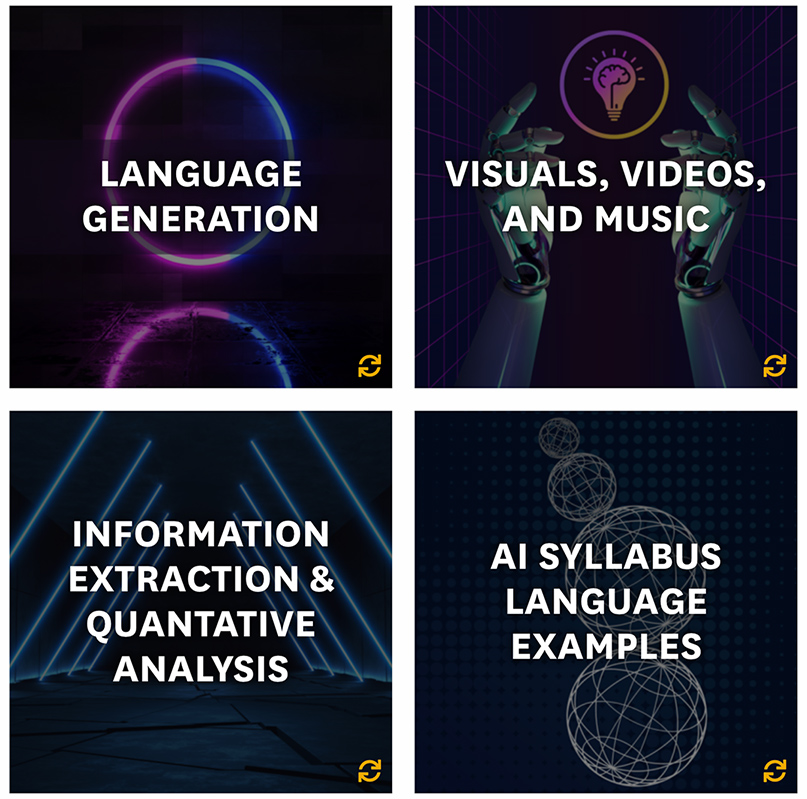

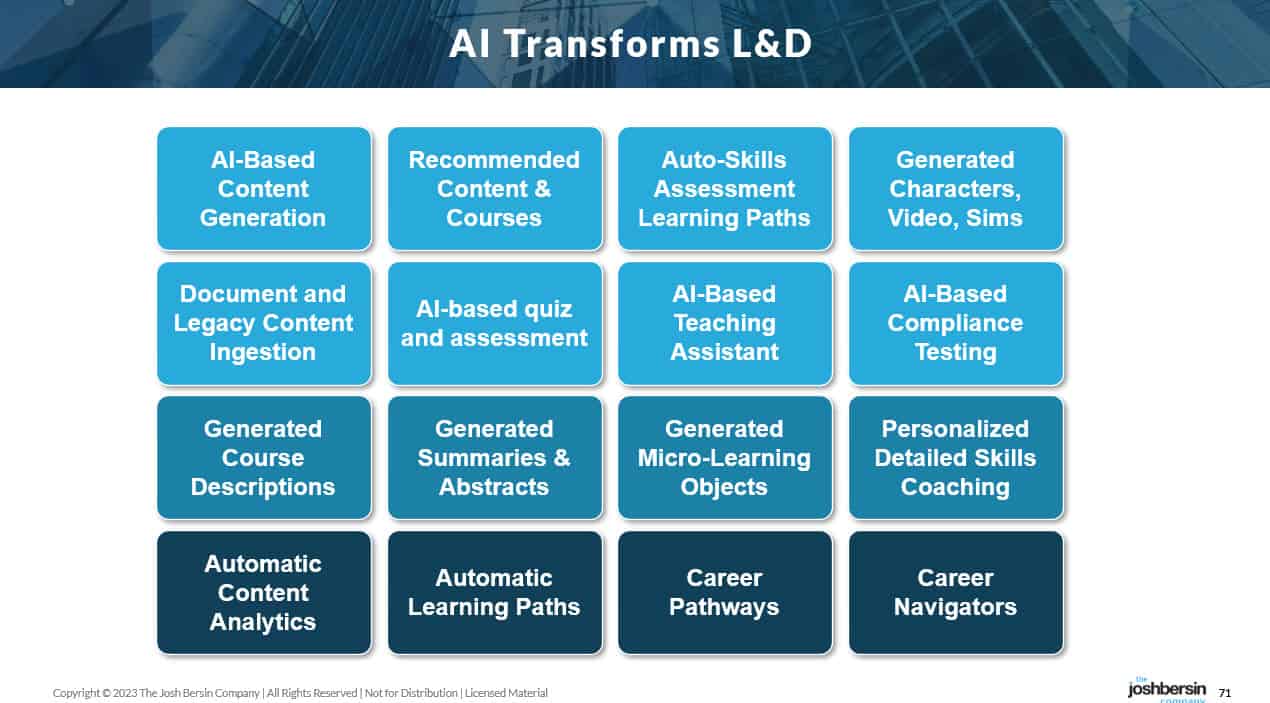

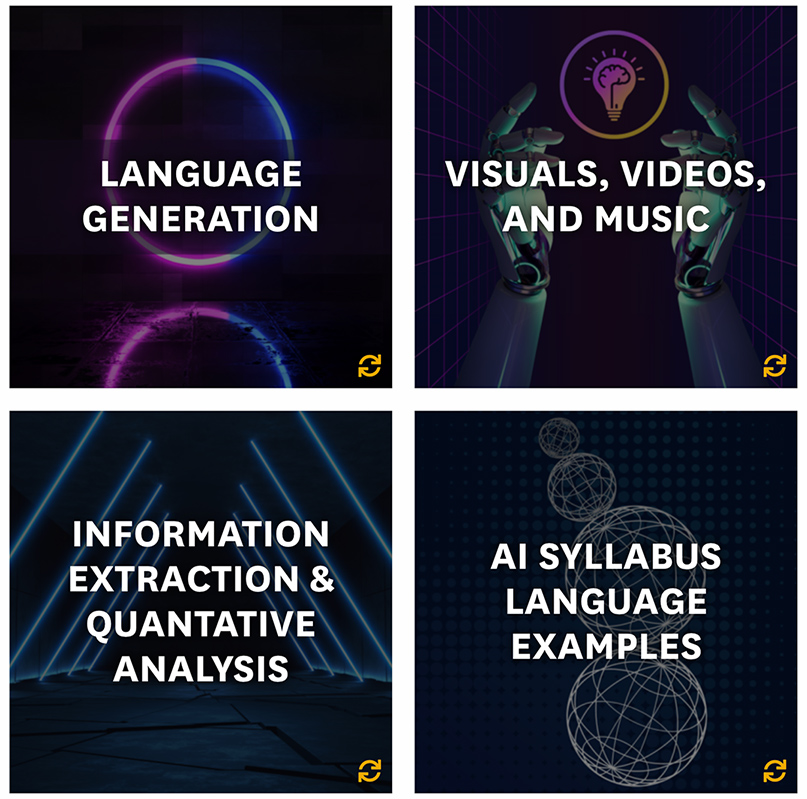

The AI Resources and Teaching page provides educators with information and guidance on various AI tools and their responsible use within and beyond the classroom. The page covers different areas of AI application, including language generation, visuals, videos, music, information extraction, quantitative analysis, and AI syllabus language examples.

A Cautionary AI Tale: Why IBM’s Dazzling Watson Supercomputer Made a Lousy Tutor — from the74million.org by Greg Toppo

With a new race underway to create the next teaching chatbot, IBM’s abandoned 5-year, $100M ed push offers lessons about AI’s promise and its limits.

For all its jaw-dropping power, Watson the computer overlord was a weak teacher. It couldn’t engage or motivate kids, inspire them to reach new heights or even keep them focused on the material — all qualities of the best mentors.

It’s a finding with some resonance to our current moment of AI-inspired doomscrolling about the future of humanity in a world of ascendant machines. “There are some things AI is actually very good for,” Nitta said, “but it’s not great as a replacement for humans.”

His five-year journey to essentially a dead-end could also prove instructive as ChatGPT and other programs like it fuel a renewed, multimillion-dollar experiment to, in essence, prove him wrong.

…

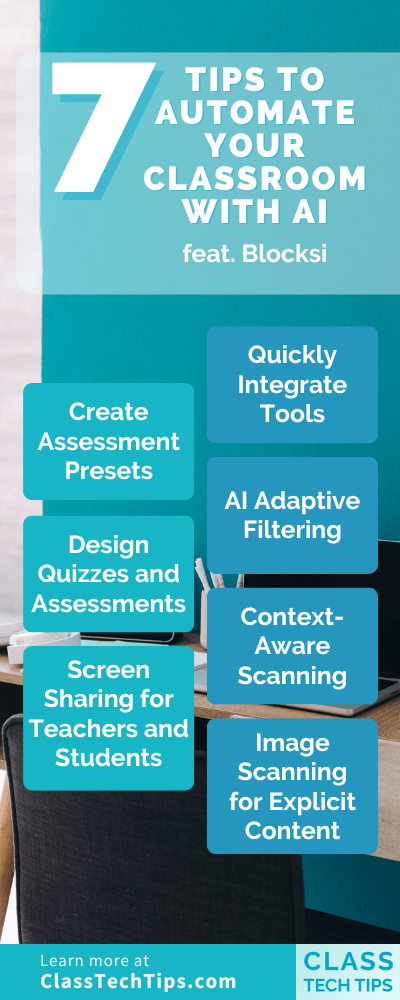

To be sure, AI can do sophisticated things such as generating quizzes from a class reading and editing student writing. But the idea that a machine or a chatbot can actually teach as a human can, he said, represents “a profound misunderstanding of what AI is actually capable of.”

Nitta, who still holds deep respect for the Watson lab, admits, “We missed something important. At the heart of education, at the heart of any learning, is engagement. And that’s kind of the Holy Grail.”

From DSC:

This is why the vision that I’ve been tracking and working on has always said that HUMAN BEINGS will be necessary — they are key to realizing this vision. Along these lines, here’s a relevant quote:

Another crucial component of a new learning theory for the age of AI would be the cultivation of “blended intelligence.” This concept recognizes that the future of learning and work will involve the seamless integration of human and machine capabilities, and that learners must develop the skills and strategies needed to effectively collaborate with AI systems. Rather than viewing AI as a threat to human intelligence, a blended intelligence approach seeks to harness the complementary strengths of humans and machines, creating a symbiotic relationship that enhances the potential of both.

Per Alexander “Sasha” Sidorkin, Head of the National Institute on AI in Society at California State University, Sacramento.
