When A.I.’s Output Is a Threat to A.I. Itself — from nytimes.com by Aatish Bhatia

As A.I.-generated data becomes harder to detect, it’s increasingly likely to be ingested by future A.I., leading to worse results.

All this A.I.-generated information can make it harder for us to know what’s real. And it also poses a problem for A.I. companies. As they trawl the web for new data to train their next models on — an increasingly challenging task — they’re likely to ingest some of their own A.I.-generated content, creating an unintentional feedback loop in which what was once the output from one A.I. becomes the input for another.

In the long run, this cycle may pose a threat to A.I. itself. Research has shown that when generative A.I. is trained on a lot of its own output, it can get a lot worse.
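
The feedback loop described above can be illustrated with a toy simulation. This is only a sketch under strong assumptions, not the method used in the research the article cites: the "model" here is a plain Gaussian fit, and the function name `simulate_feedback_loop`, the sample size, and the generation count are all made up for illustration. Each generation is fit only to a small sample drawn from the previous generation, and the fitted spread tends to shrink as the loop repeats.

```python
import random
import statistics


def simulate_feedback_loop(generations=200, sample_size=10, seed=0):
    """Refit a Gaussian to its own samples and track the fitted spread.

    Hypothetical toy model: every parameter here is an illustrative assumption.
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0 stands in for the "real" data
    spreads = [std]
    for _ in range(generations):
        # The current model produces a small synthetic dataset...
        samples = [rng.gauss(mean, std) for _ in range(sample_size)]
        # ...and the next model is fit only to that synthetic data.
        mean = statistics.fmean(samples)
        std = statistics.pstdev(samples)
        spreads.append(std)
    return spreads


if __name__ == "__main__":
    spreads = simulate_feedback_loop()
    for generation in (0, 10, 50, 100, 200):
        print(f"generation {generation:3d}: fitted std = {spreads[generation]:.3g}")
```

In this toy setup the fitted standard deviation drifts toward zero, meaning later generations reproduce less and less of the variety in the original data. Real generative models are far more complex, so treat this as intuition only.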

Per The Rundown AI:

The Rundown: Elon Musk’s xAI just launched “Colossus”, the world’s most powerful AI cluster powered by a whopping 100,000 Nvidia H100 GPUs, which was built in just 122 days and is planned to double in size soon.

…

Why it matters: xAI’s Grok 2 recently caught up to OpenAI’s GPT-4 in record time, and was trained on only around 15,000 GPUs. With more than six times that amount now in production, the xAI team and future versions of Grok are going to put a significant amount of pressure on OpenAI, Google, and others to deliver.

Google Meet’s automatic AI note-taking is here — from theverge.com by Joanna Nelius

Starting [on 8/28/24], some Google Workspace customers can have Google Meet be their personal note-taker.

Google Meet’s newest AI-powered feature, “take notes for me,” has started rolling out today to Google Workspace customers with the Gemini Enterprise, Gemini Education Premium, or AI Meetings & Messaging add-ons. It’s similar to Meet’s transcription tool, only instead of automatically transcribing what everyone says, it summarizes what everyone talked about. Google first announced this feature at its 2023 Cloud Next conference.

The World’s Call Center Capital Is Gripped by AI Fever — and Fear — from bloomberg.com by Saritha Rai [behind a paywall]

The experiences of staff in the Philippines’ outsourcing industry are a preview of the challenges and choices coming soon to white-collar workers around the globe.

[Claude] Artifacts are now generally available — from anthropic.com

[On 8/27/24], we’re making Artifacts available for all Claude.ai users across our Free, Pro, and Team plans. And now, you can create and view Artifacts on our iOS and Android apps.

Artifacts turn conversations with Claude into a more creative and collaborative experience. With Artifacts, you have a dedicated window to instantly see, iterate, and build on the work you create with Claude. Since launching as a feature preview in June, users have created tens of millions of Artifacts.

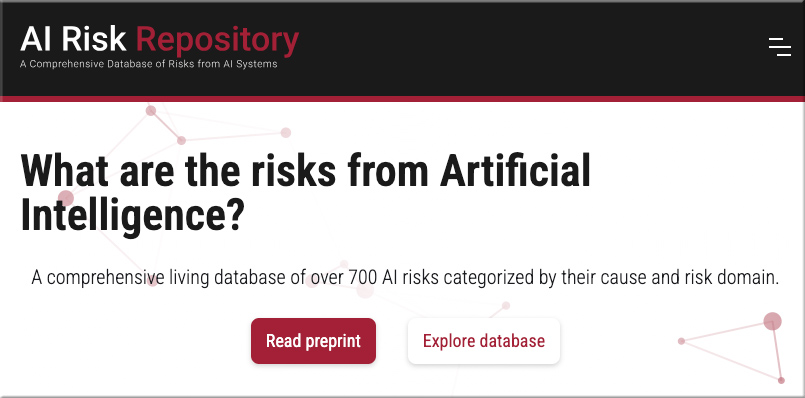

What are the risks from Artificial Intelligence?

A comprehensive living database of over 700 AI risks categorized by their cause and risk domain.

What is the AI Risk Repository?

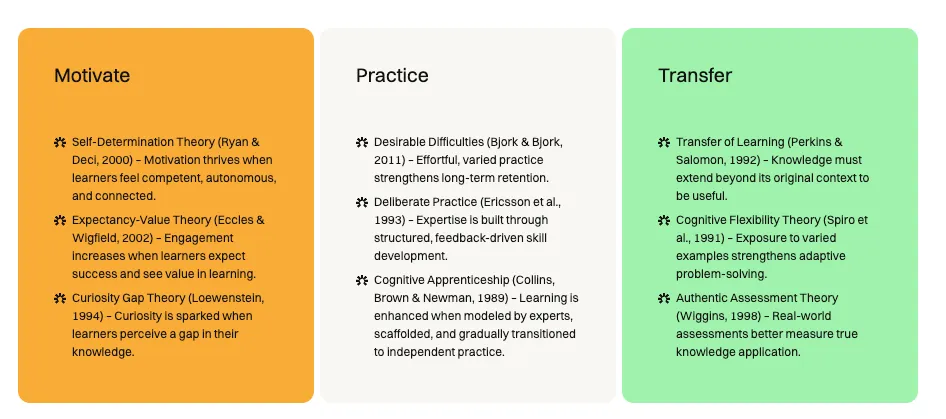

The AI Risk Repository has three parts:

- The AI Risk Database captures 700+ risks extracted from 43 existing frameworks, with quotes and page numbers.

- The Causal Taxonomy of AI Risks classifies how, when, and why these risks occur.

- The Domain Taxonomy of AI Risks classifies these risks into seven domains (e.g., “Misinformation”) and 23 subdomains (e.g., “False or misleading information”).

California lawmakers approve legislation to ban deepfakes, protect workers and regulate AI — from newsday.com by The Associated Press

SACRAMENTO, Calif. — California lawmakers approved a host of proposals this week aiming to regulate the artificial intelligence industry, combat deepfakes and protect workers from exploitation by the rapidly evolving technology.

Per Oncely:

The Details:

- Combatting Deepfakes: New laws to restrict election-related deepfakes and deepfake pornography, especially of minors, requiring social media to remove such content promptly.

- Setting Safety Guardrails: California is poised to set comprehensive safety standards for AI, including transparency in AI model training and pre-emptive safety protocols.

- Protecting Workers: Legislation to prevent the replacement of workers, like voice actors and call center employees, with AI technologies.

New in Gemini: Custom Gems and improved image generation with Imagen 3 — from blog.google

The ability to create custom Gems is coming to Gemini Advanced subscribers, and updated image generation capabilities with our latest Imagen 3 model are coming to everyone.

We have new features rolling out [starting on 8/28/24] that we previewed at Google I/O. Gems, a new feature that lets you customize Gemini to create your own personal AI experts on any topic you want, are now available for Gemini Advanced, Business and Enterprise users. And our new image generation model, Imagen 3, will be rolling out across Gemini, Gemini Advanced, Business and Enterprise in the coming days.

Cut the Chatter, Here Comes Agentic AI — from trendmicro.com

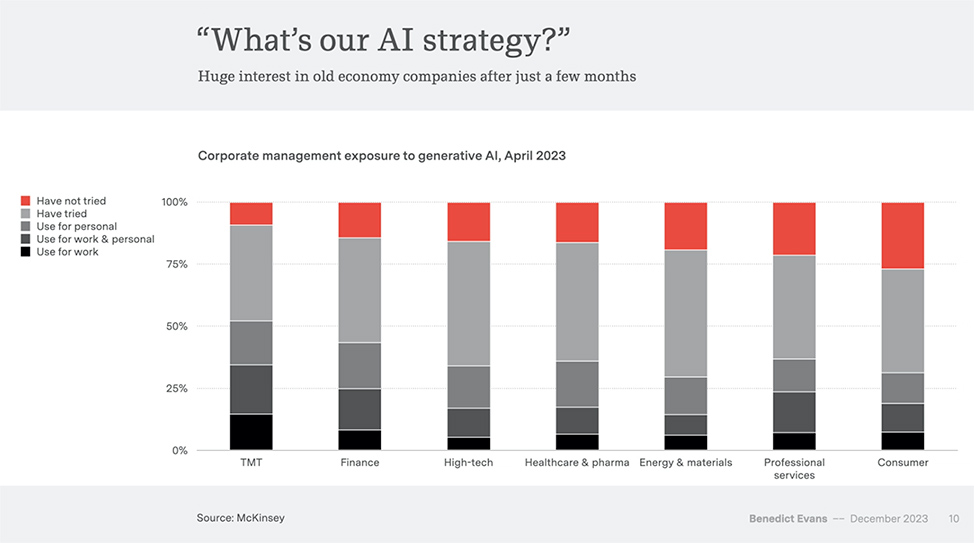

Major AI players caught heat in August over big bills and weak returns on AI investments, but it would be premature to think AI has failed to deliver. The real question is what’s next, and if industry buzz and pop-sci pontification hold any clues, the answer isn’t “more chatbots”, it’s agentic AI.

Agentic AI transforms the user experience from application-oriented information synthesis to goal-oriented problem solving. It’s what people have always thought AI would do—and while it’s not here yet, its horizon is getting closer every day.

In this issue of AI Pulse, we take a deep dive into agentic AI, what’s required to make it a reality, and how to prevent ‘self-thinking’ AI agents from potentially going rogue.

…

Citing AWS guidance, ZDNET counts six different potential types of AI agents:

- Simple reflex agents for tasks like resetting passwords

- Model-based reflex agents for pro vs. con decision making

- Goal-/rule-based agents that compare options and select the most efficient pathways

- Utility-based agents that compare for value

- Learning agents

- Hierarchical agents that manage and assign subtasks to other agents
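
To make three of the agent types listed above more concrete, here is a minimal sketch. It is not taken from AWS, ZDNET, or Trend Micro: every function name, rule, and score below is a hypothetical stand-in, and real agents would wrap models and tools rather than dictionaries.

```python
from typing import Callable, Dict, List


def simple_reflex_agent(percept: str, rules: Dict[str, str]) -> str:
    """Map the current percept straight to an action using a fixed rule table."""
    return rules.get(percept, "escalate_to_human")


def utility_based_agent(options: List[str], utility: Callable[[str], float]) -> str:
    """Score every option and pick the one with the highest utility."""
    return max(options, key=utility)


def hierarchical_agent(task: str, workers: Dict[str, Callable[[str], str]]) -> List[str]:
    """Split a task into 'worker: payload' subtasks and delegate each one."""
    results = []
    for subtask in task.split(";"):
        name, _, payload = subtask.strip().partition(":")
        results.append(workers[name](payload.strip()))
    return results


if __name__ == "__main__":
    # Hypothetical rule table and utility scores, for illustration only.
    rules = {"forgot_password": "send_reset_link"}
    scores = {"ship_today": 0.4, "ship_next_week": 0.9}
    workers = {
        "reset": lambda payload: simple_reflex_agent(payload, rules),
        "choose": lambda payload: utility_based_agent(payload.split("|"), scores.get),
    }
    print(hierarchical_agent(
        "reset: forgot_password; choose: ship_today|ship_next_week", workers
    ))
```

The simple reflex agent reacts to a single input with no memory, the utility-based agent compares options by value, and the hierarchical agent only coordinates, leaving the actual work to the agents it delegates to.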

Ask Claude: Amazon turns to Anthropic’s AI for Alexa revamp — from reuters.com by Greg Bensinger

Summary:

- Amazon developing new version of Alexa with generative AI

- Retailer hopes to generate revenue by charging for its use

- Concerns about in-house AI prompt Amazon to turn to Anthropic’s Claude, sources say

- Amazon says it uses many different technologies to power Alexa

Alibaba releases new AI model Qwen2-VL that can analyze videos more than 20 minutes long — from venturebeat.com by Carl Franzen

Hobbyists discover how to insert custom fonts into AI-generated images — from arstechnica.com by Benj Edwards

Like adding custom art styles or characters, in-world typefaces come to Flux.

200 million people use ChatGPT every week – up from 100 million last fall, says OpenAI — from zdnet.com by Sabrina Ortiz

Nearly two years after launching, ChatGPT continues to draw new users. Here’s why.