Student loan debt: Averages and other statistics in 2023 — from usatoday.com by Rebecca Safier and Ashley Harrison; via GSV

Excerpt:

The cost of college has more than doubled over the past four decades — and student loan borrowing has risen along with it. The student loan debt balance in the U.S. has increased by 66% over the past decade, and it now totals more than $1.77 trillion, according to the Federal Reserve.

Here’s a closer look at student loan debt statistics in the U.S. today, broken down by age, race, gender and other demographics.

In the 2020-2021 academic year, 54% of bachelor’s degree students who attended public and private four-year schools graduated with student loans, according to the College Board. These students left school with an average balance of $29,100 in education debt.

From DSC:

With significant monthly payments to make, many graduates HAVE TO HAVE good jobs that pay decent salaries. This is an undercurrent flowing through the higher ed learning ecosystem, with ramifications for what students, families, and guardians expect from their investments.

‘Pracademics,’ professors who work outside the academy, win new respect — from washingtonpost.com by Jon Marcus

What’s in a word? A way to help impatient college students better connect to jobs.

Excerpts (emphasis DSC):

Among its approaches, the university focuses on having students learn from people like Taylor, who work or have worked in the fields about which they teach. Sheffield Hallam even has a catchy word to describe these practical academics: “pracademics.”

…

American universities have pracademics, too, of course. They’re among the more than 710,000 part-time and non-tenure-track faculty members who now make up some 61 percent of all faculty, according to the American Association of University Professors. Other adjectives for them include “adjunct,” “casual,” “contingent,” “external” and “occasional.”

From DSC:

For several years now I’ve thought that adjuncts are the best bet for our current traditional institutions of higher education to remain relevant, have healthier enrollments (i.e., sales), and offer the better ROI that students are looking for. Why? Because adjuncts bring current, real-world expertise to the classroom.

But the problem here is that many of these same institutions have treated adjunct faculty members poorly. Adjunct faculty members are viewed as second-class citizens at many colleges and universities, even though they provide the lion’s share of the teaching, grading, and assessing of students’ work. They don’t get benefits, they are paid far less than tenured faculty members, and they often don’t know whether they will actually be teaching a course. Chances are they don’t get to vote or have a say in faculty senates and similar bodies. They are often without power…without a voice.

I’m not sure many adjunct faculty members in the U.S. will stay with these institutions if better alternatives come along.

Colgate Adds Trade School to Higher Education Employee Benefit — from colgate.edu by Daniel DeVries; via Brandon Busteed on LinkedIn

Excerpt (emphasis DSC):

One of Colgate University’s most important employee benefits has been expanded to support employee children as they seek trade or vocational education.

Colgate, like many leading universities, offers financial support for employee children who attend an accredited college or university in pursuit of an undergraduate degree. Now, at the University, this benefit has been expanded to include employee children who enroll in trade or vocational schools.

Coursera’s degree and certificate offerings help drive Q2 revenue growth — from highereddive.com by Natalie Schwartz

The MOOC platform’s CEO touted the company’s strategy of allowing students to stack short-term credentials into longer offerings.

Dive Brief:

- Coursera’s revenue increased to $153.7 million in the second quarter of 2023, up 23% compared to the same period last year, according to the company’s latest financial results.

- The increases were partly driven by strong demand for the MOOC platform’s entry-level professional certificates and rising enrollment in its degree programs.

- During a call with analysts Thursday, Coursera CEO Jeff Maggioncalda attributed some of that enrollment growth to new offerings, which include a cybersecurity analyst certificate from Microsoft and artificial intelligence degree programs from universities in India and Colombia.

Are ‘quick wins’ possible in assessment and feedback? Yes, and here’s how — from timeshighereducation.com by Beverley Hawkins, Eleanor Hodgson, Oli Young

It takes coordination, communication, and credibility to implement quick improvements in assessment and feedback, as a team from the University of Exeter explain

One way to establish this is to form an “assessment and feedback expert group”. Bringing together assessment expertise from educators and academic development specialists, and student participants across the institution establishes a community of practice beyond those in formal leadership roles, who can share their experience and bring opportunities for improvement back into their local networks.

Focusing the group on “quick wins” can encourage discussion to address specific tips and tricks that educators can use without changing their assessment briefs and without significant preparation.

Also re: providing feedback, see:

Five common misconceptions on writing feedback — from timeshighereducation.com by Rolf Norgaard, Stephanie Foster

Misapprehensions about responding to and grading writing can prevent educators using writing as an effective pedagogical tool. Rolf Norgaard and Stephanie Foster set out to dispel them

Writing is essential for developing higher-order skills such as critical thinking, enquiry and metacognition. Common misconceptions about responding to and grading writing can get in the way of using writing as an effective pedagogical tool. Here, we attempt to dispel these myths and provide recommendations for effective teaching.

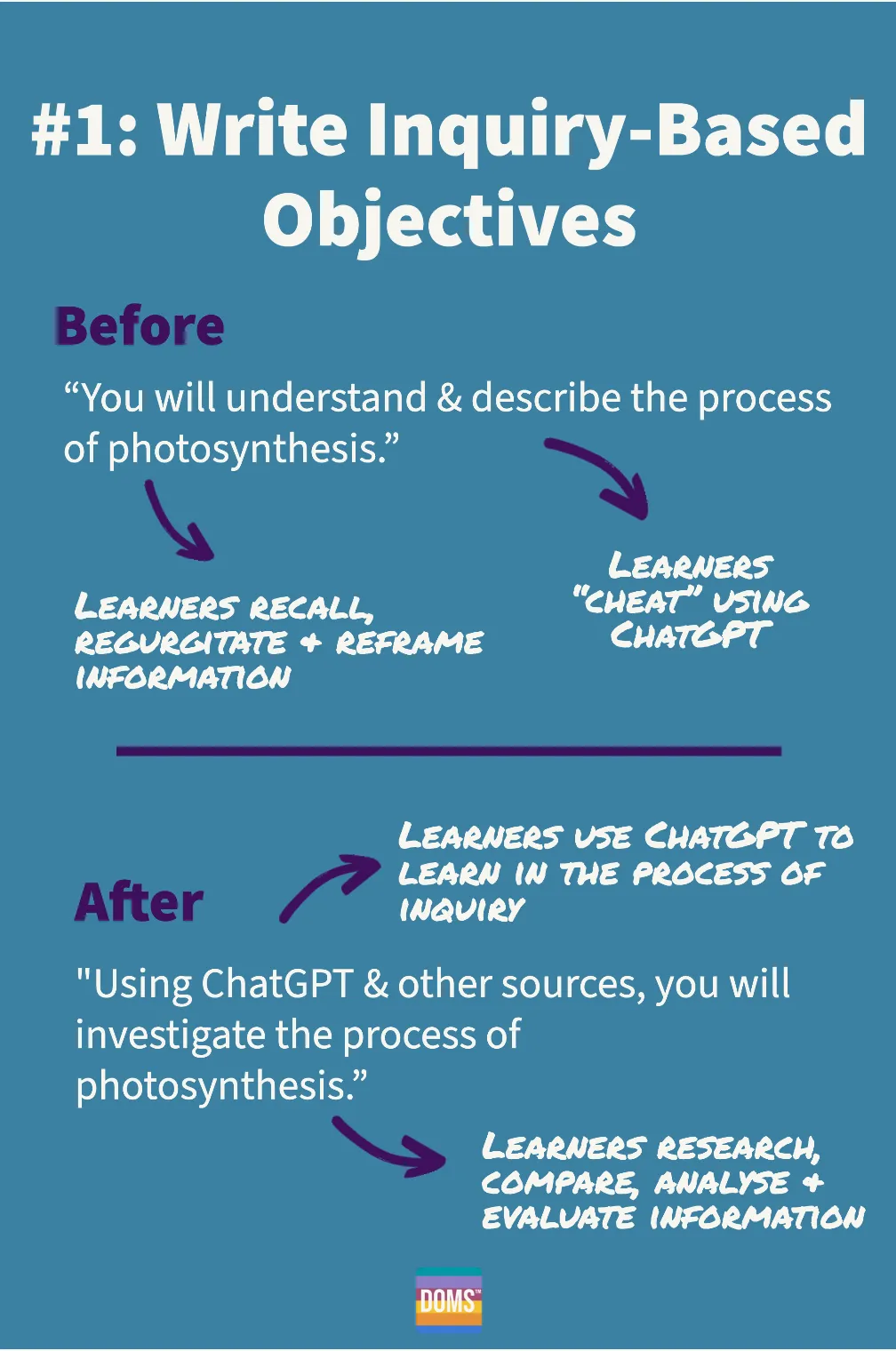

How generative AI like ChatGPT is pushing assessment reform — from timeshighereducation.com by Amir Ghapanchi

AI has brought assessment and academic integrity in higher education to the fore. Here, Amir Ghapanchi offers seven ways to evaluate student learning that mitigate the impact of AI writers

Recommended assessment types to mitigate AI use

These assessment types can help universities to minimise the adverse effects of GAI:

- Staged assignments

- In-class presentations followed by questions

- Group projects

- Personal reflection essays

- Class discussion

- In-class handwritten exams

- Performance-based assessments

Instructors Rush to Do ‘Assignment Makeovers’ to Respond to ChatGPT — from edsurge.com by Jeffrey R. Young

(Referring to rubrics) But, Bruff says, “the more transparent I am in the assignment description, the easier it is to paste that description into ChatGPT to have it do the work for you. There’s a deep irony there.”

Bruff, the teaching consultant, says his advice to any teacher is not to have an “us against them mentality” with students. Instead, he suggests, instructors should admit that they are still figuring out strategies and boundaries for new AI tools as well, and should work with students to develop ground rules for how much or how little tools like ChatGPT can be used to complete homework.

Nearly 90% of staff report major barriers between traditional and emerging academic programs — from universitybusiness.com by Alcino Donadel

Only 53% of respondents recognized an existing strategic initiative at their institution with regard to PCE units; 17% indicated none existed, and 30% were not sure.

In the American Association of Collegiate Registrars and Admissions Officers’ (AACRAO) new survey on how institutions are mediating PCE units’ coexistence with the academic registrar, they found that once-siloed PCE units that are now converging with the academic registrar are causing internal tension and confusion.

“Because the two units have been organically grown for years to be separate institutions and to offer different things, it is difficult to grow together without knowing the goals of each or having a relationship,” one anonymized respondent said in the report.