How Easy Is It/Will It Be to Use AI to Design a Course? — from wallyboston.com by Wally Boston

Excerpt:

Last week I received a text message from a friend telling me to check out a March 29th Campus Technology article about the French AI startup Nolej. Nolej (pronounced “Knowledge”) has developed an OpenAI-based instructional content generator for educators called NolejAI.

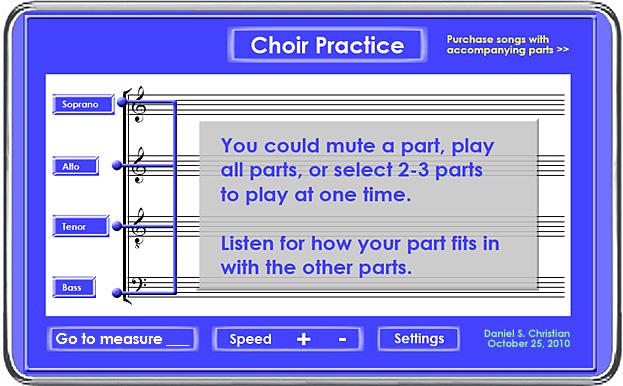

Access to NolejAI is through a browser. Users can upload video, audio, text documents, or a website URL. NolejAI will generate an interactive micro-learning package, a standalone digital lesson that includes a content transcript, summaries, a glossary of terms, flashcards, and quizzes. All of the generated lesson materials are based on the uploaded materials.

From DSC:

I wonder if this will turn out to be the case:

I am sure it’s only a matter of time before NolejAI or another product becomes capable of generating a standard three-credit-hour college course. Whether that is six months or two years, it’s likely sooner than we think.

Also relevant/see: