In the world of 2030 and beyond, employers will no longer be separate from the education establishment. Pressures on both the supply and demand sides are so large that employers and learners will end up, by default, co-designing new learning experiences in which all learning counts.

OBJECTIVES FOR CONVENINGS

- Identify the skills everyone will need to navigate the changing relationship between machine intelligence and people over the next 10–12 years.

- Develop implications for work, workers, students, working learners, employers, and policymakers.

- Identify a preliminary set of actions that need to be taken now to best prepare for the changing work + learn ecosystem.

Three key questions guided the discussions:

- What are the LEAST and MOST essential skills needed for the future?

- Where and how will tomorrow’s workers and learners acquire the skills they really need?

- Who is accountable for making sure individuals can thrive in this new economy?

This report summarizes the experts’ views on what skills will likely be needed to navigate the work + learn ecosystem over the next 10–15 years—and their suggested steps for better serving the nation’s future needs.

In a new world of work, driven especially by AI, institutionally sanctioned curricula could give way to AI-personalized learning. This would drastically change the nature of existing social contracts between employers and employees, teachers and students, and governments and citizens. Traditional social contracts would need to be renegotiated or revamped entirely. In the process, institutional assessment and evaluation could well shift from top-down methods to new bottom-up tools and processes for developing capacities, valuing skills, and managing performance through new kinds of reputation or accomplishment scores.

…

In October 2017, Chris Wanstrath, CEO of GitHub, the foremost code-sharing and social networking resource for programmers today, made a bold statement: “The future of coding is no coding at all.” He believes that the writing of code will be automated in the near future, leaving humans to focus on “higher-level strategy and design of software.” Many of the experts at the convenings agreed. Even creating the AI systems of tomorrow, they asserted, will likely require less human coding than is needed today, with graphical interfaces turning AI programming into a drag-and-drop operation.

Digital fluency does not mean knowing programming languages. Experts at both convenings contended that effectively “befriending the machine” will be less about teaching people to code and more about being able to empathize with AIs and machines: understanding how they “see the world,” “think,” and “make decisions.” Machines will also create their own languages to talk to one another.

…

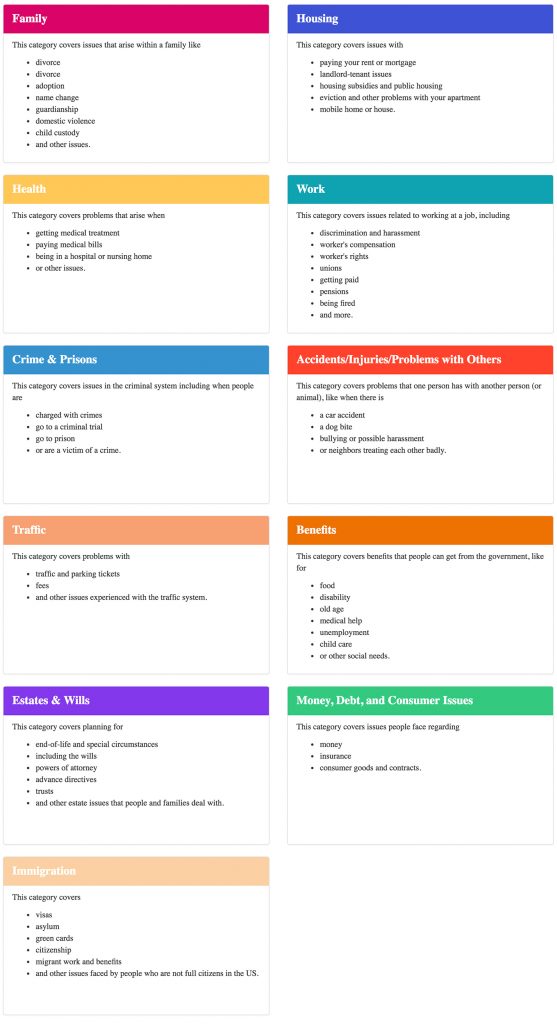

Here are many of the skills the experts expect to be needed little, if at all, in the future:

- Coding. Systems will be self-programming.

- Building AI systems. Graphic interfaces will turn AI programming into drag-and-drop operations.

- Calendaring, scheduling, and organizing. There won’t be a need for email triage.

- Planning and even decision-making. AI assistants will take these over.

- Creating more personalized curricula. Learners may design more of their own personalized learning adventures.

- Writing and reviewing resumes. Digital portfolios, personal branding, and performance reputation will replace resumes.

- Language translation and localization. This will happen in real time using translator apps.

- Legal research and writing. Many of our legal systems will be automated.

- Validation skills. Machines will check people’s work to validate their skills.

- Driving. Driverless vehicles will remove the need to learn how to drive.

Here’s a list of the most essential skills needed for the future:

- Quantitative and algorithmic thinking.

- Managing reputation.

- Storytelling and interpretive skills.

- First principles thinking.

- Communicating with machines as machines.

- Augmenting high-skilled physical tasks with AI.

- Optimization and debugging frame of mind.

- Creativity and growth mindset.

- Adaptability.

- Emotional intelligence.

- Truth seeking.

- Cybersecurity.

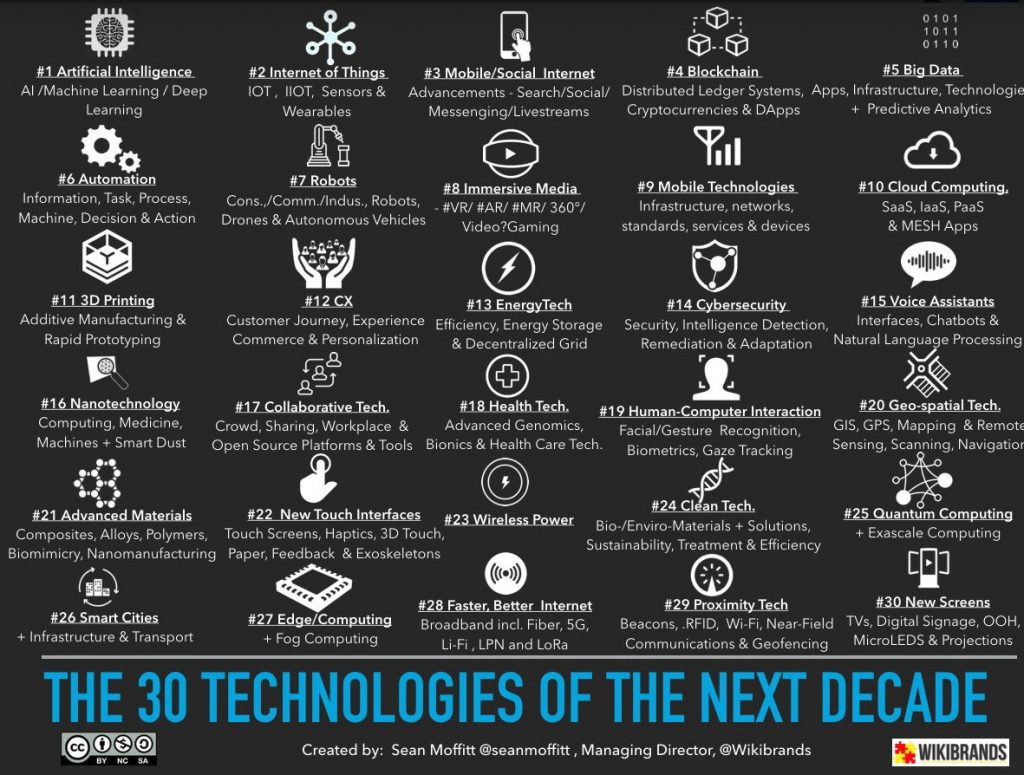

The rise of machine intelligence is just one of the many powerful social, technological, economic, environmental, and political forces that are rapidly and disruptively changing how everyone will work and learn. Because this largely tech-driven force is so interconnected with other drivers of change, it is nearly impossible to understand the impact of intelligent agents on how we will work and learn without also imagining the ways in which these new tools will reshape how we live.