Complete Guide to Virtual Reality Careers — from vudream.com by Mark Metry

Excerpt:

So you want to jump into the illustrious, intricate pool of Virtual Reality?

Come on in, my friend. The water is warm with confusion and camaraderie. To be honest, few people have any idea what’s going on in the industry.

VR is a brand-new industry; hardly anyone has experience.

That’s a good thing for you.

…

Marxent Labs reports that there are 5 virtual reality jobs.

UX/UI Designers:

UX/UI Designers create roadmaps demonstrating how the app should flow and design the look and feel of the app, in order to ensure user-friendly experiences.

Unity Developers:

Specializing in Unity 3D software, Unity Developers create the foundation of the experience.

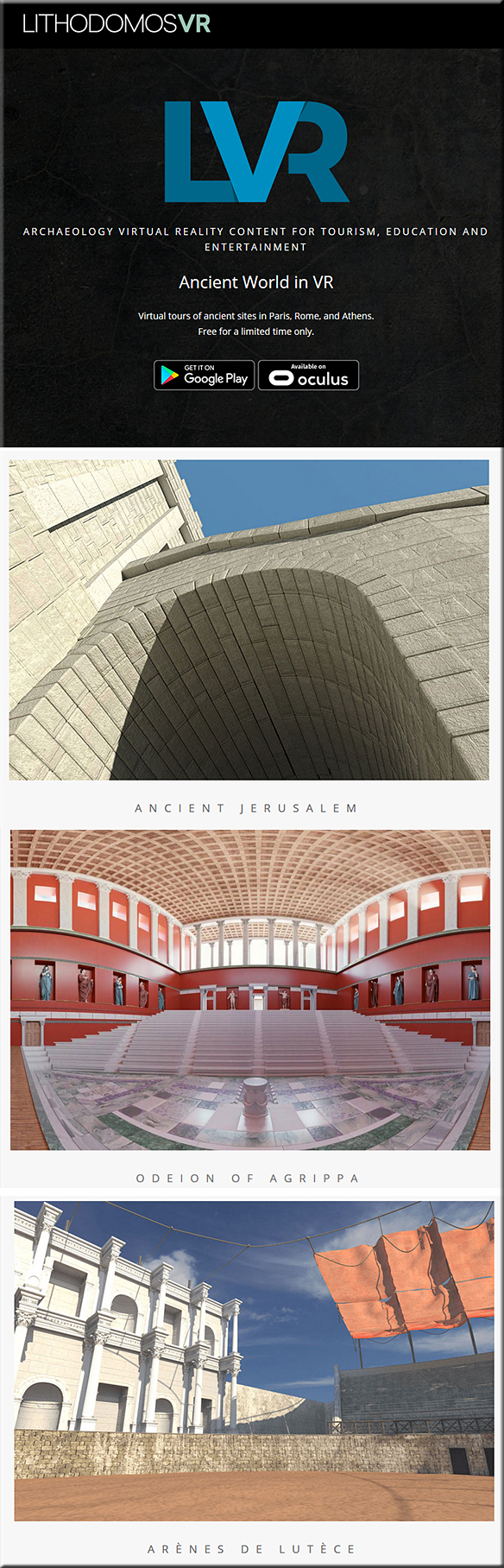

3D Modelers:

3D artists render lifelike digital imagery.

Animators:

Animators bring the 3D models to life. Many 3D modelers are cross-trained in animation, which is a highly recommended combination for a 3D candidate to possess.

Project Manager:

The Project Manager is responsible for communicating deadlines, budgets, requirements, roadblocks, and more between the client and the internal team.

Videographer:

Each project is captured and edited into clips to make showcase videos for marketing and entertainment.

Virtual Reality (VR) jobs jump in the job market — from forbes.com by Karsten Strauss

Excerpt:

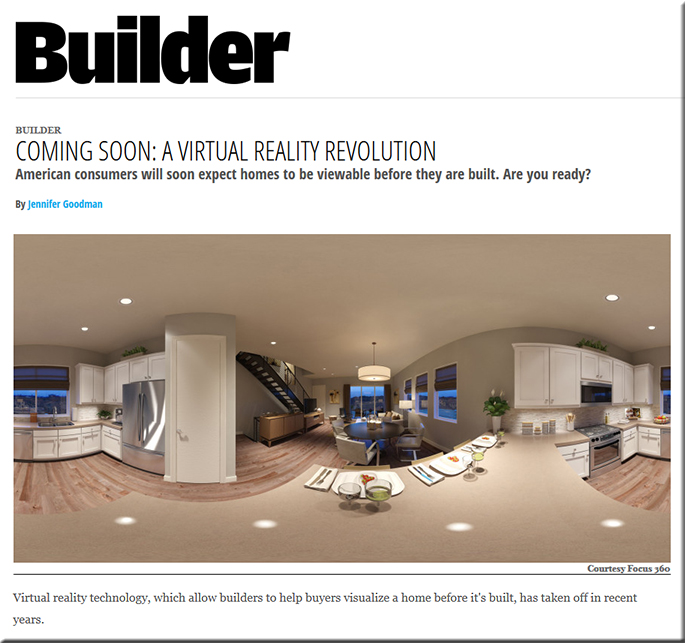

One of the more vibrant, up-and-coming sectors of the tech industry these days is virtual reality. From the added dimension it brings to gaming and media consumption to the level of immersion the technology can bring to marketing, VR is expected to see a bump in the near future.

And major players have not been blind to that potential. Most famously, Facebook’s Mark Zuckerberg laid down a $2 billion bet on the technology in the spring of 2014 when his company acquired virtual reality firm Oculus VR, maker of the Oculus Rift. That investment put a stamp of confidence on the space and it’s grown ever since.

So it makes sense, then, that tech-facing companies are scanning for developers and coders who can help them build out their VR capabilities. Though still early, some in the job-search industry are noticing a trend in the hiring market.

![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)
