Amazon pledges $700 million to teach its workers to code — from wired.com by Louise Matsakis

Excerpt:

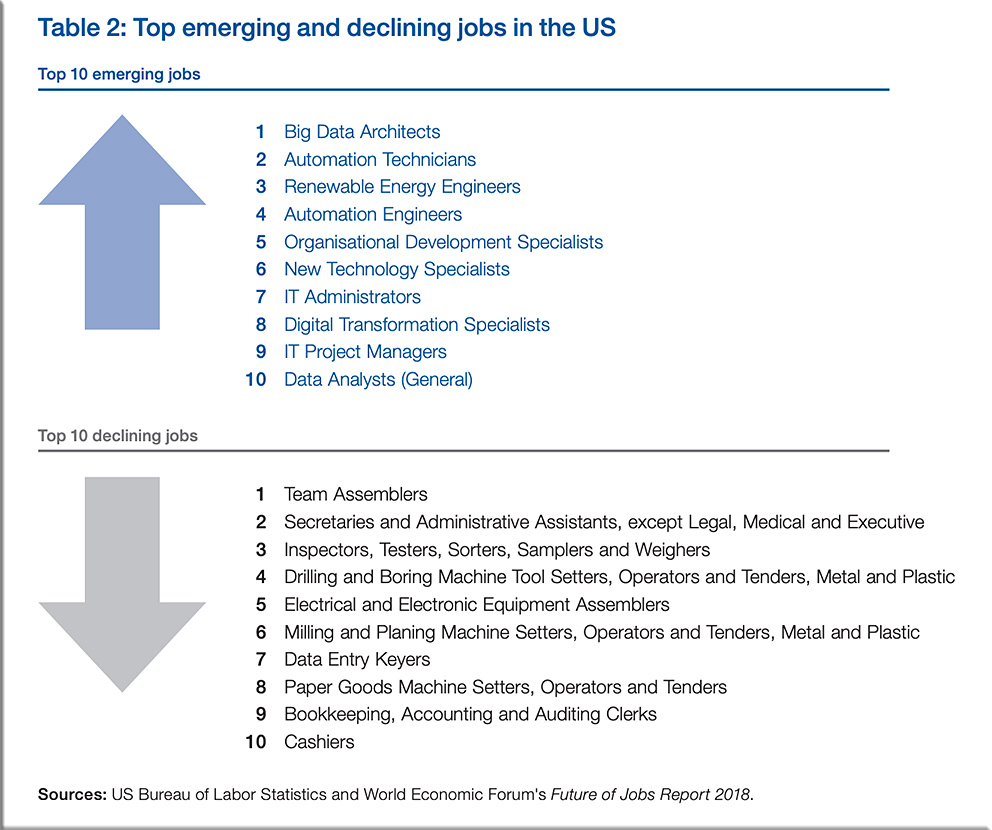

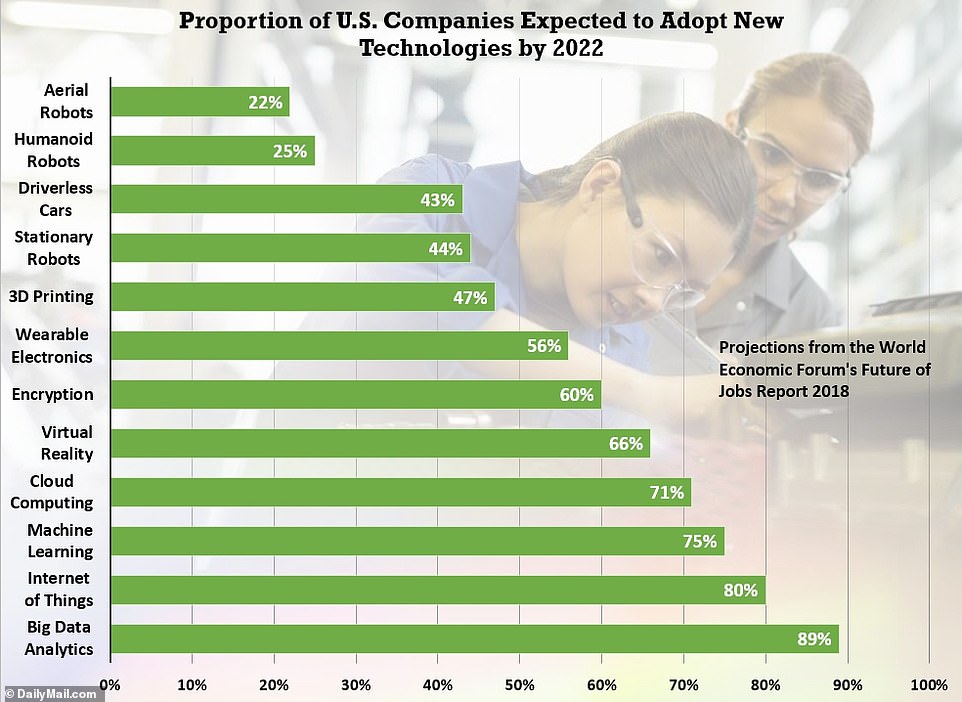

Amazon announced Thursday that it will spend up to $700 million over the next six years retraining 100,000 of its US employees, mostly in technical skills like software engineering and IT support. Amazon is already one of the largest employers in the country, with almost 300,000 workers (and many more contractors) and it’s particularly hungry for more new talent. The company currently has more than 20,000 vacant US roles, over half of which are at its headquarters in Seattle. Meanwhile, the US economy is booming, and there are now more open jobs than there are unemployed people who can fill them, according to the Bureau of Labor Statistics.

Against that backdrop, Amazon’s jobs skills efforts provide some reassurance that—in theory at least—you could be retrained into a new role when the robots arrive.

From the announcement:

Based on a review of its workforce and analysis of U.S. hiring, Amazon’s fastest growing highly skilled jobs over the last five years include data mapping specialist, data scientist, solutions architect and business analyst, as well as logistics coordinator, process improvement manager and transportation specialist within our customer fulfillment network.

Also see:

- Amazon to Invest $700M to Retrain 100,000 Workers for New Jobs — from pcmag.com by Michael Kan

‘There is a greater need for technical skills in the workplace than ever before. Amazon is no exception,’ the company said. The goal is to ‘upskill’ one third of Amazon’s total work force by 2025 through free retraining programs.