Robots will take jobs, but not as fast as some fear, new report says — from nytimes.com by Steve Lohr

Excerpt:

The robots are coming, but the march of automation will displace jobs more gradually than some alarming forecasts suggest.

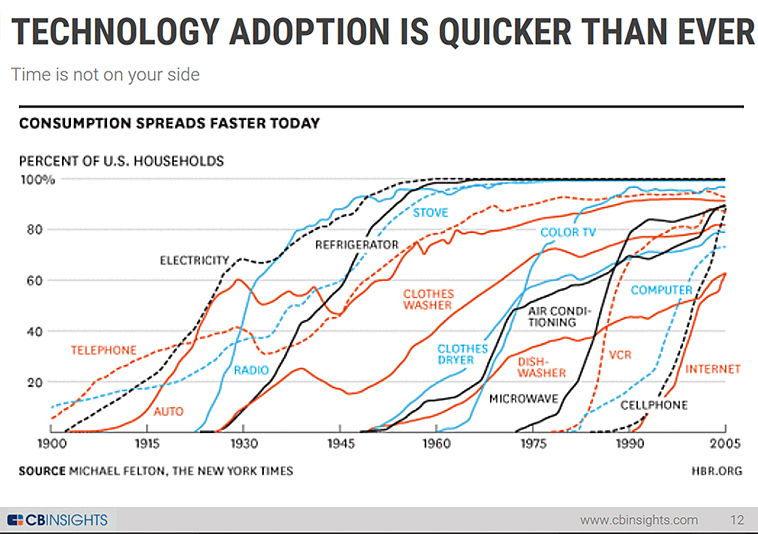

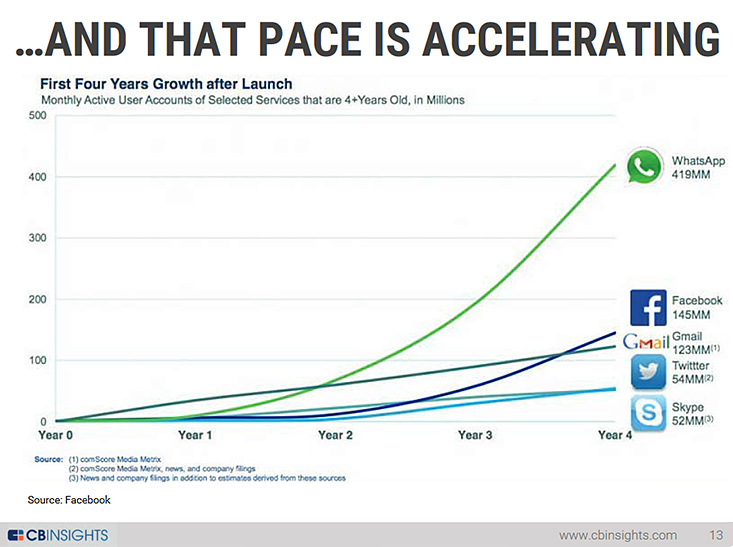

A measured pace is likely because what is technically possible is only one factor in determining how quickly new technology is adopted, according to a new study by the McKinsey Global Institute. Other crucial ingredients include economics, labor markets, regulations and social attitudes.

The report, which was released Thursday, breaks jobs down by work tasks — more than 2,000 activities across 800 occupations, from stock clerk to company boss. The institute, the research arm of the consulting firm McKinsey & Company, concludes that many tasks can be automated and that most jobs have activities ripe for automation. But the near-term impact, the report says, will be to transform work more than to eliminate jobs.

So while further automation is inevitable, McKinsey’s research suggests that it will be a relentless advance rather than an economic tidal wave.

Harnessing automation for a future that works — from mckinsey.com by James Manyika, Michael Chui, Mehdi Miremadi, Jacques Bughin, Katy George, Paul Willmott, and Martin Dewhurst

Automation is happening, and it will bring substantial benefits to businesses and economies worldwide, but it won’t arrive overnight. A new McKinsey Global Institute report finds realizing automation’s full potential requires people and technology to work hand in hand.

Excerpt:

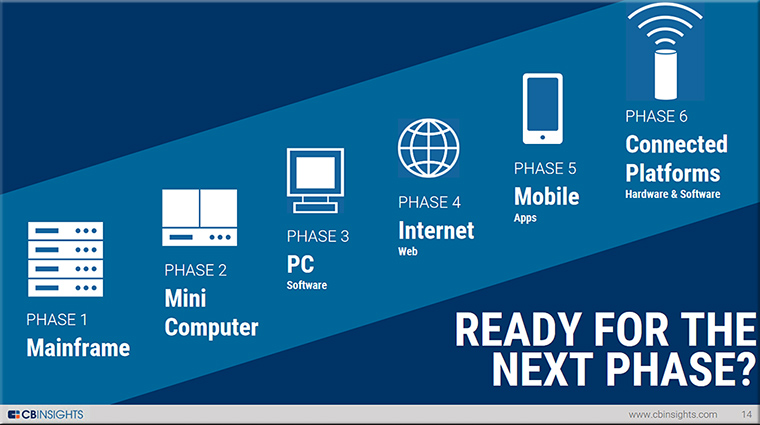

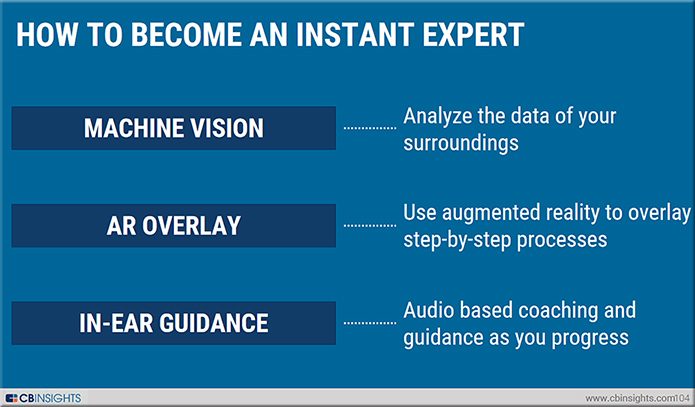

Recent developments in robotics, artificial intelligence, and machine learning have put us on the cusp of a new automation age. Robots and computers can not only perform a range of routine physical work activities better and more cheaply than humans, but they are also increasingly capable of accomplishing activities that include cognitive capabilities once considered too difficult to automate successfully, such as making tacit judgments, sensing emotion, or even driving. Automation will change the daily work activities of everyone, from miners and landscapers to commercial bankers, fashion designers, welders, and CEOs. But how quickly will these automation technologies become a reality in the workplace? And what will their impact be on employment and productivity in the global economy?

The McKinsey Global Institute has been conducting an ongoing research program on automation technologies and their potential effects. A new MGI report, A future that works: Automation, employment, and productivity, highlights several key findings.

Also related/see:

This Japanese Company Is Replacing Its Staff With Artificial Intelligence — from fortune.com by Kevin Lui

Excerpt:

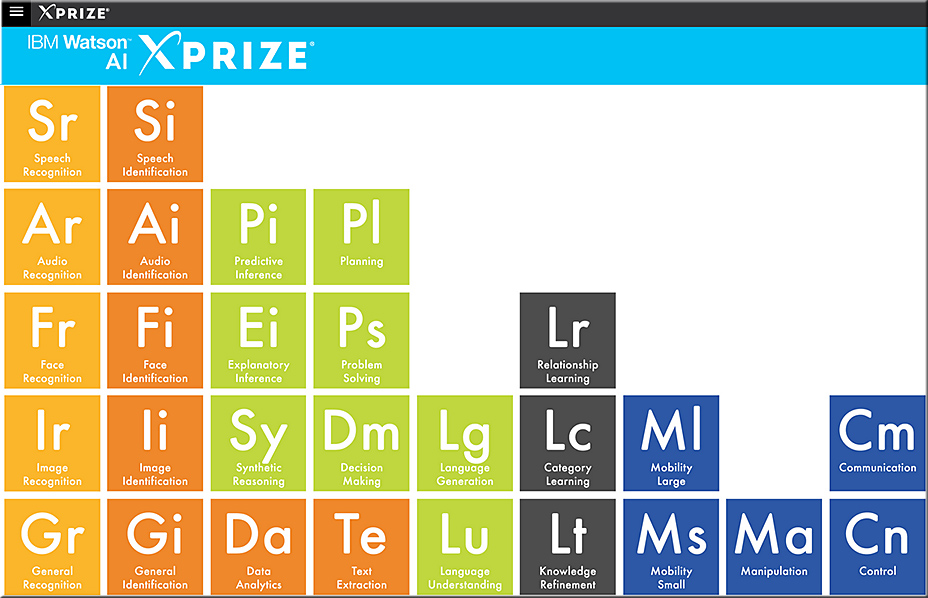

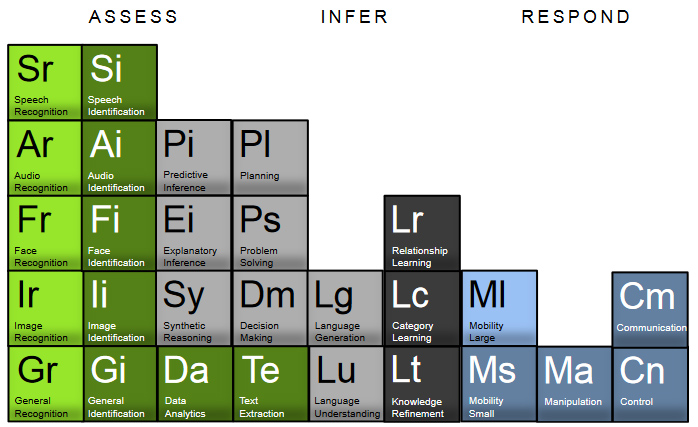

The year of AI has well and truly begun, it seems. An insurance company in Japan announced that it will lay off more than 30 employees and replace them with an artificial intelligence system. The technology will be based on IBM’s Watson Explorer, which is described as having “cognitive technology that can think like a human,” reports the Guardian. Japan’s Fukoku Mutual Life Insurance said the new system will take over from its human counterparts by calculating policy payouts. The company said it hopes the AI will be 30% more productive and aims to see investment costs recouped within two years. Fukoku Mutual Life said it expects the $1.73 million smart system—which costs around $129,000 each year to maintain—to save the company about $1.21 million each year. The 34 staff members will officially be replaced in March.
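As a rough check on the "recouped within two years" claim, the figures quoted in the excerpt can be run through a simple payback calculation. This is only a sketch: the excerpt does not say whether the $1.21 million in yearly savings is before or after the $129,000 maintenance cost, so both readings are shown as assumptions, and the helper name `payback_years` is made up for illustration. It ignores discounting, taxes, and ramp-up time.

```python
# Figures quoted in the Fortune excerpt (US dollars).
SYSTEM_COST = 1_730_000        # one-time cost of the system
ANNUAL_MAINTENANCE = 129_000   # yearly maintenance cost
ANNUAL_SAVINGS = 1_210_000     # expected yearly savings


def payback_years(upfront: float, yearly_benefit: float) -> float:
    """Simple (undiscounted) payback period in years. Hypothetical helper."""
    if yearly_benefit <= 0:
        raise ValueError("yearly benefit must be positive")
    return upfront / yearly_benefit


# Assumption A: the quoted savings are gross, so maintenance is subtracted.
net_of_maintenance = payback_years(SYSTEM_COST, ANNUAL_SAVINGS - ANNUAL_MAINTENANCE)

# Assumption B: the quoted savings already account for maintenance.
savings_as_quoted = payback_years(SYSTEM_COST, ANNUAL_SAVINGS)

print(f"Payback if maintenance is subtracted: {net_of_maintenance:.2f} years")
print(f"Payback if savings are already net:   {savings_as_quoted:.2f} years")
```

Under either reading the payback period comes out below two years (about 1.6 and 1.4 years respectively), which is consistent with the company's stated aim.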

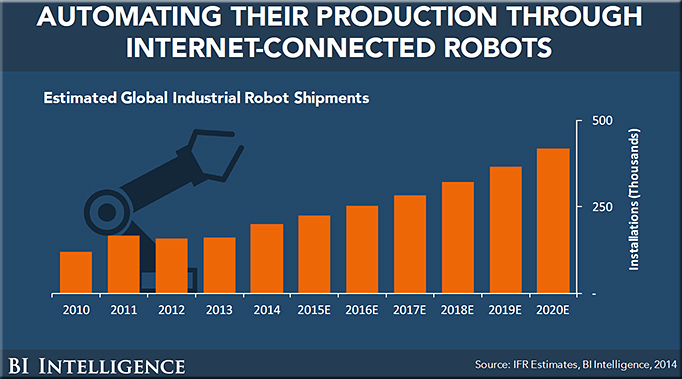

Also from “The Internet of Everything” report in 2016 by BI Intelligence:

A Darker Theme in Obama’s Farewell: Automation Can Divide Us — from nytimes.com by Claire Cain Miller

Excerpt:

Underneath the nostalgia and hope in President Obama’s farewell address Tuesday night was a darker theme: the struggle to help the people on the losing end of technological change.

“The next wave of economic dislocations won’t come from overseas,” Mr. Obama said. “It will come from the relentless pace of automation that makes a lot of good, middle-class jobs obsolete.”

Artificial Intelligence, Automation, and the Economy — from whitehouse.gov by Kristin Lee

Summary:

[On 12/20/16], the White House released a new report on the ways that artificial intelligence will transform our economy over the coming years and decades.

Although it is difficult to predict these economic effects precisely, the report suggests that policymakers should prepare for five primary economic effects:

- Positive contributions to aggregate productivity growth;
- Changes in the skills demanded by the job market, including greater demand for higher-level technical skills;
- Uneven distribution of impact, across sectors, wage levels, education levels, job types, and locations;
- Churning of the job market as some jobs disappear while others are created; and
- The loss of jobs for some workers in the short-run, and possibly longer depending on policy responses.