Police across the US are training crime-predicting AIs on falsified data — from technologyreview.com by Karen Hao

A new report shows how supposedly objective systems can perpetuate corrupt policing practices.

Excerpts (emphasis DSC):

Despite the disturbing findings, the city entered a secret partnership only a year later with data-mining firm Palantir to deploy a predictive policing system. The system used historical data, including arrest records and electronic police reports, to forecast crime and help shape public safety strategies, according to company and city government materials. At no point did those materials suggest any effort to clean or amend the data to address the violations revealed by the DOJ. In all likelihood, the corrupted data was fed directly into the system, reinforcing the department’s discriminatory practices.

…

But new research suggests it’s not just New Orleans that has trained these systems with “dirty data.” In a paper released today, to be published in the NYU Law Review, researchers at the AI Now Institute, a research center that studies the social impact of artificial intelligence, found the problem to be pervasive among the jurisdictions it studied. This has significant implications for the efficacy of predictive policing and other algorithms used in the criminal justice system.

“Your system is only as good as the data that you use to train it on,” says Kate Crawford, cofounder and co-director of AI Now and an author on the study.

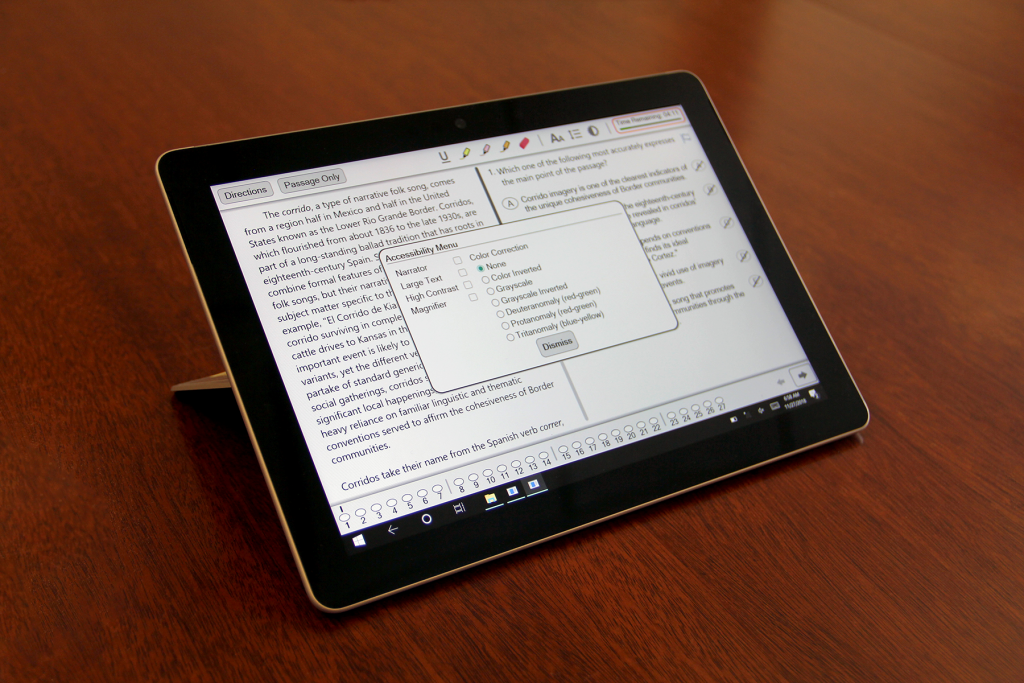

How AI is enhancing wearables — from techopedia.com by Claudio Butticev

Takeaway: Wearable devices have been helping people for years now, but the addition of AI to these wearables is giving them capabilities beyond anything seen before.

Excerpt:

Restoring Lost Sight and Hearing – Is That Really Possible?

People with sight or hearing loss must face a lot of challenges every day to perform many basic activities. From crossing the street to ordering food on the phone, even the simplest chore can quickly become a struggle. Things may change for those struggling with sight or hearing loss, however, as some companies have started developing machine learning-based systems to help the blind and visually impaired find their way across cities, and the deaf and hearing impaired enjoy some good music.

German AI company AiServe combined computer vision and wearable hardware (camera, microphone and earphones) with AI and location services to design a system that is able to acquire data over time to help people navigate through neighborhoods and city blocks. Sort of like a car navigation system, but in a much more adaptable form which can “learn how to walk like a human” by identifying all the visual cues needed to avoid common obstacles such as light posts, curbs, benches and parked cars.

From DSC:

So once again we see the pluses and minuses of a given emerging technology. In fact, most technologies can be used for good or for ill. But I’m left asking the following question:

- As citizens, what do we do if we don’t like a direction that’s being taken on a given technology or on a given set of technologies? Or on a particular feature, use, process, or development involved with an emerging technology?

One other reflection here: it will be really interesting to see what happens in the future as some of these emerging technologies are combined…again, for good or for ill.

The question is:

How can we weigh in?

Also relevant/see:

AI Now Report 2018 — from ainowinstitute.org, December 2018

Excerpt:

University AI programs should expand beyond computer science and engineering disciplines. AI began as an interdisciplinary field, but over the decades has narrowed to become a technical discipline. With the increasing application of AI systems to social domains, it needs to expand its disciplinary orientation. That means centering forms of expertise from the social and humanistic disciplines. AI efforts that genuinely wish to address social implications cannot stay solely within computer science and engineering departments, where faculty and students are not trained to research the social world. Expanding the disciplinary orientation of AI research will ensure deeper attention to social contexts, and more focus on potential hazards when these systems are applied to human populations.

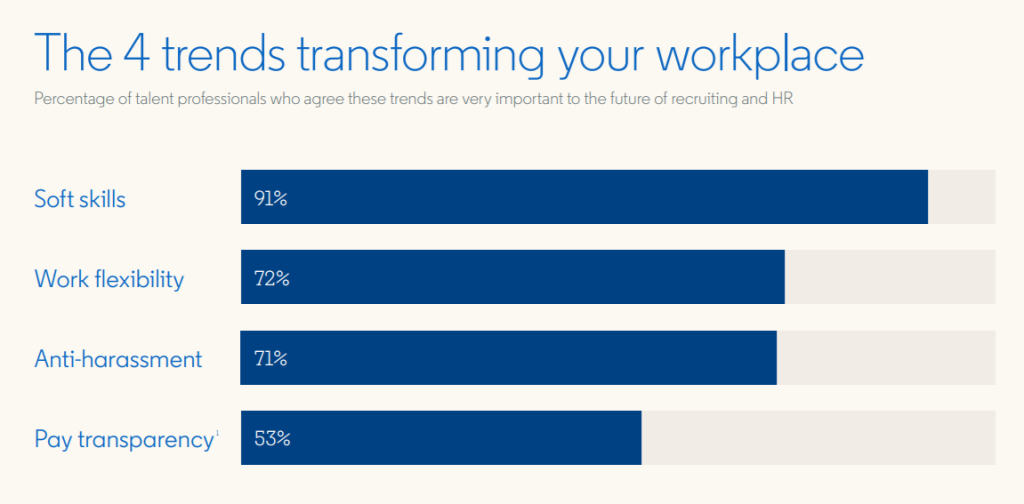

Furthermore, it is long overdue for technology companies to directly address the cultures of exclusion and discrimination in the workplace. The lack of diversity and ongoing tactics of harassment, exclusion, and unequal pay are not only deeply harmful to employees in these companies but also impact the AI products they release, producing tools that perpetuate bias and discrimination.

The current structure within which AI development and deployment occurs works against meaningfully addressing these pressing issues. Those in a position to profit are incentivized to accelerate the development and application of systems without taking the time to build diverse teams, create safety guardrails, or test for disparate impacts. Those most exposed to harm from these systems commonly lack the financial means and access to accountability mechanisms that would allow for redress or legal appeals. This is why we are arguing for greater funding for public litigation, labor organizing, and community participation as more AI and algorithmic systems shift the balance of power across many institutions and workplaces.

Also relevant/see: