How chatbots and deep learning will change the future of organizations — from forbes.com by Daniel Newman

Excerpt (emphasis DSC):

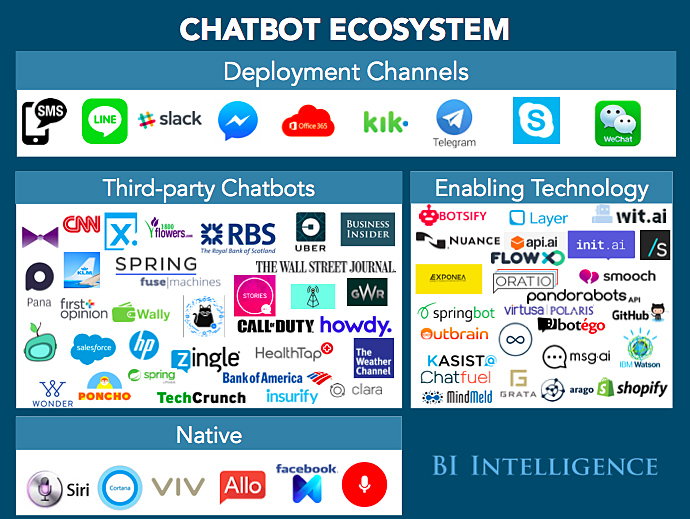

Don’t let the fun, casual name mislead you. Chatbots—software that you can “chat with”—have serious implications for the business world. Though many businesses have already considered their use for customer service purposes, a chatbot’s internal applications could be invaluable on a larger scale. For instance, chatbots could help employees break down siloes and provide targeted data to fuel every department. This digital transformation is happening, even in organizational structures that face challenges with other formats of real-time communication.

Still unclear on what chatbots are and what they do? Think of a digital assistant—such as iPhone’s Siri or Alexa, the Artificial Intelligence within the Amazon Echo. A chatbot reduces or eliminates the need for many mobile apps, as the answers are stored inside the chatbot. Need to know what the weather’s like in LA? Ask your chatbot. Is your flight running on time? Ask your chatbot. Is the package you ordered going to be delivered while you’re away? You get the gist.

From DSC:

How might institutions of higher education as well as K-12 school districts benefit from such internal applications of chatbots? How about external applications of chatbots? If done well, such applications could facilitate solid customer service (e.g., self-service knowledge bases) and aid in communications.

Hold Tight: The Chatbot Era Has Begun — from chatbotsmagazine.com by

Chatbots: What they are, and why they’re so important for businesses

Excerpt:

Imagine being able to have your own personal assistant at your fingertips, who you can chat with, give instructions and errands to, and handle the majority of your online research — both personally and professionally.

That’s essentially what a chatbot does.

Capable of being installed on any messenger platform, such as Facebook Messenger or text messages, a chatbot is a basic artificial intelligence computer software program that does the legwork for you — and communicates in a way that feels like an intelligent conversation over text or voice messaging.

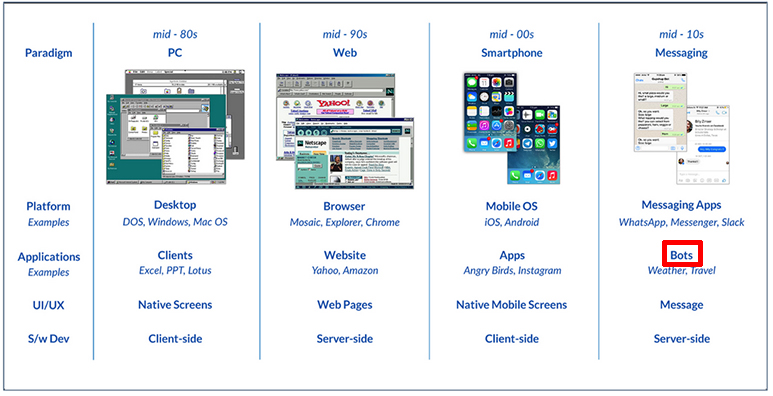

Once-in-a-decade paradigm shift: Messaging — from chatbotsmagazine.com by Beerud Sheth

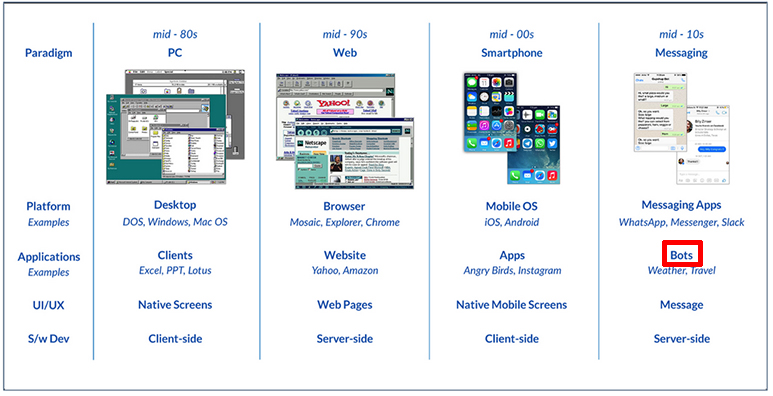

In the history of the personal computing industry, we have had a paradigm shift about once every decade.

Smart assistants and chatbots will be top consumer applications for AI over next 5 years, poll says — from venturebeat.com by Blaise Zerega

Excerpt:

Virtual agents and chatbots will be the top consumer applications of artificial intelligence over the next five years, according to a consensus poll released today by TechEmergence, a marketing research firm for AI and machine learning.

The emphasis on virtual agents and chatbots is in many ways not surprising. After all, the tech industry’s 800-pound gorillas have all made big bets: Apple with Siri, Amazon with Alexa, Facebook with M and Messenger, Google with Google Assistant, Microsoft with Cortana and Tay. However, the poll’s data also suggests that chatbots may soon be viewed as a horizontal enabling technology for many industries.

From DSC:

Might this eventually affect students’ expectations? Blackboard’s partnership with IBM and Amazon may come into play here.

Some like it bot: Why chatbots could be the start of something big — from business.sprint.com

Chatbots are on the rise. With Google, Facebook and Microsoft competing to be the caring voice your customers turn to, what does this mean for your enterprise?

Excerpt:

The most prevalent indicator that the artificial intelligence-driven future is rapidly becoming reality is the rise of the chatbot – an A.I. software program designed to chat with people. Well, more accurately, the re-emergence of the bot. Basically, robots talking to humans talking to robots is the tech vision for the future.

Maybe one of the most obvious uses for this technology would be in service. If you can incorporate your self-service knowledge base into a bot that responds directly to questions and uses customer data to provide the most relevant answers, it’s more convenient for customers…

Chatbot lawyer overturns 160,000 parking tickets in London and New York — from theguardian.com by Samuel Gibbs

Free service DoNotPay helps appeal over $4m in parking fines in just 21 months, but is just the tip of the legal AI iceberg for its 19-year-old creator

Excerpt:

An artificial-intelligence lawyer chatbot has successfully contested 160,000 parking tickets across London and New York for free, showing that chatbots can actually be useful.

Dubbed “the world’s first robot lawyer” by its 19-year-old creator, London-born second-year Stanford University student Joshua Browder, DoNotPay helps users contest parking tickets in an easy-to-use chat-like interface.

Addendum on 6/29/16:

A New Chatbot Would Like to Help You With Your Bank Account — from wired.com by Cade Metz

Excerpt:

People in Asia are already using MyKai, and beginning today, you can too. Because it’s focused on banking—and banking alone—it works pretty well. But it’s also flawed. And it’s a bit weird. Unsettling, even. All of which makes it a great way of deconstructing the tech world’s ever growing obsession with “chatbots.”

…

MyKai is remarkably adept at understanding what I’m asking—and that’s largely because it’s focused solely on banking. When I ask “How much money do I have?” or “How much did I spend on food in May?,” it understands. But when I ask who won the Spain-Italy match at Euro 2016, it suggests I take another tack. The thing to realize about today’s chatbots is that they can be reasonably effective if they’re honed to a particular task—and that they break down if the scope gets too wide.
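From DSC (illustration):

To make the "narrow scope" point above concrete, here is a minimal Python sketch of a bot that answers only when a question matches one of a few known topics and otherwise falls back to a polite refusal. This is not how MyKai works internally (the article does not say). The intent names, keyword sets, and the `route` and `reply` functions are all hypothetical, and real products would likely use trained language models rather than keyword matching.

```python
import re

# Hypothetical banking intents: each name maps to a set of trigger keywords.
# These are illustrative assumptions, not taken from any real product.
INTENTS = {
    "balance": {"balance", "money"},
    "spending": {"spend", "spent", "spending"},
}

FALLBACK = "Sorry, I can only help with banking questions."


def tokenize(text):
    """Lowercase the text and return its alphabetic words as a set."""
    return set(re.findall(r"[a-z]+", text.lower()))


def route(question):
    """Return the best-matching intent name, or None if nothing matches."""
    words = tokenize(question)
    best, best_score = None, 0
    for name, keywords in INTENTS.items():
        score = len(words & keywords)  # number of shared keywords
        if score > best_score:
            best, best_score = name, score
    return best


def reply(question):
    """Answer in-scope questions; use the fallback for everything else."""
    intent = route(question)
    if intent is None:
        return FALLBACK
    return f"(would handle the '{intent}' intent here)"


if __name__ == "__main__":
    for q in [
        "How much money do I have?",
        "How much did I spend on food in May?",
        "Who won the Spain-Italy match at Euro 2016?",
    ]:
        print(q, "->", reply(q))
```

With only two intents the matching is easy to get right. Every topic added to `INTENTS` raises the chance that keywords overlap and a question gets routed to the wrong place, which is one simple way to see why a bot tends to break down as its scope widens.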

![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)