Osgoode’s new simulation-based learning tool aims to merge ethical and practical legal skills — from canadianlawyermag.com by Tim Wilbur

The designer speaks about his vision for redefining legal education through an innovative platform

The disconnection between legal education and the real world starkly contrasted with what Maharg expected law school to be. “I thought rather naively…this would be a really interesting experience…linked to lawyers and what lawyers are doing in society…Far from it. It was solidly academic, so uninteresting, and I thought it’s got to be better than this.”

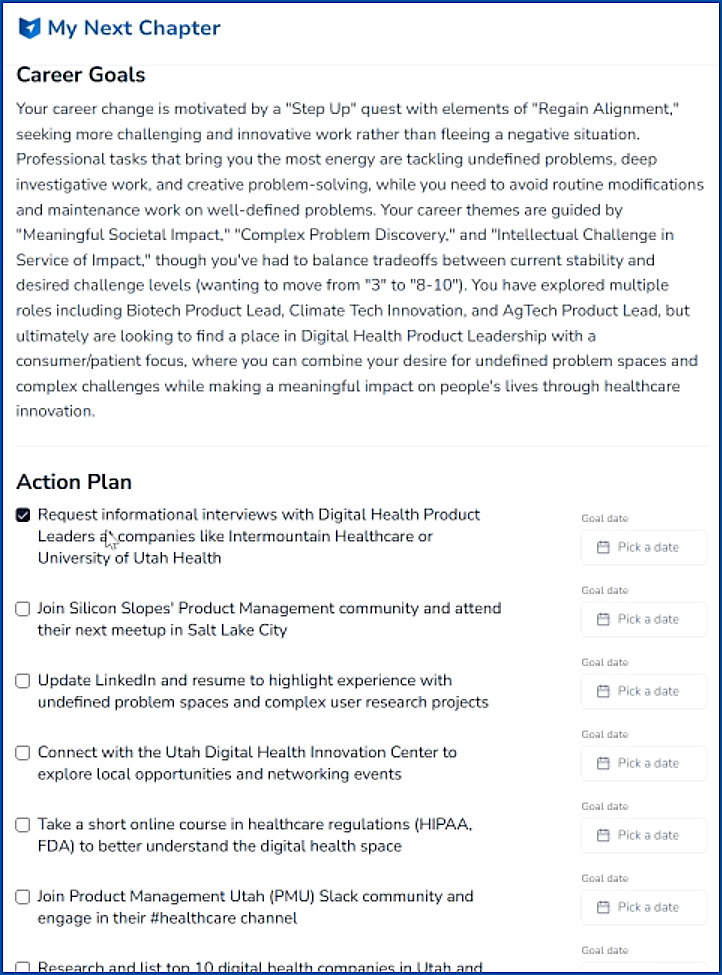

These frustrations inspired his work on simulation-based education, which seeks to produce “client-ready” lawyers and professionals who reflect deeply on their future roles. Maharg recently worked as a consultant with Osgoode Professional Development at Osgoode Hall Law School to design a platform that eschews many of the assumptions about legal education to deliver practical skills with real-world scenarios.

Osgoode’s SIMPLE platform – short for “simulated professional learning environment” – integrates case management systems and simulation engines to immerse students in practical scenarios.

“It’s actually to get them thinking hard about what they do when they act as lawyers and what they will do when they become lawyers…putting it into values and an ethical framework, as well as making it highly intensively practical,” Maharg says.

And speaking of legal training, also see:

AI in law firms should be a training tool, not a threat, for young lawyers — from canadianlawyermag.com by Tim Wilbur

Tech should free associates for deeper learning, not remove them from the process

AI is rapidly transforming legal practice. Today, tools handle document review and legal research at a pace unimaginable just a few years ago. As recent Canadian Lawyer reporting shows, legal AI adoption is outpacing expectations, especially among in-house teams, and is fundamentally reshaping how legal services are delivered.

Crucially, though, AI should not replace associates. Instead, it should relieve them of repetitive tasks and allow them to focus on developing judgment, client management, and strategic thinking. As I’ve previously discussed regarding the risks of banning AI in court, the future of law depends on blending technological fluency with the human skills clients value most.

The following also relates to legaltech:

Agentic AI in Legaltech: Proceed with Supervision! — from directory.lawnext.com by Ken Crutchfield

Semi-autonomous agents can transform work if leaders maintain oversight

The term autonomous agents should raise some concern. I believe semi-autonomous agents is a better term. Do we really want fully autonomous agents that learn and interact independently to find ways to accomplish tasks?

We live in a world full of cybersecurity risks. Bad actors will think of ways to use agents. Even well-intentioned systems could mishandle a task without proper guardrails.

Legal professionals will want to thoughtfully equip their agent technology with controlled access to the right services. Agents must be supervised, and training must be required for those using or benefiting from agents. Legal professionals will also want to expand the scope of AI Governance to include the oversight of agents.

…

Agentic AI will require supervision. Human review of Generative AI output is essential. Stating the obvious may be necessary, especially with agents. Controls, human review, and human monitoring must be part of the design and the requirements for any project. Leadership should not leave this to the IT department alone.