Which AI Video Tool Is Most Powerful for L&D Teams? by Dr. Philippa Hardman

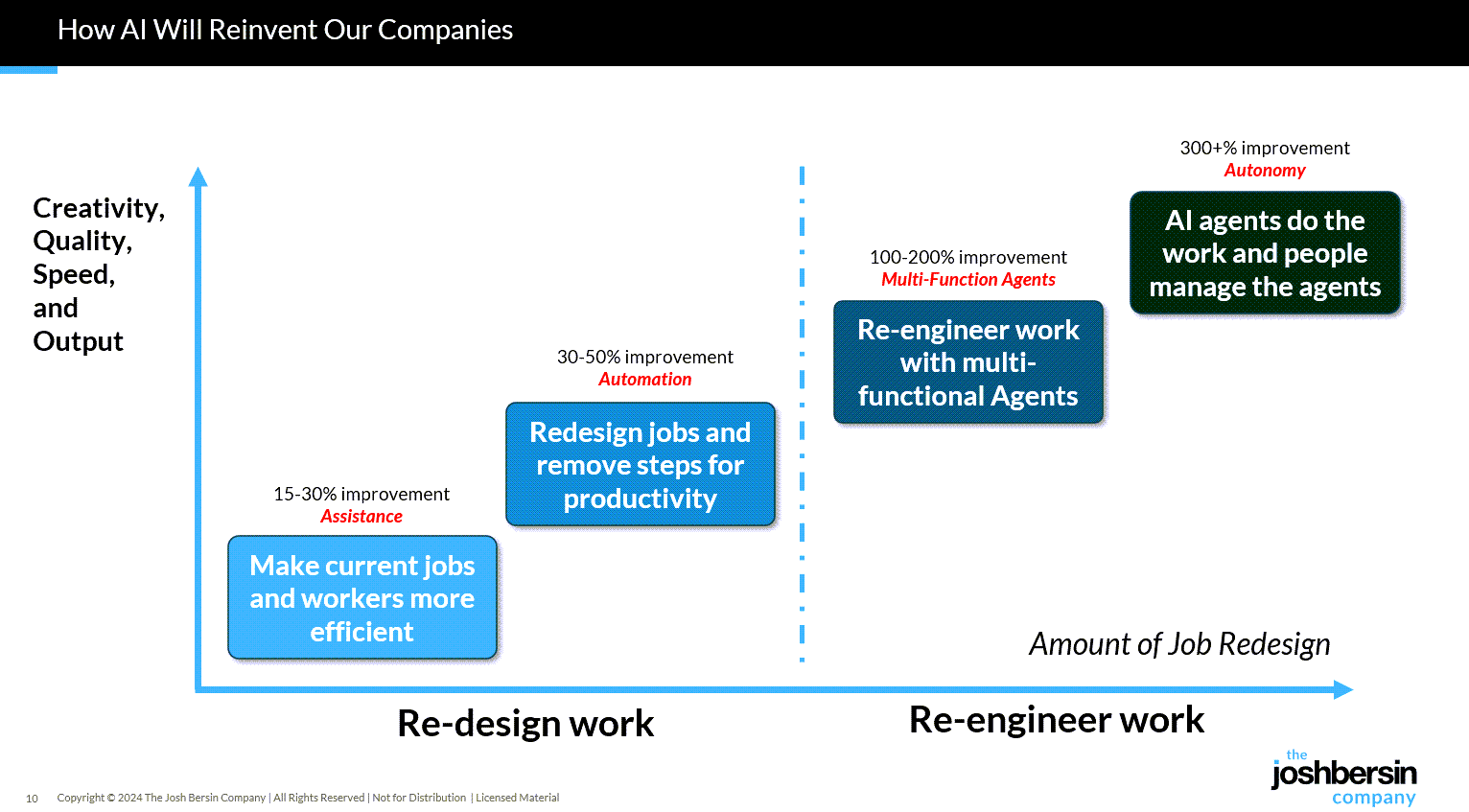

Evaluating four popular AI video generation platforms through a learning-science lens

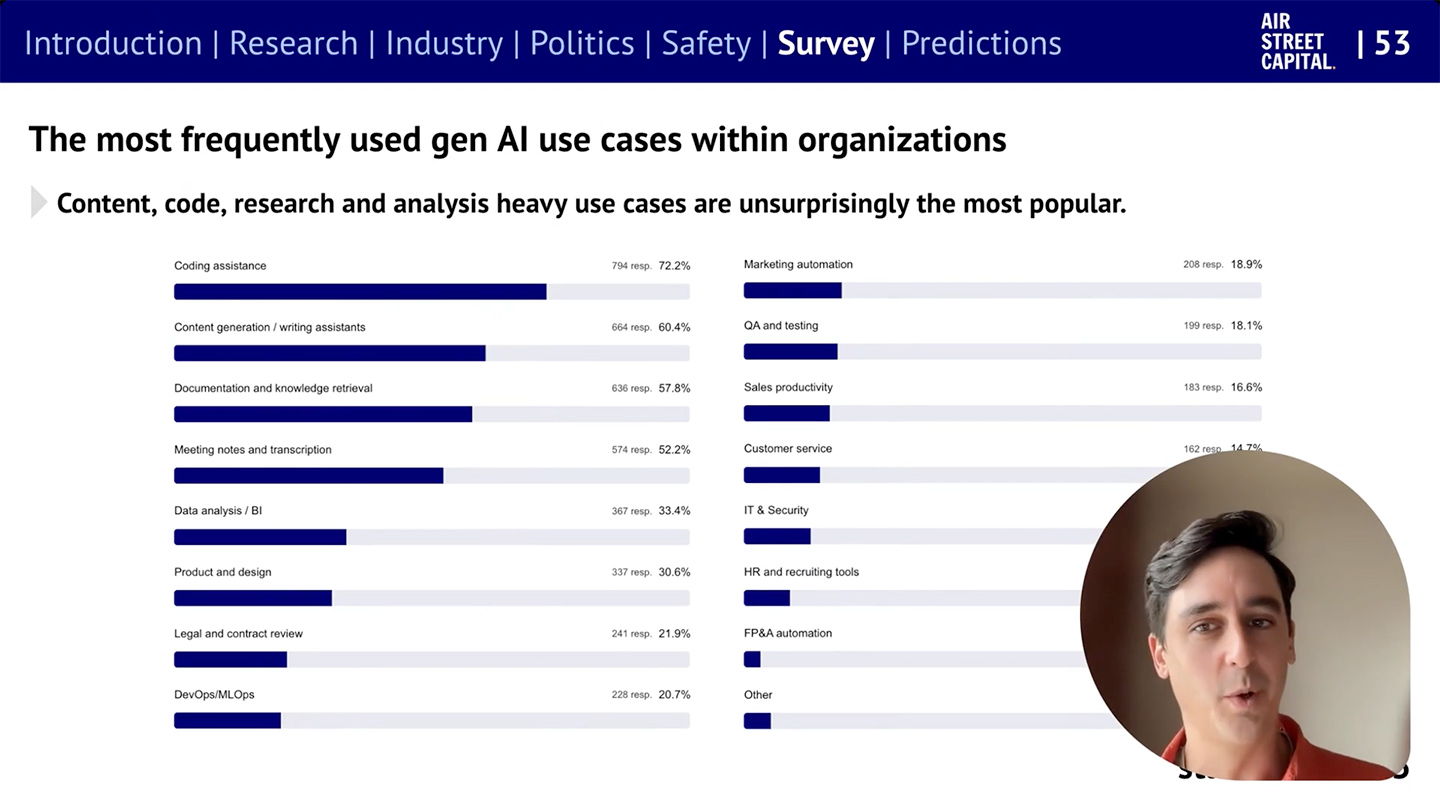

Happy new year! One of the biggest L&D stories of 2025 was the rapid rise of AI video generation tools among L&D teams. As we head into 2026, platforms like Colossyan, Synthesia, HeyGen, and NotebookLM's video creation feature are firmly embedded in most L&D tech stacks. These tools promise rapid production and multi-language output at significantly reduced cost, and they deliver on much of that.

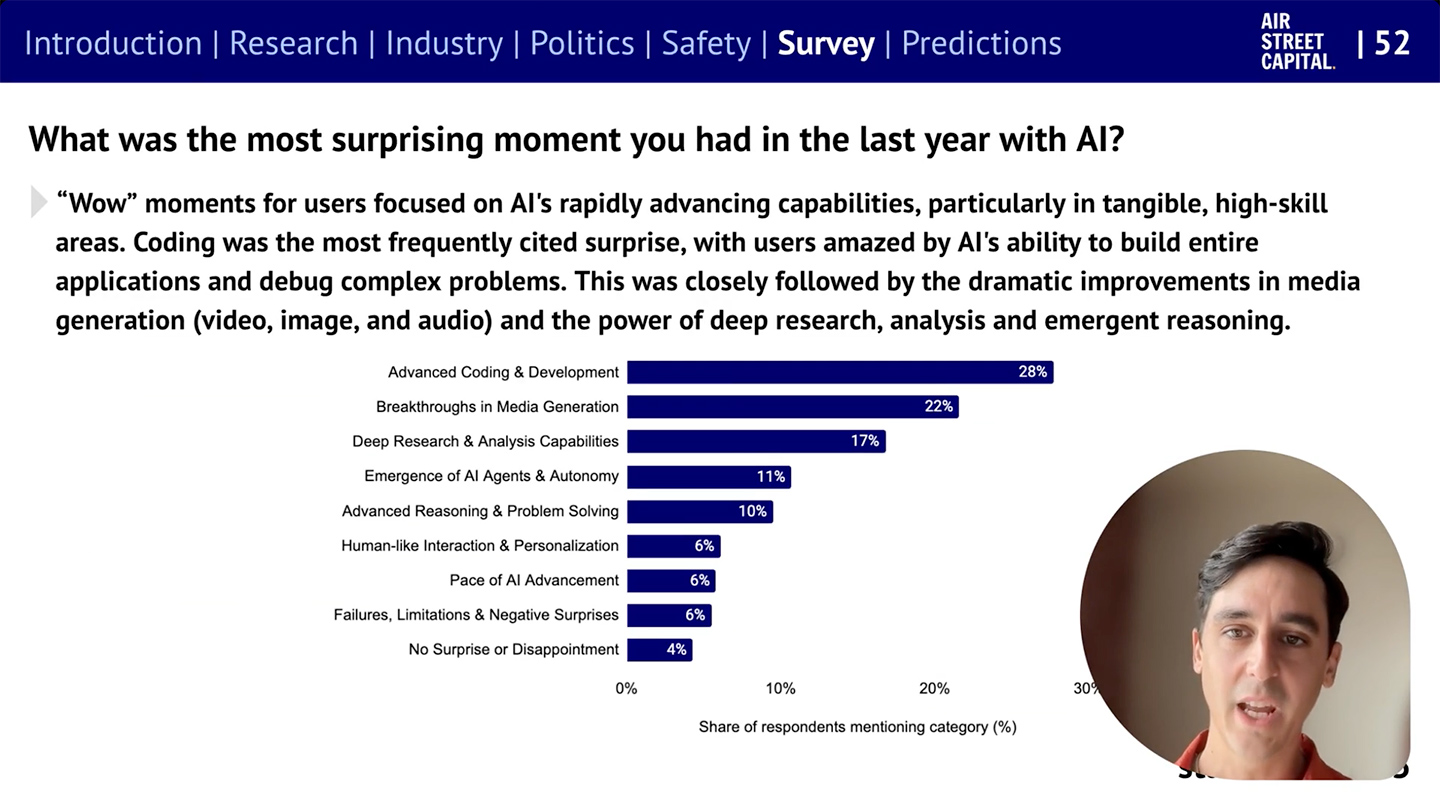

But something has been playing on my mind: we rarely evaluate these tools on what matters most for learning design, namely whether they help us build instructional content that actually produces learning.

So, I spent some time over the holiday digging into this question: do the AI video tools we use most in L&D create content that supports substantive learning?

To answer it, I took two decades of learning science research and translated it into a scoring rubric. Then I scored the four AI video generation platforms most popular among L&D professionals against that rubric.

For an AI-based tool or two as they relate to higher ed, see:

5 new tools worth trying — from wondertools.substack.com by Jeremy Kaplan

YouTube to NotebookLM: Import a Whole Playlist or Channel in One Click

YouTube to NotebookLM is a remarkably useful new Chrome extension that lets you bulk-add any YouTube playlists, channels, or search results into NotebookLM for AI-powered analysis.

…

What to try

- Find or create YouTube playlists on topics of interest. Then use this extension to ingest those playlists into NotebookLM. The videos are automatically indexed, and within minutes you can create reports, slides, and infographics to enhance your learning.

- Summarize a playlist or channel with an audio or video overview. Or create quizzes, flash cards, data tables, or mind maps to explore a batch of YouTube videos. Or have a chat in NotebookLM with your favorite video channel. Check my recent post for some YouTube channels to try.