Claude is quietly becoming the go-to AI tool for learning designers. Here’s a 101 guide. — from Linkedin.com by Dr. Philippa Hardman

Report: No Foolproof Method Exists for Detecting AI-Generated Media — from campustechnology.com by Chris Paoli

Key Takeaways

- Microsoft Research warns no foolproof method exists for detecting AI-generated media reliably.

- C2PA provenance, watermarking, and fingerprinting each face security and reversal attack risks.

- Combined high-confidence authentication and public education are crucial for AI media integrity.

L&D Global Sentiment Survey 2026 — from linkedin.com by Donald H. Taylor

“But what’s happening right now is exponential.” — from linkedin.com by Josh Cavalier

Excerpt:

I need to be honest with you. I’ve been running experiments this week with Claude Code and Opus 4.6, and we have reached the precipice in the collapse of time required to produce high-quality text-based ID outputs.

This includes performance consulting reports, learning needs analyses, action mapping, scripts, storyboards, facilitator guides, rubrics, and technical specs.

I just mapped the entire performance consulting process into a multimodal AI integration architecture (diagram image). Every phase. Entry and contracting. Performance analysis. Cause analysis. Solution design. Implementation. Evaluation. Thirty files. System specifications for each. The next step is to vet out each “skill” with an expert performance consultant.

Then I attempted a learning output: an 8-module course built with a cognitive scaffold that moves beyond content delivery to facilitate deliberate practice, meaning-making, and guided reflection within the learner’s own context.

The result:

AI and human-centered learning — from linkedin.com by Patrick Blessinger

Democratizing opportunities

AI adaptive learning tools can adjust instruction in real time. They have the potential to provide a more personalized learning experience, but only if used properly.

The California State University system uses ChatGPT Edu (OpenAI, 2025). Students use it for AI-assisted tutoring, study aids, and writing support. These resources provide 24/7 availability of subject-matter expertise tailored to students’ learning needs. It is not a replacement for professors. Rather, it extends the reach of mentorship by reducing access barriers.

However, we must proceed with intellectual humility and ethical responsibility. Even though AI can customize messages, it cannot replace the encouragement of a teacher or professor, or the social and emotional aspects of learning. It’s at the intersection of humanistic values and knowledge development that education must find its balance.

Something Big Is Happening — from shumer.dev by Matt Shumer; see also the item below from the BIG Questions Institute, which is where I found this article

I’ve spent six years building an AI startup and investing in the space. I live in this world. And I’m writing this for the people in my life who don’t… my family, my friends, the people I care about who keep asking me “so what’s the deal with AI?” and getting an answer that doesn’t do justice to what’s actually happening. I keep giving them the polite version. The cocktail-party version. Because the honest version sounds like I’ve lost my mind. And for a while, I told myself that was a good enough reason to keep what’s truly happening to myself. But the gap between what I’ve been saying and what is actually happening has gotten far too big. The people I care about deserve to hear what is coming, even if it sounds crazy.

…

They’ve now done it. And they’re moving on to everything else.

The experience that tech workers have had over the past year, of watching AI go from “helpful tool” to “does my job better than I do”, is the experience everyone else is about to have. Law, finance, medicine, accounting, consulting, writing, design, analysis, customer service. Not in ten years. The people building these systems say one to five years. Some say less. And given what I’ve seen in just the last couple of months, I think “less” is more likely.

…

The models available today are unrecognizable from what existed even six months ago. The debate about whether AI is “really getting better” or “hitting a wall” — which has been going on for over a year — is over. It’s done. Anyone still making that argument either hasn’t used the current models, has an incentive to downplay what’s happening, or is evaluating based on an experience from 2024 that is no longer relevant. I don’t say that to be dismissive. I say it because the gap between public perception and current reality is now enormous, and that gap is dangerous… because it’s preventing people from preparing.

What “Something Big Is Happening” Means for Schools — from/by the BIG Questions Institute

Matt Shumer’s newsletter post Something Big Is Happening was read over 80 million times within a week of its publication on February 9.

…

Still, it’s worth reading Shumer’s post. Given the claims and warnings in Something Big Is Happening (and countless other articles), how would you truly, honestly respond to these questions:

- What will the purpose of school be in 5 years?

- What are we doing now that we must leave behind right away?

- What can we leave behind gradually?

- What does rigor look like in this AI-powered world?

- Does our strategy look like making adjustments at the margins or are we preparing our students for a fundamental shift?

- What is our definition of success? How do the implications of AI and jobs (and other important forces, from geopolitical shifts and climate change, to mental health needs and shifting generational values) impact the outcomes we prioritize? What is the story of success we want to pass on to our students and wider community?

Claude Code Puts Tech Workers on Notice — from builtin.com by Matthew Urwin

Anthropic is flexing its new and improved Claude Code, which used vibe coding to build the company’s latest tool, Cowork. The feat has inspired both excitement and angst within the tech world as the future of work continues to grow more uncertain.

Summary:

Anthropic is becoming the leader in enterprise artificial intelligence, thanks to upgrades made to Claude Code. The coding tool practically built Anthropic’s Cowork product — sparking both excitement around the possibilities of vibe coding and fears around the job outlook of tech workers.

The Campus AI Crisis — by Jeffrey Selingo; via Ryan Craig

Young graduates can’t find jobs. Colleges know they have to do something. But what?

Only now are colleges realizing that the implications of AI are much greater and are already outrunning their institutional ability to respond. As schools struggle to update their curricula and classroom policies, they also confront a deeper problem: the suddenly enormous gap between what they say a degree is for and what the labor market now demands. In that mismatch, students are left to absorb the risk. Alina McMahon and millions of other Gen-Zers like her are caught in a muddled in-between moment: colleges only just beginning to think about how to adapt and redefine their mission in the post-AI world, and a job market that’s changing much, much faster.

“Colleges and universities face an existential issue before them,” said Ryan Craig, author of Apprentice Nation and managing director of a firm that invests in new educational models. “They need to figure out how to integrate relevant, in-field, and hopefully paid work experience for every student, and hopefully multiple experiences before they graduate.”

Kling 3.0 just launched. The best video model yet. — from heatherbcooper.substack.com by Heather Cooper

& workflows from Imagine Art 1.5 pro, Pixverse Real-Time Video & Genspark

In today’s edition:

- Kling 3.0: Everyone a Director

- Character consistency, native audio, 15-second generations & first results

- Image & Video Prompts

- Imagine Art 1.5 Pro, Genspark AI Workspace 2.0 & PixVerse Real-Time Video Workflows

Kling 3.0: Everyone a Director

Kling just dropped version 3.0, and it’s a legitimate leap forward for AI video production (Kling is the GOAT). After spending early access time testing the new capabilities, I can confirm this is the most significant update to video generation tools I’ve seen in months.

Key highlights:

- Character & Element Consistency

- Flexible Video Production

- Native Audio with Dialogue & Singing

- Enhanced Image Generation

- Professional Output

Jim VandeHei’s note to his kids: Blunt AI talk — from axios.com by CEO Jim VandeHei

Axios CEO Jim VandeHei wrote this note to his wife, Autumn, and their three kids. She suggested sharing it more broadly since so many families are wrestling with how to think and talk about AI. So here it is …

Dear Family:

I want to put to words what I’m hearing, seeing, thinking and writing about AI.

- Simply put, I’m now certain it will upend your work and life in ways more profound than the internet or possibly electricity. This will hit in months, not years.

- The changes will be fast, wide, radical, disorienting and scary. No one will avoid its reach.

I’m not trying to frighten you. And I know your opinions range from wonderment to worry. That’s natural and OK. Our species isn’t wired for change of this speed or scale.

- My conversations with the CEOs and builders of these LLMs, as well as my own deep experimentation with AI, have shaken and stirred me in ways I never imagined.

All of you must figure out how to master AI for any specific job or internship you hold or take. You’d be jeopardizing your future careers by not figuring out how to use AI to amplify and improve your work. You’d be wise to replace social media scrolling with LLM testing.

Be the very best at using AI for your gig.

Also relevant/see:

Anthropic unveils Claude legal plugin and causes market meltdown — from legaltechnology.com

Generative AI vendor Anthropic has unveiled a legal plugin that helps customise its large language model Claude for legal tasks such as document review, sending public legal software stocks into an ensuing spin today (3 February).

…

Anthropic entering the legal tech fray comes as part of the launch of a number of different plugins that help users instruct Claude on how to get work done and what tools and data to pull from. A sales plugin, for example, could connect Claude to your CRM and knowledge base to help with prospect research and follow-ups. The legal plugin is described as being capable of, for example, reviewing documents, flagging risks, NDA triage, and tracking compliance. The significance is that Anthropic is shifting from model supplier to the application layer and workflow owner.

The announcement is hitting public publishing and legal software companies hard.

Also related/see:

Anthropic’s Legal Plugin for Claude Cowork May Be the Opening Salvo In A Competition Between Foundation Models and Legal Tech Incumbents — from lawnext.com by Bob Ambrogi

Two weeks after introducing a new general-purpose “agentic” work mode called Claude Cowork, Anthropic has now rolled out a legal plugin aimed squarely at the legal workflows of in-house counsel, including contract review, NDA triage, compliance checks, briefings and templated responses.

It is configurable to an organization’s own playbook and risk tolerances, and Anthropic explicitly frames it as assistance, not advice, cautioning that outputs should be reviewed by licensed attorneys.

It may sound like just another feature drop in a crowded AI market. But for legal tech, it is landing more like a tsunami than a drop. For the first time, a foundation-model company is packaging a legal workflow product directly into its platform, rather than merely supplying an API to legal-tech vendors.

From Rooms to Ecosystems: When Connection Becomes the Catalyst

Some gatherings change not just in size, but in meaning. What started as a small, intentional space to celebrate partners has grown into a moment that reflects how an entire ecosystem has matured. Each year, the room fills with more leaders, more relationships, and more shared language about what learning can look like when people are genuinely connected. It is less about an event on the calendar and more about what it represents: an education community that knows each other, trusts each other, and keeps showing up.

That kind of connection did not happen by accident. Through efforts like Get on the Bus, hosted by the Ewing Marion Kauffman Foundation, networking for education leaders has shifted from transactional to relational. Students lead. Stories anchor the work. Conversations happen across tables, sectors, and roles. System leaders, intermediaries, industry partners, and civic organizations are not passing business cards. They are building shared understanding and social capital that lasts long after the room clears.

This week’s newsletter carries that same energy. You will find examples of learning that travels beyond buildings, leadership conversations grounded in real tensions, and models that reflect what becomes possible when ecosystems are aligned. When people feel connected to one another and to a common purpose, the work gets clearer, stronger, and more human. That sense of belonging is not just powerful. It is foundational to what comes next.

Town Hall Recap: What’s Next in Learning 2026 — from gettingsmart.com by Tom Vander Ark, Nate McClennen, Shawnee Caruthers, Victoria Andrews

As we enter 2026, the Getting Smart team is diving deep into the convergence of human potential and technological opportunity. Our annual Town Hall isn’t just a forecast—it’s a roadmap for the year ahead. We will explore how human-centered AI is reshaping pedagogy, the power of participation, and the new realities of educational leadership. Join us as we define the new dispositions for future-ready educators and discover how to build meaningful, personalized pathways for every student.

Farewell to Traditional Universities | What AI Has in Store for Education

Premiered Jan 16, 2026

Description:

What if the biggest change in education isn’t a new app… but the end of the university monopoly on credibility?

Jensen Huang has framed AI as a platform shift—an industrial revolution that turns intelligence into infrastructure. And when intelligence becomes cheap, personal, and always available, education stops being a place you go… and becomes a system that follows you. The question isn’t whether universities will disappear. The question is whether the old model—high cost, slow updates, one-size-fits-all—can survive a world where every student can have a private tutor, a lab partner, and a curriculum designer on demand.

This video explores what AI has in store for education—and why traditional universities may need to reinvent themselves fast.

In this video you’ll discover:

- How AI tutors could deliver personalized learning at scale

- Why credentials may shift from “degrees” to proof-of-skill portfolios

- What happens when the “middle” of studying becomes automated

- How universities could evolve: research hubs, networks, and high-trust credentialing

- The risks: cheating, dependency, bias, and widening inequality

- The 3 skills that become priceless when information is everywhere: judgment, curiosity, and responsibility

From DSC:

There appears to be another, similar video with a different premiere date and length, so I’m including that recording here as well:

The End of Universities as We Know Them: What AI Is Bringing

Premiered Jan 27, 2026

What if universities don’t “disappear”… but lose their monopoly on learning, credentials, and opportunity?

AI is turning education into something radically different: personal, instant, adaptive, and always available. When every student can have a 24/7 tutor, a writing coach, a coding partner, and a study plan designed specifically for them, the old model—one professor, one curriculum, one pace for everyone—starts to look outdated. And the biggest disruption isn’t the classroom. It’s the credential. Because in an AI world, proof of skill can become more valuable than a piece of paper.

This video explores the end of universities as we know them: what AI is bringing, what will break, what will survive, and what replaces the traditional path.

In this video you’ll discover:

- Why AI tutoring could outperform one-size-fits-all lectures

- How “degrees” may shift into skill proof: portfolios, projects, and verified competency

- What happens when the “middle” of studying becomes automated

- How universities may evolve: research hubs, networks, high-trust credentialing

- The dark side: cheating, dependency, inequality, and biased evaluation

- The new advantage: judgment, creativity, and responsibility in a world of instant answers

FutureFit AI — helping build reskilling, demand-driven, employment, sector-based, and future-fit pathways, powered by AI

The above item was from Paul Fain’s recent posting, which includes the following excerpt:

The platform is powered by FutureFit AI, which is contributing the skills-matching infrastructure and navigation layer. Jobseekers get personalized recommendations for best-fit job roles as well as education and training options—including internships—that can help them break into specific careers. The project also includes a focus on providing support students need to complete their training, including scholarships and help with childcare and transportation.

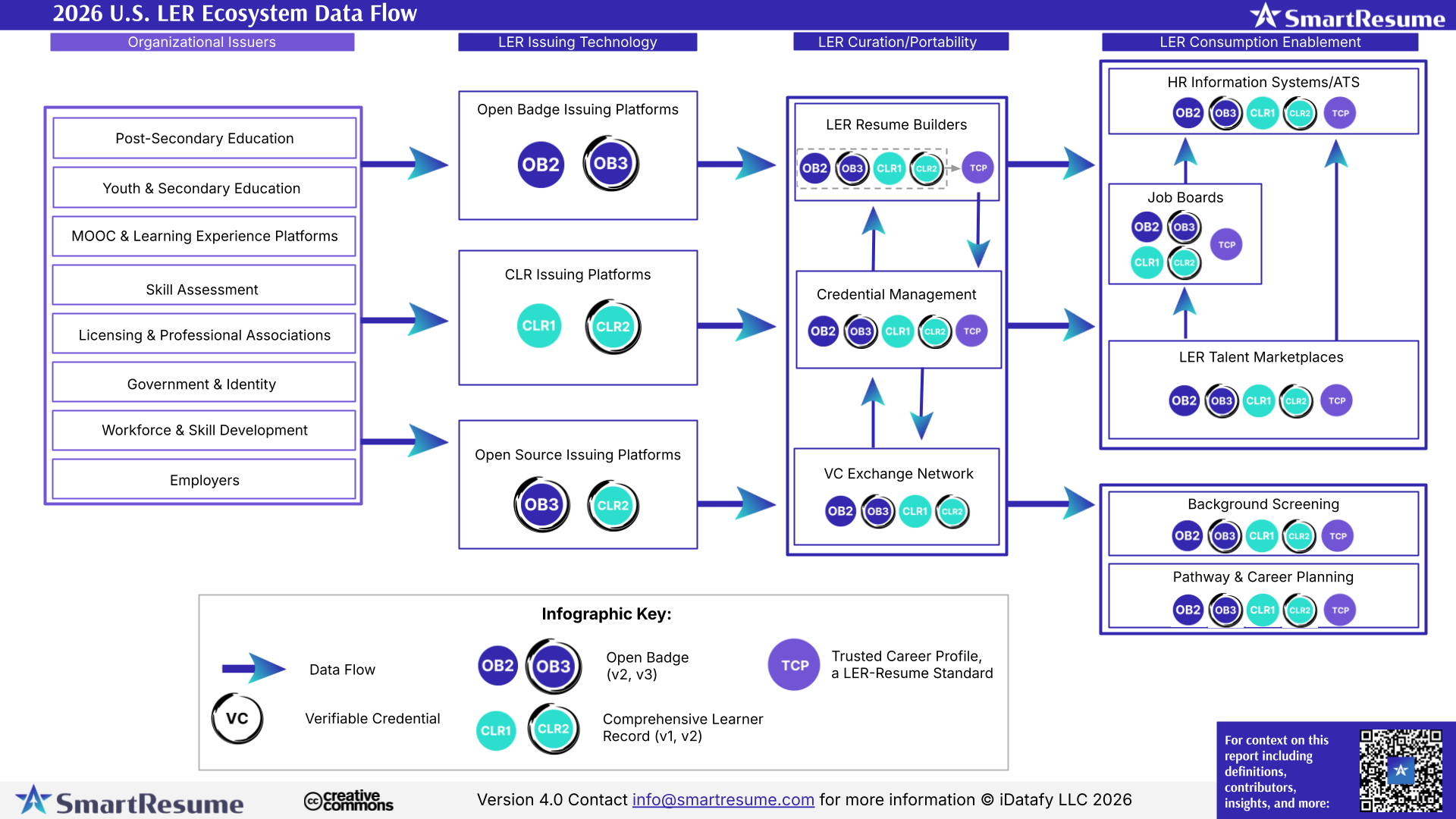

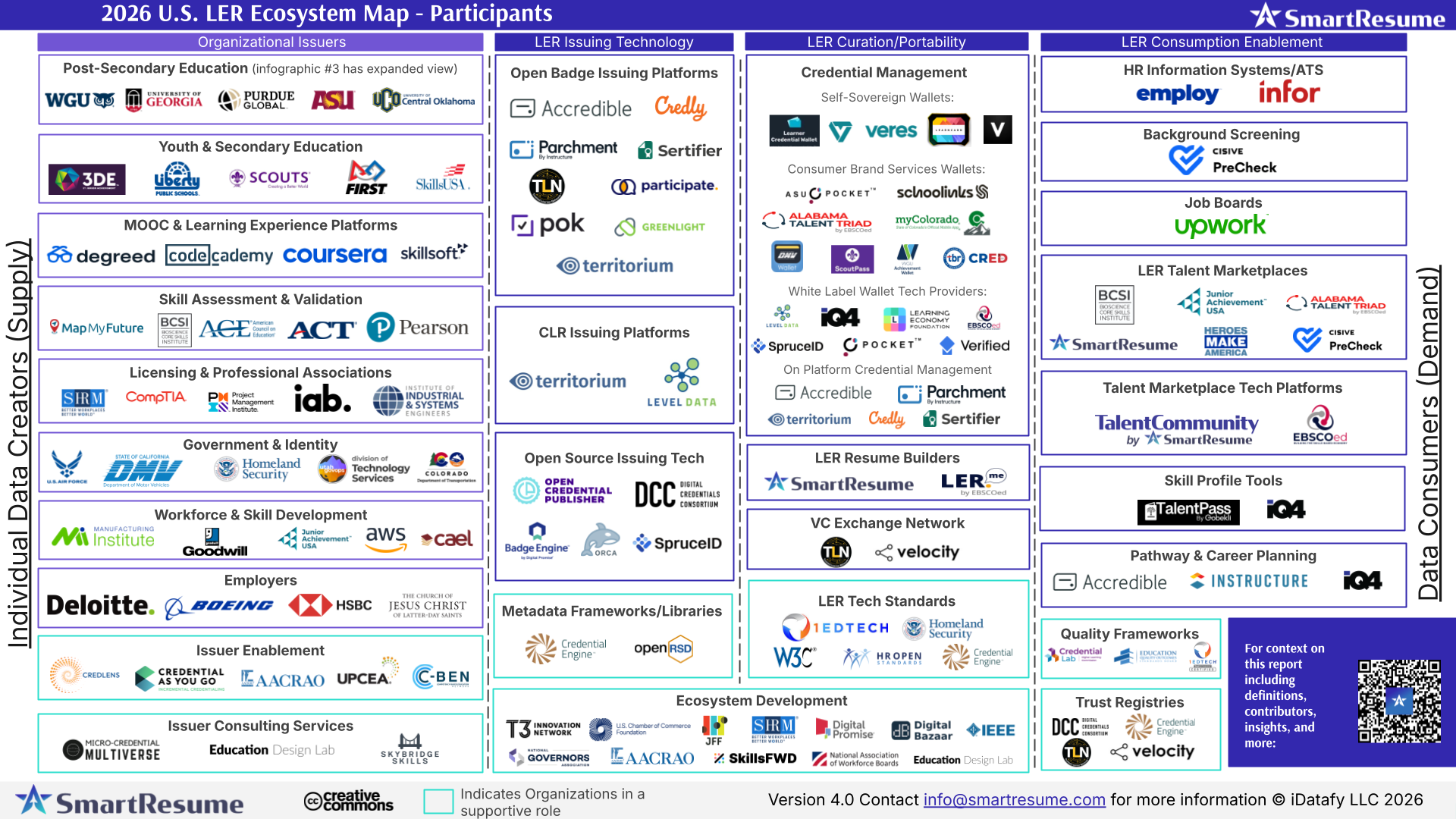

The Learning and Employment Records (LER) Report for 2026: Building the infrastructure between learning and work — from smartresume.com; with thanks to Paul Fain for this resource

Executive Summary (excerpt)

This report documents a clear transition now underway: LERs are moving from small experiments to systems people and organizations expect to rely on. Adoption remains early and uneven, but the forces reshaping the ecosystem are no longer speculative. Federal policy signals, state planning cycles, standards maturation, and employer behavior are aligning in ways that suggest 2026 will mark a shift from exploration to execution.

Across interviews with federal leaders, state CIOs, standards bodies, and ecosystem builders, a consistent theme emerged: the traditional model—where institutions control learning and employment records—no longer fits how people move through education and work. In its place, a new model is being actively designed—one in which individuals hold portable, verifiable records that systems can trust without centralizing control.

Most states are not yet operating this way. But planning timelines, RFP language, and federal signals indicate that many will begin building toward this model in early 2026.

As the ecosystem matures, another insight becomes unavoidable: records alone are not enough. Value emerges only when trusted records can be interpreted through shared skill languages, reused across contexts, and embedded into the systems and marketplaces where decisions are made.

Learning and Employment Records are not a product category. They are a data layer—one that reshapes how learning, work, and opportunity connect over time.

This report is written for anyone seeking to understand how LERs are beginning to move from concept to practice. Whether readers are new to the space or actively exploring implementation, the report focuses on observable signals, emerging patterns, and the practical conditions required to move from experimentation toward durable infrastructure.

…

“The building blocks for a global, interoperable skills ecosystem are already in place. As education and workforce alignment accelerates, the path toward trusted, machine-readable credentials is clear. The next phase depends on credentials that carry value across institutions, industries, states, and borders; credentials that move with learners wherever their education and careers take them. The question now isn’t whether to act, but how quickly we move.”

– Curtiss Barnes, Chief Executive Officer, 1EdTech

The above item was from Paul Fain’s recent posting, which includes the following excerpt:

SmartResume just published a guide for making sense of this rapidly expanding landscape. The LER Ecosystem Report was produced in partnership with AACRAO, Credential Engine, 1EdTech, HR Open Standards, and the U.S. Chamber of Commerce Foundation. It was based on interviews and feedback gathered over three years from 100+ leaders across education, workforce, government, standards bodies, and tech providers.

The tools are available now to create the sort of interoperable ecosystem that can make talent marketplaces a reality, the report argues. Meanwhile, federal policy moves and bipartisan attention to LERs are accelerating action at the state level.

“For state leaders, this creates a practical inflection point,” says the report. “LERs are shifting from an innovation discussion to an infrastructure planning conversation.”