Technology predictions for the second half of the decade — from techcrunch.com by Lance Smith

Excerpts:

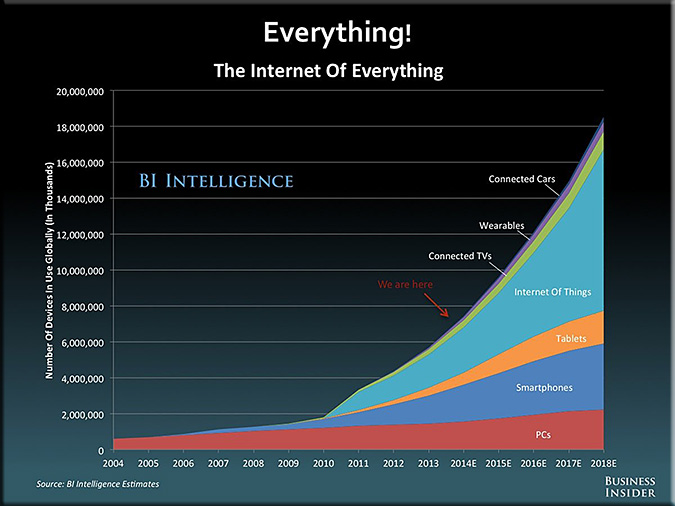

- Big Data and IoT evolve into automated information sharing

- Self-driving vehicles become mainstream

- The appearance of artificially intelligent assistants

- Real-time agility through data virtualization

EdTech trends for the coming years — from edtechreview.in

Excerpts:

EdTech is about to explode. The coming technology and the new trends on the rise can’t but forecast an extensive technology adoption in schools all around the globe.

Specific apps, systems, codable gadgets and the adaptation of general use elements to the school environment are engaging teachers and opening up the way to new pedagogical approaches. And while we are scratching the surface of some of them, others have just started to buzz persistently.

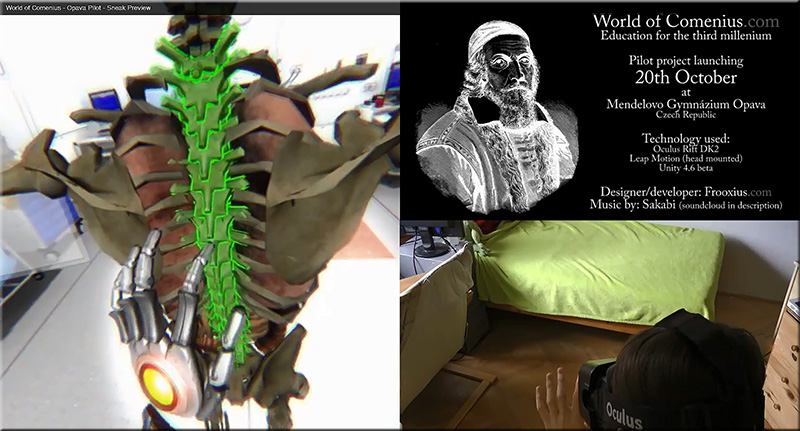

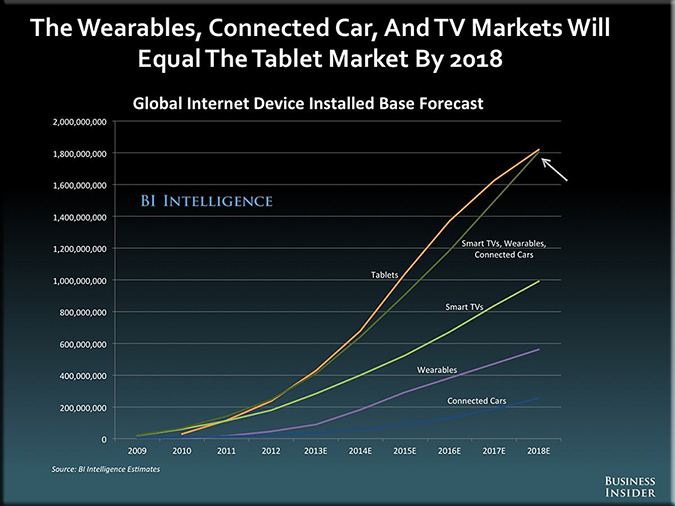

- Wearables + Nano/Micro Technology + the Internet of Things

- 3D + 4D printing

- Big Data + Data Mining

- Mobile Learning

- Coding

- Artificial Intelligence + Deep Learning

    - Adaptive learning: based on a student’s behaviour and results, an intelligent assistant can predict and readapt the learning path to those necessities. Combined with biometrics and the ubiquitous persona (explained below), a student could have the best experience ever.

    - Automatic courses on the fly, with contents collected by intelligent searching systems (data mining).

    - Virtual tutors.

- The Ubiquitous Persona + Gamification + Social Media Learning

- Specialised Staff in Schools

Phones and wearables will spur tenfold growth in wireless data by 2019 — from recode.net

Excerpts:

Persistent growth in the use of smartphones, plus the adoption of wireless wearable devices, will cause the total amount of global wireless data traffic to rise by 10 times its current levels by 2019, according to a forecast by networking giant Cisco Systems out [on 2/3/15].

The forecast, which Cisco calls its Visual Networking Index, is based in part on the growth of wireless traffic during 2014, which Cisco says reached 30 exabytes, the equivalent of 30 billion gigabytes. If growth patterns remain consistent, Cisco’s analysts reckon, the wireless portion of traffic crossing the global Internet will reach 292 exabytes by the close of the decade.
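As a quick sanity check on the figures quoted above, here is a minimal sketch of the arithmetic. It assumes the 292-exabyte forecast refers to 2019 (as in the headline) and that the units are decimal, so 1 exabyte equals 1 billion gigabytes. The function and variable names are illustrative only and do not come from Cisco's report.

```python
# Figures quoted in the excerpt above, in exabytes (EB) of wireless traffic.
TRAFFIC_2014_EB = 30
TRAFFIC_FORECAST_EB = 292

# Assumption: the forecast horizon is 2019, matching the article's headline.
YEARS = 2019 - 2014

# Assumption: decimal (SI) units, so 1 EB = 10**9 GB.
GB_PER_EB = 10**9


def growth_factor(start: float, end: float) -> float:
    """Return how many times larger `end` is than `start`."""
    return end / start


def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Return the constant yearly growth rate that turns `start` into `end`."""
    return (end / start) ** (1 / years) - 1


factor = growth_factor(TRAFFIC_2014_EB, TRAFFIC_FORECAST_EB)
rate = implied_annual_growth(TRAFFIC_2014_EB, TRAFFIC_FORECAST_EB, YEARS)

print(f"2014 traffic in gigabytes: {TRAFFIC_2014_EB * GB_PER_EB:,}")
print(f"Growth factor: {factor:.1f}x")
print(f"Implied annual growth: {rate:.1%}")
```

Under these assumptions the growth factor comes out just under ten, which is consistent with the "tenfold" headline, and the implied compound growth is a little under 60 percent per year.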

9 ed tech trends to watch in 2015 — from the Jan/Feb edition of Campus Technology Magazine

- Learning spaces

- Badges

- Gamification

- Analytics

- 3D Printing

- Openness

- Digital

- Consumerization

- Adaptive & Personalized Learning

Even though I’ve mentioned it before, I’ll mention it again here because it fits the theme of this posting:

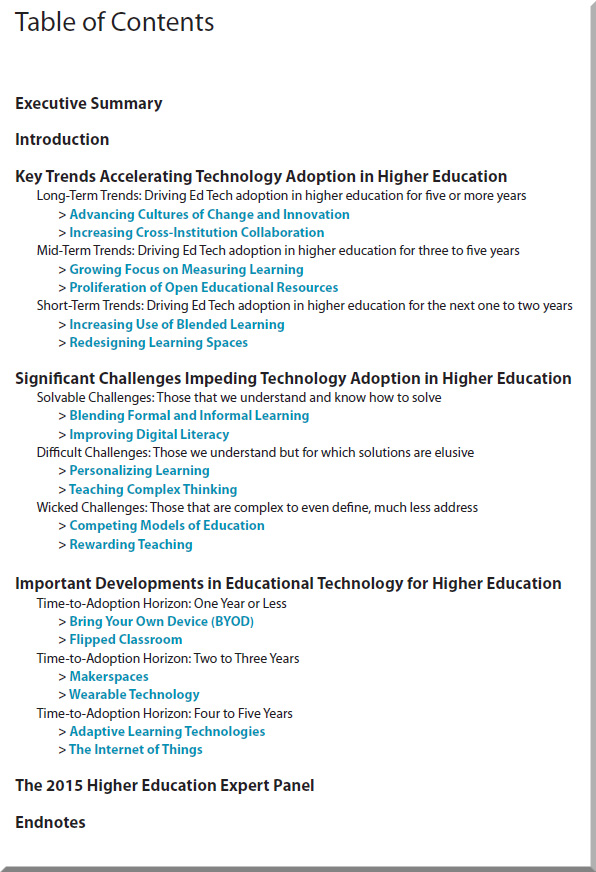

NMC Horizon Report > 2015 Higher Education Edition — from nmc.org

Excerpt:

What is on the five-year horizon for higher education institutions? Which trends and technologies will drive educational change? What are the challenges that we consider as solvable or difficult to overcome, and how can we strategize effective solutions? These questions and similar inquiries regarding technology adoption and educational change steered the collaborative research and discussions of a body of 56 experts to produce the NMC Horizon Report: 2015 Higher Education Edition, in partnership with the EDUCAUSE Learning Initiative (ELI). The NMC Horizon Report series charts the five-year horizon for the impact of emerging technologies in learning communities across the globe. With more than 13 years of research and publications, it can be regarded as the world’s longest-running exploration of emerging technology trends and uptake in education.

From DSC:

Speaking of trends…although this item isn’t necessarily technology related, I’m going to include it here anyway:

Career trends students should be watching in 2015 — from hackcollege.com by

Excerpt:

Students Need to Pay Attention to Broader Trends, and Get Ready.

For better or worse, the post-college world is changing.

According to a variety of analysis sites, including Forbes, Time, and Bing Predicts, more and more of the workforce will be impacted by increased entrepreneurship, freelancing, work-from-home trends, and non-traditional career paths.

These experts are saying that hiring practices will shift, meaning that students need to prepare LinkedIn profiles and online portfolios, work at internships, and network to build relationships with potential future employers.

———-

Addendum on 3/6/15:

Self-driving car technology could end up in robots — from pcworld.com by Fred O’Connor

Excerpt:

The development of self-driving cars could spur advancements in robotics and cause other ripple effects, potentially benefitting society in a variety of ways.

Autonomous cars as well as robots rely on artificial intelligence, image recognition, GPS and processors, among other technologies, notes a report from consulting firm McKinsey. Some of the hardware used in self-driving cars could find its way into robots, lowering production costs and the price for consumers.

Self-driving cars could also help people grow accustomed to other machines, like robots, that can complete tasks without the need for human intervention.

Addendum on 3/6/15:

Top 5 Emerging Technologies In 2015 — from wtvox.com

Excerpt:

1) Robotics 2.0

2) Neuromorphic Engineering

3) Intelligent Nanobots – Drones

4) 3D Printing

5) Precision Medicine

![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)