K-12 and higher education are considered separate systems. What if they converged? — from edsurge.com by Jeff Young

Excerpt:

Education in America is a tale of two systems. There’s K-12 education policy and practice, but a separate set of rules—and a separate culture—for higher education. A new book argues that it doesn’t have to be that way.

In “The Convergence of K-12 and Higher Education: Policies and Programs in a Changing Era,” two education professors point out potential benefits of taking a more holistic view to American education (in a volume that collects essays from other academics). They acknowledge that there are potential pitfalls, noting that even well-intentioned systems can have negative consequences. But they argue that “now more than ever, K-12 and higher education need to converge on a shared mission and partner to advance the individual interests of American students and the collective interests of the nation.”

EdSurge recently talked with one of the book’s co-editors, Christopher Loss, associate professor of public policy and higher education at Vanderbilt University. The conversation has been edited and condensed for clarity.

Which is to say that we have tended not to think of the sector as most people actually experience it—which is one continuous ladder, one that often is missing rungs, and is sometimes difficult to climb, depending on a whole host of different factors. So, I think that the research agenda proposed by Pat and I and our collaborators is one that actually gets much closer to the experience that most people actually are having with the educational sector.

From DSC:

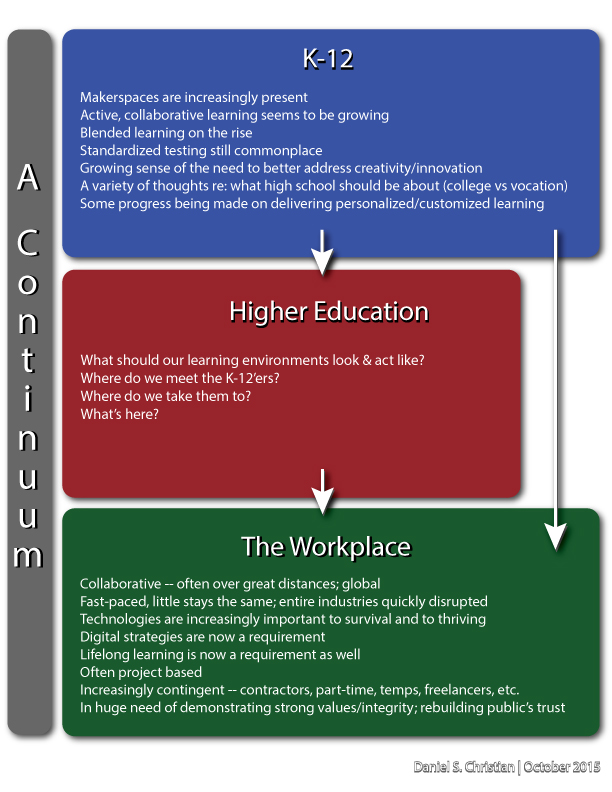

This is a great 50,000-foot-level question, and it reminds me of a graphic I created a couple of years ago about the continuum that we need to address more holistically, especially as lifelong learning becomes increasingly critical for members of today’s workforce.

In actuality, the lines between high school and college continue to blur. Many students are already taking AP courses and/or are dually enrolled at colleges/universities. Some high school graduates already have enough credits to make serious headway toward a college degree.

The other thing that I see over and over again is that K-12 is out-innovating higher education and is better at communicating with other educators than most of higher education is. As an example, go look at some of the K-12 bloggers and educators out there on Twitter. They have tens of thousands of followers, many of whom are other K-12 educators. They are sharing content, best practices, questions, issues/solutions, new pedagogies, new technologies, live communication/training sessions, etc. with each other. Some examples include:

- Eric Sheninger: 127K followers

- Alice Keeler: 110K followers

- Kyle Pace: 63.6K followers

- Monica Burns: 44.5K followers

- Lisa Nielsen: 32.4K followers

The vast majority of the top bloggers in higher ed, and of those who are regularly active on social media within higher education, do not come close to those kinds of numbers.

What that tells me is that while many K-12 educators are on social media sharing knowledge with each other through these relatively new channels, the vast majority of administrators, faculty members, and staff working within higher education are not doing that. That is, they are not regularly contributing streams of content to Twitter.

That said, there are a few people who are trying to “cross over the lines” between the two systems and converse with folks from both higher ed and K-12. We need more of these folks who are pulse-checking the other systems out there in order to create a more holistic, effective continuum.

I wonder about the corporate world here as well. Are folks from the training departments and from the learning & development groups pulse-checking the ways that today’s students are being educated within higher education? Within K-12? Do they have a good sense of what the changing expectations of their new employees look like (in terms of how they prefer to learn)?

We can do better. That’s why I appreciated the question raised within Jeff’s article.

Is it time to back up a major step and practice design thinking on the entire continuum of lifelong learning?

Daniel Christian