YouTube Contest, “With Justice for All,” Seeks Submissions from Students About the Effect of Covid-19 and Recent Tragedies on Their Educational Experience

Prizes include 11 scholarships for students who best address the question, “How has your school delivered on the promise of equal access and educational excellence, particularly during these challenging times?”

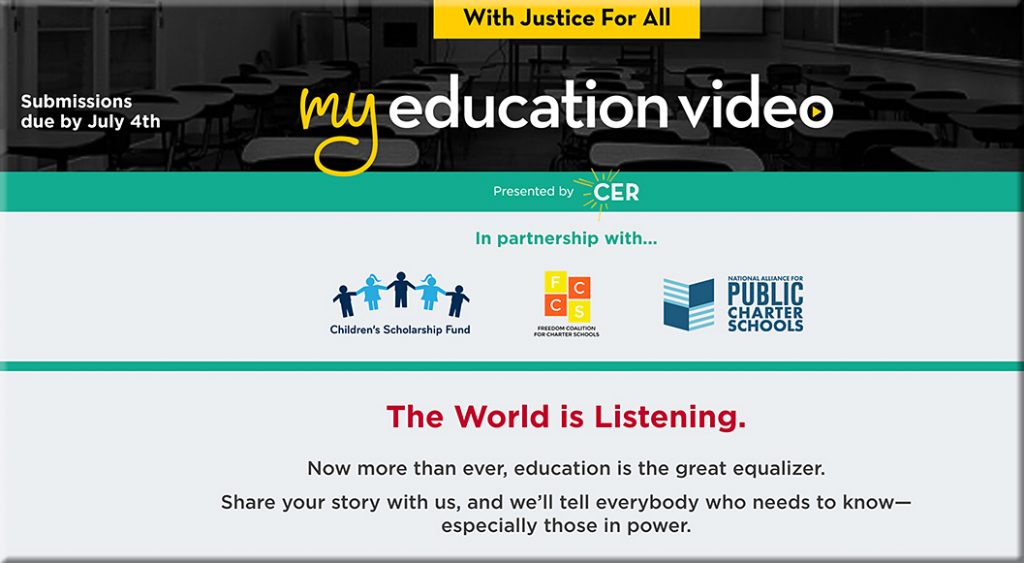

WASHINGTON, D.C. — The Center for Education Reform (CER), in partnership with the Freedom Coalition for Charter Schools, the Children’s Scholarship Fund, and the National Alliance for Public Charter Schools, today launched “With Justice for All,” a national YouTube contest for students.

Over the last few months, students and schools have faced significant challenges from distance learning and national tragedies. Times like these show that a great education is the most important asset a student has for changing the world.

So CER decided to ask students directly: Has your school delivered on the promise of equal access and educational excellence, particularly during these challenging times? Tell us how well your school did — or didn’t do — in providing you a great education.

“We want you to be able to take charge of your education,” said Jeanne Allen, CER’s founder and chief executive. “We want to assist you in writing the next chapter of your education story. Tell us your story, and we’ll tell everybody who needs to know, especially those in power.”

Videos must be shorter than three minutes, hashtagged with #MyEducationVideo, and submitted to MyEducationVideo.com by 11:59 PM EDT on July 4, 2020. A panel of celebrity judges will evaluate the submissions. Awards include ten $2,500 scholarships and one $20,000 scholarship to the high school or college of the student's choice. Winners will be announced during a live-streamed ceremony (date and time to be determined), and their videos may be shown to delegates at both 2020 national conventions this summer.

“We’ve designed this contest for students ages 13 and older, because we know it can be hard to get your ideas about education heard when you’re a kid,” said Allen.

For more information, visit MyEducationVideo.com.

Also see:

- The New Normal: Don’t Leave Equity Behind (video) — from er.educause.edu by Nani Jackins Park

“If we are not centering equitable student success, we’re gonna be put back decades and decades. And we’re already trying to retrofit.”