OpenAI + Figure

conversations with humans, on end-to-end neural networks:

- OpenAI is providing visual reasoning & language understanding

- Figure’s neural networks are delivering fast, low level, dexterous robot actions (thread below) pic.twitter.com/trOV2xBoax

— Brett Adcock (@adcock_brett) March 13, 2024

[Report] Generative AI Top 150: The World’s Most Used AI Tools (Feb 2024) — from flexos.work by Daan van Rossum

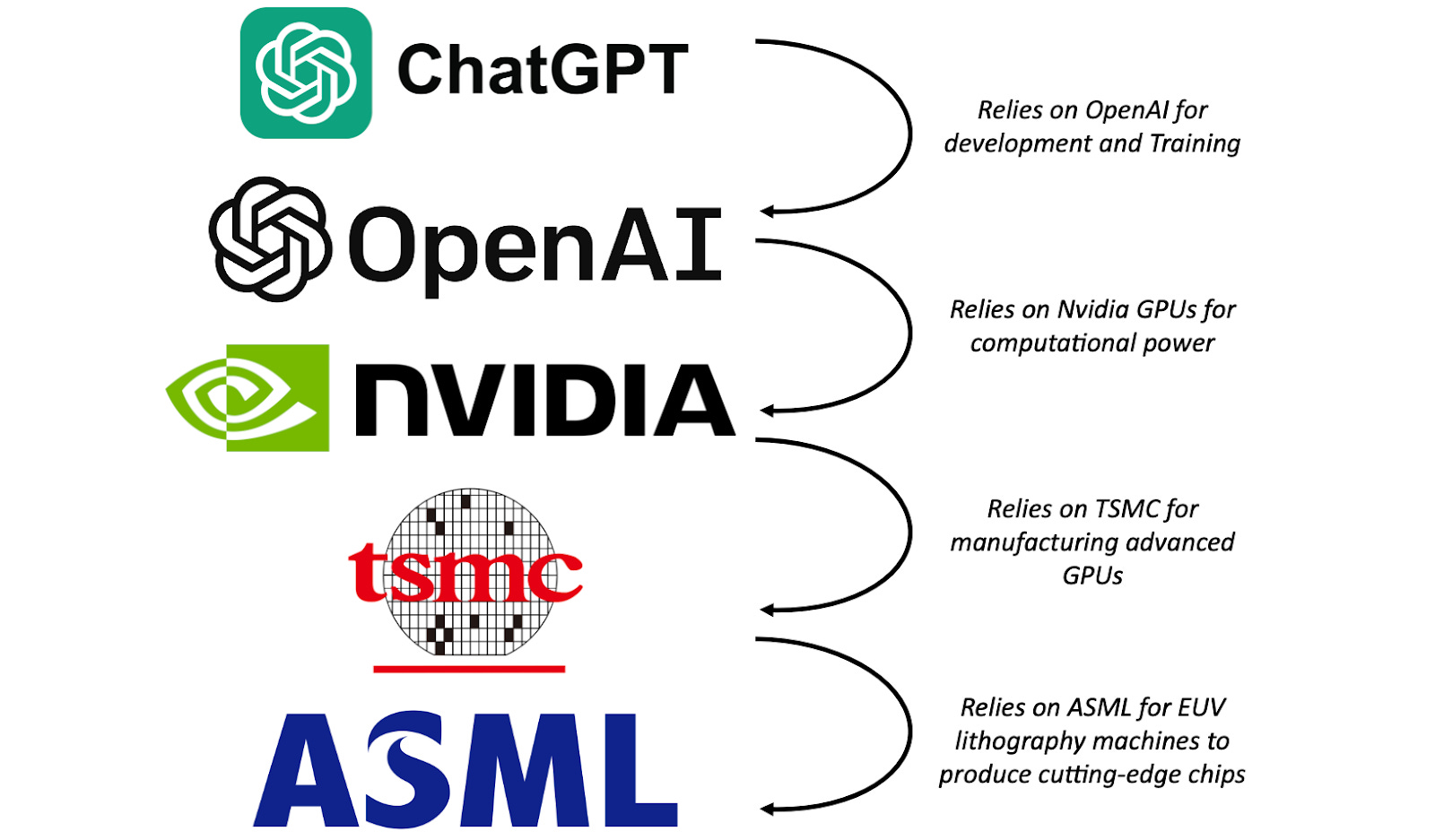

FlexOS.work surveyed Generative AI platforms to reveal which get used most. While ChatGPT reigns supreme, countless AI platforms are used by millions.

As the FlexOS research study “Generative AI at Work” concluded based on a survey amongst knowledge workers, ChatGPT reigns supreme.

…

2. AI Tool Usage is Way Higher Than People Expect – Beating Netflix, Pinterest, Twitch.

As measured by data analysis platform Similarweb based on global web traffic tracking, the AI tools in this list generate over 3 billion monthly visits.

With 1.67 billion visits, ChatGPT represents over half of this traffic and is already bigger than Netflix, Microsoft, Pinterest, Twitch, and The New York Times.


Artificial Intelligence Act: MEPs adopt landmark law — from europarl.europa.eu

- Safeguards on general purpose artificial intelligence

- Limits on the use of biometric identification systems by law enforcement

- Bans on social scoring and AI used to manipulate or exploit user vulnerabilities

- Right of consumers to launch complaints and receive meaningful explanations

The untargeted scraping of facial images from CCTV footage to create facial recognition databases will be banned © Alexander / Adobe Stock

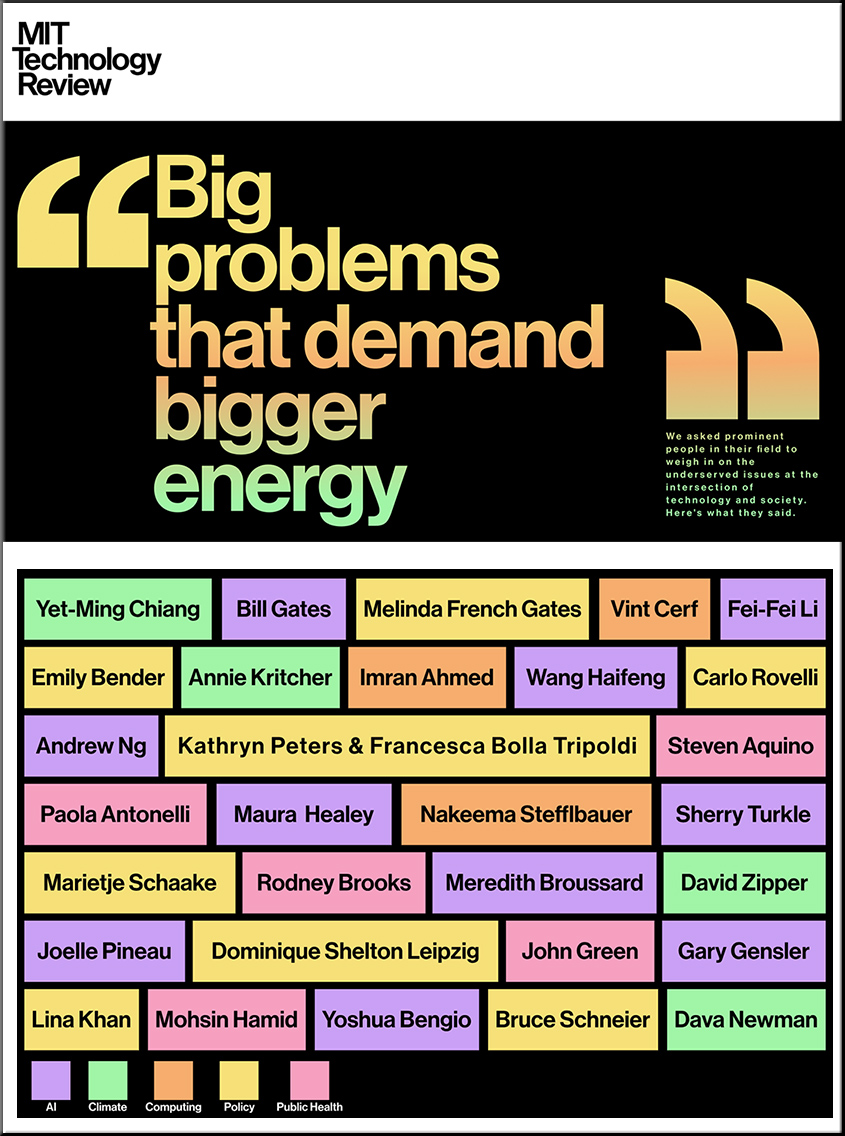

A New Surge in Power Use Is Threatening U.S. Climate Goals — from nytimes.com by Brad Plumer and Nadja Popovich

A boom in data centers and factories is straining electric grids and propping up fossil fuels.

Something unusual is happening in America. Demand for electricity, which has stayed largely flat for two decades, has begun to surge.

Over the past year, electric utilities have nearly doubled their forecasts of how much additional power they’ll need by 2028 as they confront an unexpected explosion in the number of data centers, an abrupt resurgence in manufacturing driven by new federal laws, and millions of electric vehicles being plugged in.

OpenAI and the Fierce AI Industry Debate Over Open Source — from bloomberg.com by Rachel Metz

The tumult could seem like a distraction from the startup’s seemingly unending march toward AI advancement. But the tension, and the latest debate with Musk, illuminates a central question for OpenAI, along with the tech world at large as it’s increasingly consumed by artificial intelligence: Just how open should an AI company be?

…

The meaning of the word “open” in “OpenAI” seems to be a particular sticking point for both sides — something that you might think sounds, on the surface, pretty clear. But actual definitions are both complex and controversial.

Researchers develop AI-driven tool for near real-time cancer surveillance — from medicalxpress.com by Mark Alewine; via The Rundown AI

Artificial intelligence has delivered a major win for pathologists and researchers in the fight for improved cancer treatments and diagnoses.

In partnership with the National Cancer Institute, or NCI, researchers from the Department of Energy’s Oak Ridge National Laboratory and Louisiana State University developed a long-sequenced AI transformer capable of processing millions of pathology reports to provide experts researching cancer diagnoses and management with exponentially more accurate information on cancer reporting.
