Uplimit raises stakes in corporate learning with suite of AI agents that can train thousands of employees simultaneously — from venturebeat.com by Michael Nuñez

Uplimit unveiled a suite of AI-powered learning agents today designed to help companies rapidly upskill employees while dramatically reducing administrative burdens traditionally associated with corporate training.

The San Francisco-based company announced three sets of purpose-built AI agents that promise to change how enterprises approach learning and development: skill-building agents, program management agents, and teaching assistant agents. The technology aims to address the growing skills gap as AI advances faster than most workforces can adapt.

“There is an unprecedented need for continuous learning—at a scale and speed traditional systems were never built to handle,” said Julia Stiglitz, CEO and co-founder of Uplimit, in an interview with VentureBeat. “The companies best positioned to thrive aren’t choosing between AI and their people—they’re investing in both.”

Introducing Claude for Education — from anthropic.com

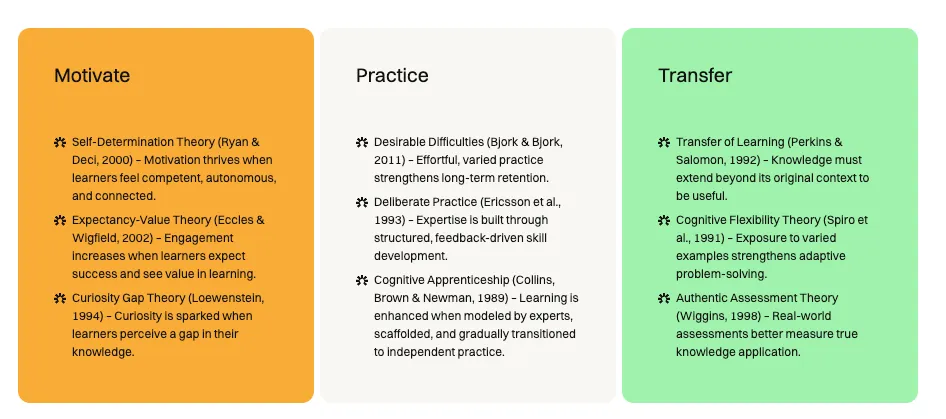

Today we’re launching Claude for Education, a specialized version of Claude tailored for higher education institutions. This initiative equips universities to develop and implement AI-enabled approaches across teaching, learning, and administration—ensuring educators and students play a key role in actively shaping AI’s role in society.

As part of announcing Claude for Education, we’re introducing:

- Learning mode: A new Claude experience that guides students’ reasoning process rather than providing answers, helping develop critical thinking skills

- University-wide Claude availability: Full campus access agreements with Northeastern University, London School of Economics and Political Science (LSE), and Champlain College, making Claude available to all students

- Academic partnerships: Joining Internet2 and working with Instructure to embed AI into teaching & learning with Canvas LMS

- Student programs: A new Claude Campus Ambassadors program along with an initiative offering API credits for student projects

A comment on this from The Rundown AI:

Why it matters: Education continues to grapple with AI, but Anthropic is flipping the script by making the tech a partner in developing critical thinking rather than an answer engine. While the controversy over its use likely isn’t going away, this generation of students will have access to the most personalized, high-quality learning tools ever.

Should College Graduates Be AI Literate? — from chronicle.com by Beth McMurtrie (behind a paywall)

More institutions are saying yes. Persuading professors is only the first barrier they face.

Last fall one of Jacqueline Fajardo’s students came to her office, eager to tell her about an AI tool that was helping him learn general chemistry. Had she heard of Google NotebookLM? He had been using it for half a semester in her honors course. He confidently showed her how he could type in the learning outcomes she posted for each class and the tool would produce explanations and study guides. It even created a podcast based on an academic paper he had uploaded. He did not feel it was important to take detailed notes in class because the AI tool was able to summarize the key points of her lectures.

Showing Up for the Future: Why Educators Can’t Sit Out the AI Conversation — from marcwatkins.substack.com with a guest post from Lew Ludwig

The Risk of Disengagement

Let’s be honest: most of us aren’t jumping headfirst into AI. At many of our institutions, it’s not a gold rush—it’s a quiet standoff. But the group I worry most about isn’t the early adopters. It’s the faculty who’ve decided to opt out altogether.

That choice often comes from a place of care. Concerns about data privacy, climate impact, exploitative labor, and the ethics of using large language models are real—and important. But choosing not to engage at all, even on ethical grounds, doesn’t remove us from the system. It just removes our voices from the conversation.

And without those voices, we risk letting others—those with very different priorities—make the decisions that shape what AI looks like in our classrooms, on our campuses, and in our broader culture of learning.

Turbocharge Your Professional Development with AI — from learningguild.com by Dr. RK Prasad

You’ve just mastered a few new eLearning authoring tools, and now AI is knocking on the door, offering to do your job faster, smarter, and without needing coffee breaks. Should you be worried? Or excited?

If you’re a Learning and Development (L&D) professional today, AI is more than just a buzzword—it’s transforming the way we design, deliver, and measure corporate training. But here’s the good news: AI isn’t here to replace you. It’s here to make you better at what you do.

The challenge is to harness its potential to build digital-ready talent, not just within your organization but within yourself.

Let’s explore how AI is reshaping L&D strategies and how you can leverage it for professional development.

5 Recent AI Notables — from automatedteach.com by Graham Clay

1. OpenAI’s New Image Generator

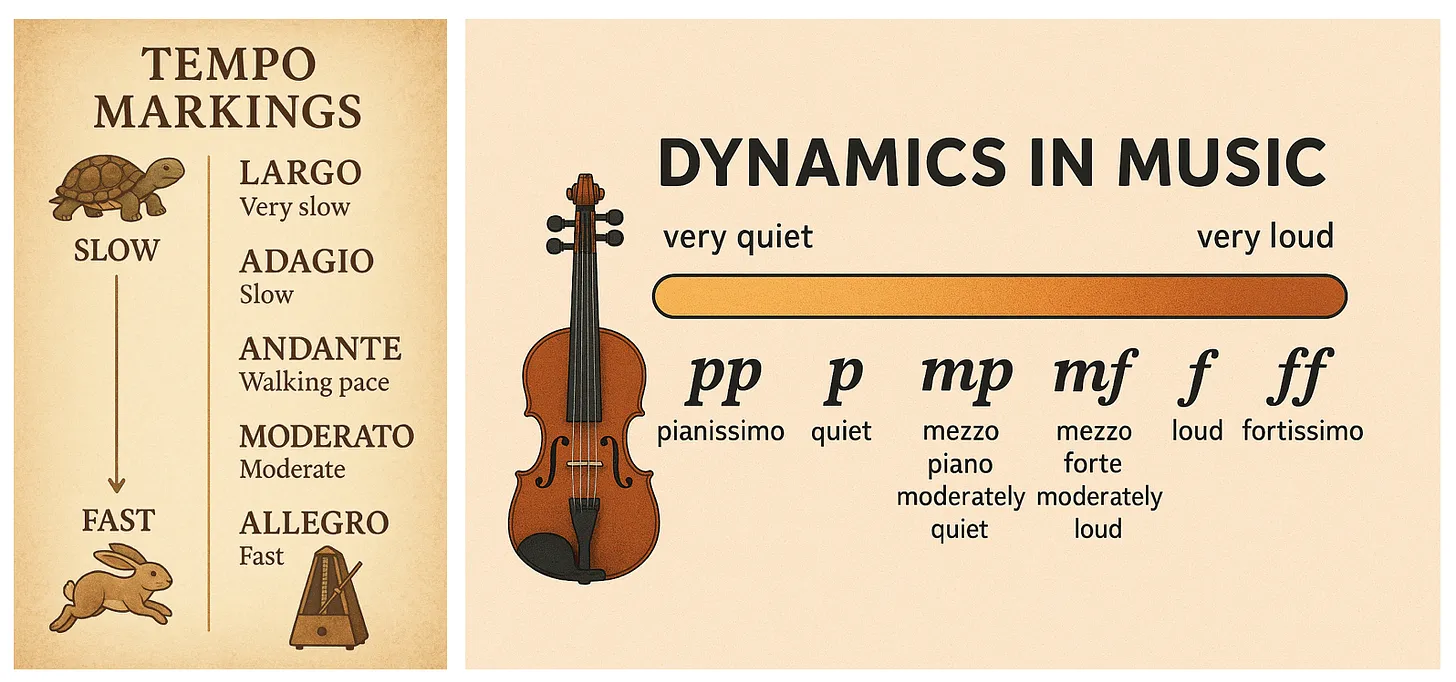

What Happened: OpenAI integrated a much more powerful image generator directly into GPT-4o, making it the default image creator in ChatGPT. Unlike previous image models, this one excels at accurately rendering text in images, precise visualization of diagrams/charts, and multi-turn image refinement through conversation.

Why It’s Big: For educators, this represents a significant advancement in creating educational visuals, infographics, diagrams, and other instructional materials with unprecedented accuracy and control. It’s not perfect, but you can now quickly generate custom illustrations that accurately display mathematical equations, chemical formulas, or process workflows — previously a significant hurdle in digital content creation — without requiring graphic design expertise or expensive software. This capability dramatically reduces the time between conceptualizing a visual aid and implementing it in course materials.

The 4 AI modes that will supercharge your workflow — from aiwithallie.beehiiv.com by Allie K. Miller

The framework most people and companies won’t discover until 2026