We closed the fifth annual Student Podcast Challenge with more than 2,900 entries!

…

So today, I wanted to share something I’m also personally proud of: an elaborate resources page for student podcasting that our team published earlier this year. My big boss, Steve Drummond, named it “Sound Advice: The NPR guide to student podcasting.” And, again, this isn’t just for Student Podcast Challenge participants. We have guides from NPR and more for anyone interested in starting a podcast!

Here’s a sampler of some of my favorite resources:

- Using sound: Teachers, here’s a lovely video you can play for your class! It’s also a fun watch for visual learners. In this video, veteran NPR correspondent Don Gonyea walks you through building your own recording studio: a pillow fort! (And yes, this is a trick we actually use at NPR!)

- Voice coaching: Speaking into a microphone is hard, even for our radio veterans. In this video, NPR voice coach Jessica Hansen and our training team share a few vocal exercises that will help you sound more natural in front of a mic! I watched this video myself before recording my first radio story, and I highly recommend it to everyone!

- Life Kit episode on podcasting: In this episode from NPR’s Life Kit, Lauren Migaki, our very own NPR Ed senior producer, shares tips from podcast producers across NPR who work on all your favorite shows, including Code Switch, Planet Money and more! It’s an awesome listen for a class or on your own!

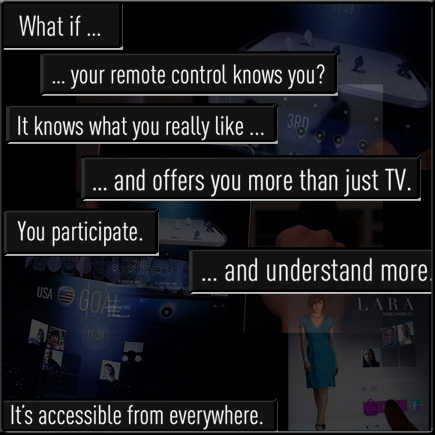
