DC: This is the type of thing that might impact the interface design of future #AI related applications. If so, our current efforts to refine prompts may have a shorter lifespan than we expected. https://t.co/MuNMf2kGwL

— Daniel Christian (he/him/his) (@dchristian5) June 26, 2023

- The GPT-4 Browser That Will Change Your Search Game — from noise.beehiiv.com by Alex Banks

Why Microsoft Has The ‘Edge’ On Google

Excerpts:

Microsoft has launched a GPT-4 enhanced Edge browser.

By integrating OpenAI’s GPT-4 technology with Microsoft Edge, you can now use ChatGPT as a copilot in your Bing browser. This delivers superior search results, generates content, and can even transform your copywriting skills (read on to find out how).

Benefits mentioned include: Better Search, Complete Answers, and Creative Spark.

The new interactive chat feature means you can get the complete answer you are looking for by refining your search by asking for more details, clarity, and ideas.

From DSC:

I have to say that since the late 90’s, I haven’t been a big fan of web browsers from Microsoft. (I don’t like how Microsoft unfairly buried Netscape Navigator and the folks who had out-innovated them during that time.) As such, I don’t use Edge, so I can’t fully comment on the above article.

But I do have to say that this is the type of thing that may make me reevaluate my stance regarding Microsoft’s browsers. Integrating GPT-4 into their search/chat functionalities seems like it would be a very solid, strategic move — at least as of late April 2023.

Speaking of new items coming from Microsoft, also see:

Microsoft makes its AI-powered Designer tool available in preview — from techcrunch.com by Kyle Wiggers

Excerpts:

[On 4/27/23], Microsoft Designer, Microsoft’s AI-powered design tool, launched in public preview with an expanded set of features.

Announced in October, Designer is a Canva-like web app that can generate designs for presentations, posters, digital postcards, invitations, graphics and more to share on social media and other channels. It leverages user-created content and DALL-E 2, OpenAI’s text-to-image AI, to ideate designs, with drop-downs and text boxes for further customization and personalization.

…

Designer will remain free during the preview period, Microsoft says — it’s available via the Designer website and in Microsoft’s Edge browser through the sidebar. Once the Designer app is generally available, it’ll be included in Microsoft 365 Personal and Family subscriptions and have “some” functionality free to use for non-subscribers, though Microsoft didn’t elaborate.

From DSC:

Check this confluence of emerging technologies out!

Natural language interfaces have truly arrived. Here’s ChatARKit: an open source demo using #chatgpt to create experiences in #arkit. How does it work? Read on. (1/) pic.twitter.com/R2pYKS5RBq

— Bart Trzynadlowski (@BartronPolygon) December 21, 2022

Also see:

The Future of Education Using AR

via @gigadgets_ #AR #AugmentedReality #MR #mixedreality #ai #technology #vr #virtualreality #innovation #edtech #tech #future #medtech #healthtech #education #iot #teacher #classroom #mi #futurism #digitalasset #edutech pic.twitter.com/ZOP0l2kkoR

— Fred Steube (@steube) December 23, 2022

How to spot AI-generated text — from technologyreview.com by Melissa Heikkilä

The internet is increasingly awash with text written by AI software. We need new tools to detect it.

Excerpt:

This sentence was written by an AI—or was it? OpenAI’s new chatbot, ChatGPT, presents us with a problem: How will we know whether what we read online is written by a human or a machine?

…

“If you have enough text, a really easy cue is the word ‘the’ occurs too many times,” says Daphne Ippolito, a senior research scientist at Google Brain, the company’s research unit for deep learning.

…

“A typo in the text is actually a really good indicator that it was human-written,” she adds.
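To make the "too many 'the's" cue concrete, here is a minimal Python sketch. It is a toy illustration only, not a method from the article or from any real detection tool. The function names `the_rate` and `compare` are hypothetical and were made up for this example. The sketch measures how often "the" appears in a text and compares that rate to a human-written reference text that you supply. It says nothing about typos, and on its own it is nowhere near a reliable AI-text detector.

```python
import re


def the_rate(text: str) -> float:
    """Hypothetical helper: fraction of word tokens in `text` that are 'the'.

    Toy illustration of the frequency cue quoted above; NOT a real detector.
    """
    # Lowercase, then pull out alphabetic words (keeping contractions like "don't").
    tokens = re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())
    if not tokens:
        return 0.0
    return tokens.count("the") / len(tokens)


def compare(sample: str, reference: str) -> float:
    """Hypothetical helper: how many times more often 'the' appears in `sample`
    than in a human-written `reference` text of your choosing."""
    ref = the_rate(reference)
    # If the reference never uses 'the', any use in the sample is "infinitely" more.
    return the_rate(sample) / ref if ref else float("inf")


if __name__ == "__main__":
    print(round(the_rate("The cat sat on the mat."), 3))  # 0.333
```

Any threshold for "too many" would be an assumption on your part. Per Ippolito, the cue only becomes meaningful when you have enough text.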

7 Best Tech Developments of 2022 — from thetechranch.com

Excerpt:

As we near the end of 2022, it’s a great time to look back at some of the top technologies that have emerged this year. From AI and virtual reality to renewable energy and biotechnology, there have been a number of exciting developments that have the potential to shape the future in a big way. Here are some of the top technologies that have emerged in 2022:

Virtual or in-person: The next generation of trial lawyers must be prepared for anything — from reuters.com by Stratton Horres and Karen L. Bashor


Excerpt:

In this article, we will examine several key ways in which COVID-19 has changed trial proceedings, strategy and preparation and how mentoring programs can make a difference.

…

COVID-19 has shaken up the jury trial experience for both new and experienced attorneys. For those whose only trials have been conducted during COVID-19 restrictions and for everyone easing back into the in-person trials, these are key elements to keep in mind practicing forward. Firm mentoring programs should be considered to prepare the future generation of trial lawyers for both live and virtual trials.

From DSC:

I think law firms will need to expand the number of disciplines coming to their strategic tables. That is, as more disciplines are required to successfully practice law in the 21st century, more folks with technical backgrounds and/or abilities will be needed. Front-end and back-end web developers, User Experience Designers, Instructional Designers, Audio/Visual Specialists, and others come to mind. Such people can help develop the necessary spaces, skills, training, and mentoring programs mentioned in this article. As within our learning ecosystems, the efficient and powerful use of teams of specialists will deliver the best products and services.

From DSC:

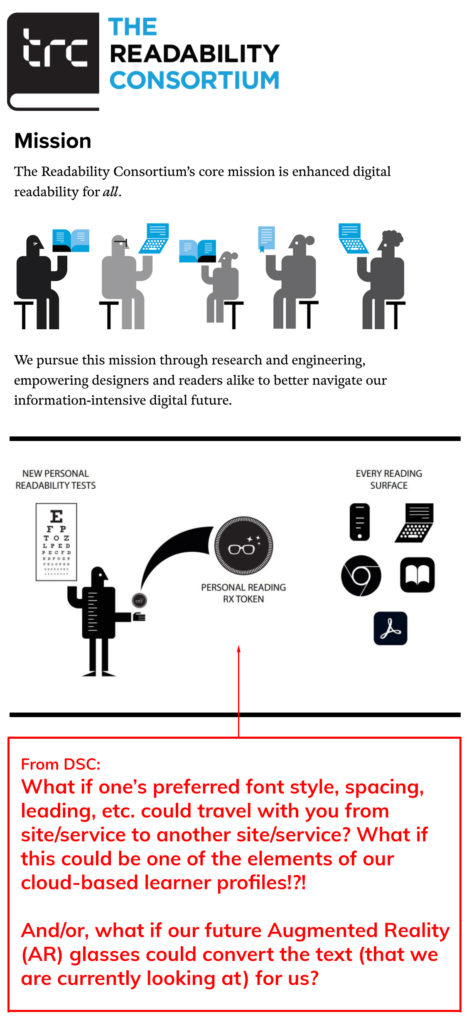

After checking out the following two links, I created the graphic above:

- Readability initiative > Better reading for all. — from Adobe.com

We’re working with educators, nonprofits, and technologists to help people of all ages and abilities read better by personalizing the reading experience on digital devices.

- The Readability Consortium > About page

Also related/see:

- Microsoft Education Unveils Expanded Literacy, Accessibility Tools for Students and Teachers — from thejournal.com by Kristal Kuykendall

- Announcing an expanded literacy portfolio to reach every learner — from educationblog.microsoft.com by Paige Johnson

- What Kids Are Reading — from renaissance.com

ELC 070: Conversation Design for the Voice User Interface — from theelearningcoach.com (ELC) by Connie Malamed

A Conversation with Myra Roldan

Excerpt (emphasis DSC):

Do you wonder what learning experience designers will be doing in the future? I think one area where we will need to upskill is in conversation design. Think of the possibilities that chatbots and voice interfaces will provide for accessing information for learning and for support in the flow of work. In this episode, I speak with Myra Roldan about conversation design for the voice user interface (VUI). We discuss what makes an effective conversation and the technologies for getting started with voice user interface design.

Cisco and Google join forces to transform the future of hybrid work — from blog.webex.com by Kedar Ganta

Excerpts:

Webex [on 12/7/21] announced the public preview of its native meeting experience for Glass Enterprise Edition 2 (Glass), a lightweight eye wearable device with a transparent display developed by Google. Webex Expert on Demand on Glass provides an immersive collaboration experience that supports natural voice commands, gestures on touchpad, and head movements to accomplish routine tasks.