Student Use Cases for AI: Start by Sharing These Guidelines with Your Class — from hbsp.harvard.edu by Ethan Mollick and Lilach Mollick

To help you explore some of the ways students can use this disruptive new technology to improve their learning—while making your job easier and more effective—we’ve written a series of articles that examine the following student use cases:

- AI as feedback generator

- AI as personal tutor

- AI as team coach

- AI as learner

Recap: Teaching in the Age of AI (What’s Working, What’s Not) — from celt.olemiss.edu by Derek Bruff, visiting associate director

Earlier this week, CETL and AIG hosted a discussion among UM faculty and other instructors about teaching and AI this fall semester. We wanted to know what was working when it came to policies and assignments that responded to generative AI technologies like ChatGPT, Google Bard, Midjourney, DALL-E, and more. We were also interested in hearing what wasn’t working, as well as questions and concerns that the university community had about teaching and AI.

Teaching: Want your students to be skeptical of ChatGPT? Try this. — from chronicle.com by Beth McMurtrie

Then, in class he put them into groups where they worked together to generate a 500-word essay on “Why I Write” entirely through ChatGPT. Each group had complete freedom in how they chose to use the tool. The key: They were asked to evaluate their essay on how well it offered a personal perspective and demonstrated a critical reading of the piece. Weiss also graded each ChatGPT-written essay and included an explanation of why he came up with that particular grade.

After that, the students were asked to record their observations on the experiment on the discussion board. Then they came together again as a class to discuss the experiment.

Weiss shared some of his students’ comments with me (with their approval). Here are a few:

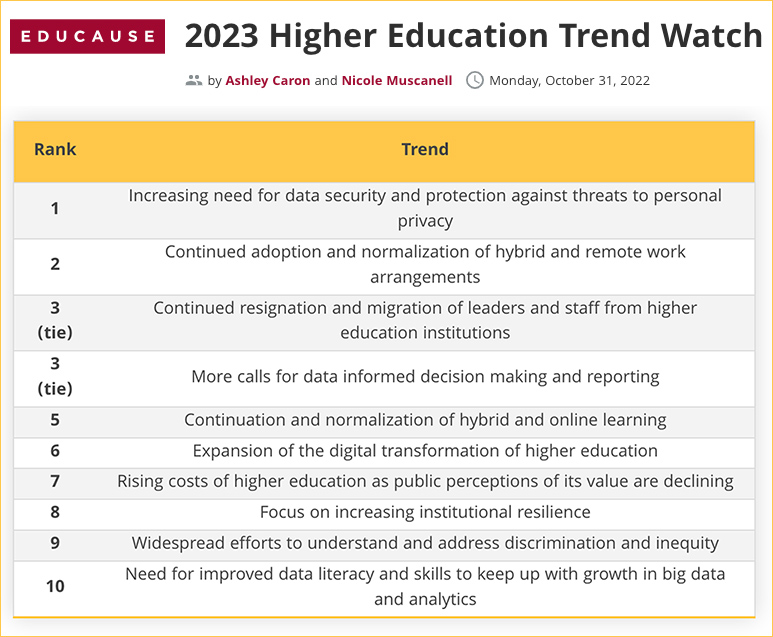

2023 EDUCAUSE Horizon Action Plan: Generative AI — from library.educause.edu by Jenay Robert and Nicole Muscanell

Asked to describe the state of generative AI that they would like to see in higher education 10 years from now, panelists collaboratively constructed their preferred future.

Will Teachers Listen to Feedback From AI? Researchers Are Betting on It — from edsurge.com by Olina Banerji

Julie York, a computer science and media teacher at South Portland High School in Maine, was scouring the internet for discussion tools for her class when she found TeachFX. An AI tool that takes recorded audio from a classroom and turns it into data about who talked and for how long, it seemed like a cool way for York to discuss issues of data privacy, consent and bias with her students. But York soon realized that TeachFX was meant for much more.

York found that TeachFX listened to her very carefully, and generated a detailed feedback report on her specific teaching style. York was hooked, in part because she says her school administration simply doesn’t have the time to observe teachers while tending to several other pressing concerns.

“I rarely ever get feedback on my teaching style. This was giving me 100 percent quantifiable data on how many questions I asked and how often I asked them in a 90-minute class,” York says. “It’s not a rubric. It’s a reflection.”

TeachFX is easy to use, York says. It’s as simple as switching on a recording device.

…

But TeachFX, she adds, is focused not on her students’ achievements, but instead on her performance as a teacher.

ChatGPT Is Landing Kids in the Principal’s Office, Survey Finds — from the74million.org by Mark Keierleber

While educators worry that students are using generative AI to cheat, a new report finds students are turning to the tool more for personal problems.

Indeed, 58% of students, and 72% of those in special education, said they’ve used generative AI during the 2022-23 academic year, just not primarily for the reasons that teachers fear most. Among youth who completed the nationally representative survey, just 23% said they used it for academic purposes and 19% said they’ve used the tools to help them write and submit a paper. Instead, 29% reported having used it to deal with anxiety or mental health issues, 22% for issues with friends and 16% for family conflicts.

Part of the disconnect dividing teachers and students, researchers found, may come down to gray areas. Just 40% of parents said they or their child were given guidance on ways they can use generative AI without running afoul of school rules. Only 24% of teachers say they’ve been trained on how to respond if they suspect a student used generative AI to cheat.

Embracing weirdness: What it means to use AI as a (writing) tool — from oneusefulthing.org by Ethan Mollick

AI is strange. We need to learn to use it.

But LLMs are not Google replacements, or thesauruses or grammar checkers. Instead, they are capable of so much more weird and useful help.

Diving Deep into AI: Navigating the L&D Landscape — from learningguild.com by Markus Bernhardt

The prospect of AI-powered, tailored, on-demand learning and performance support is exhilarating: It starts with traditional digital learning made into fully adaptive learning experiences, which would adjust to strengths and weaknesses for each individual learner. The possibilities extend all the way through to simulations and augmented reality, an environment to put into practice knowledge and skills, whether as individuals or working in a team simulation. The possibilities are immense.

Thanks to generative AI, such visions are transitioning from fiction to reality.

Video: Unleashing the Power of AI in L&D — from drphilippahardman.substack.com by Dr. Philippa Hardman

An exclusive video walkthrough of my keynote at Sweden’s national L&D conference this week

Highlights

- The wicked problem of L&D: last year, $371 billion was spent on workplace training globally, but only 12% of employees apply what they learn in the workplace

- An innovative approach to L&D: when Mastery Learning is used to design & deliver workplace training, the rate of “transfer” (i.e. behaviour change & application) is 67%

- AI 101: quick summary of classification, generative and interactive AI and its uses in L&D

- The impact of AI: my initial research shows that AI has the potential to scale Mastery Learning and, in the process:

  - reduce the “time to training design” by 94% > faster

  - reduce the cost of training design by 92% > cheaper

  - increase the quality of learning design & delivery by 96% > better

- Research also shows that the vast majority of workplaces are using AI only to “oil the machine” rather than innovate and improve our processes & practices

- Practical tips: how to get started on your AI journey in your company, and a glimpse of what L&D roles might look like in a post-AI world