Is graduate employability a core university priority? — from timeshighereducation.com by Katherine Emms and Andrea Laczik

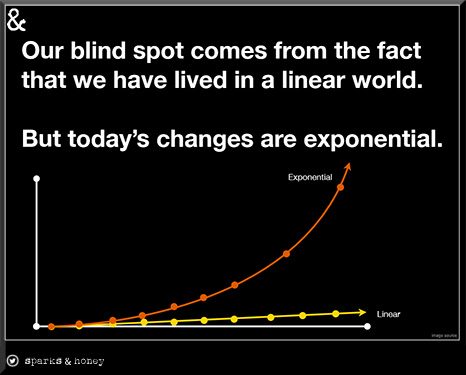

Universities, once judged primarily on the quality of their academic outcomes, are now also expected to prepare students for the workplace. Here’s how higher education is adapting to changing pressures

A clear, deliberate shift in priorities is under way. Embedding employability is central to an Edge Foundation report, carried out in collaboration with UCL’s Institute of Education, looking at how English universities are responding. In placing employability at the centre of their strategies – not just for professional courses but across all disciplines – the two universities that were analysed in this research show how they aim to prepare students for the labour market overall. Although the employability strategy is initiated by the universities’ senior leaders, the research showed that, to be realised, it must be understood and executed by staff at all levels across departments. Offering insights into industry pathways and building relevant skills is complex: it involves curriculum development, student-centred teaching, careers support, partnership work and employer engagement.

Every student can benefit from an entrepreneurial mindset — from timeshighereducation.com by Nicolas Klotz

To develop the next generation of entrepreneurs, universities need to nurture the right mindset in students of all disciplines. Follow these tips to embed entrepreneurial education

This shift demands a radical rethink of how we approach entrepreneurial mindset in higher education. Not as a specialism for a niche group of business students but as a core competency that every student, in every discipline, can benefit from.

At my university, we’ve spent the past several years re-engineering how we embed entrepreneurship into daily student life and learning.

What we’ve learned could help other institutions, especially smaller or resource-constrained ones, adapt to this new landscape.

The first step is recognising that entrepreneurship is not only about launching start-ups for profit. It’s about nurturing a mindset that values initiative, problem-solving, resilience and creative risk-taking. Employers increasingly want these traits, whether the student is applying for a traditional job or proposing their own venture.

Build foundations for university-industry partnerships in 90 days — from timeshighereducation.com by Raul Villamarin Rodriguez and Hemachandran K

Graduate employability could be transformed through systematic integration of industry partnerships. This practical guide offers a framework for change in Indian universities

The most effective transformation strategy for Indian universities lies in systematic industry integration that moves beyond superficial partnerships and towards deep curriculum collaboration. Rather than hoping market alignment will occur naturally, institutions must reverse-engineer academic programmes from verified industry needs.

Our six-month implementation at Woxsen University demonstrates this framework’s practical effectiveness, achieving more than 130 industry partnerships, 100 per cent faculty participation in transformation training, and 75 per cent of students receiving industry-validated credentials with significantly improved employment outcomes.
