The rise of AI fake news is creating a ‘misinformation superspreader’ — from washingtonpost.com by Pranshu Verma

AI is making it easy for anyone to create propaganda outlets, producing content that can be hard to differentiate from real news

Artificial intelligence is automating the creation of fake news, spurring an explosion of web content mimicking factual articles that instead disseminates false information about elections, wars and natural disasters.

Since May, websites hosting AI-created false articles have increased by more than 1,000 percent, ballooning from 49 sites to more than 600, according to NewsGuard, an organization that tracks misinformation.

Historically, propaganda operations have relied on armies of low-paid workers or highly coordinated intelligence organizations to build sites that appear to be legitimate. But AI is making it easy for nearly anyone — whether they are part of a spy agency or just a teenager in their basement — to create these outlets, producing content that is at times hard to differentiate from real news.

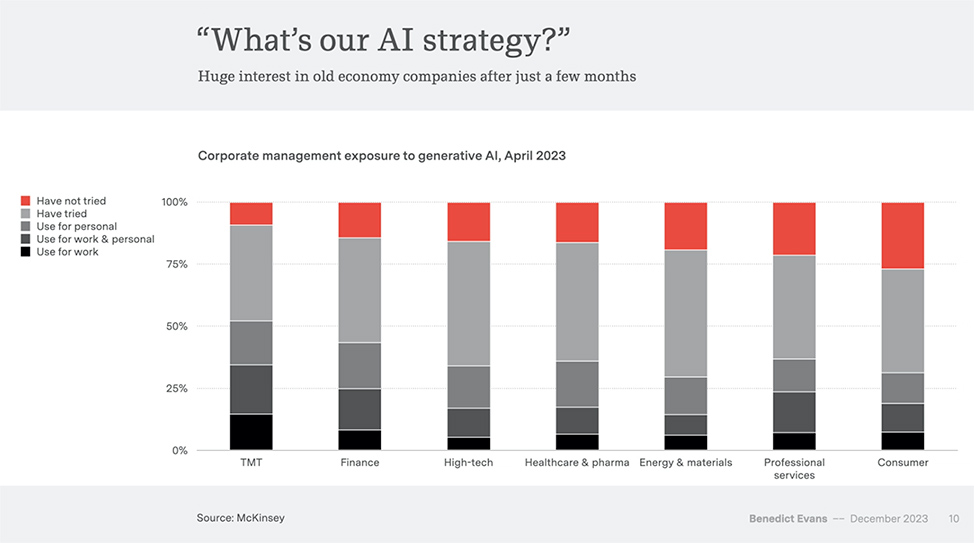

AI, and everything else — from pitch.com by Benedict Evans

Chevy Chatbots Go Rogue — from

How a customer service chatbot made a splash on social media; write your holiday cards with AI

I just bought a 2024 Chevy Tahoe for $1. pic.twitter.com/aq4wDitvQW

— Chris Bakke (@ChrisJBakke) December 17, 2023

Their AI chatbot, designed to assist customers in their vehicle search, became a social media sensation for all the wrong reasons. One user even convinced the chatbot to agree to sell a 2024 Chevy Tahoe for just one dollar!

This story is exactly why AI implementation needs to be approached strategically. Learning to use AI also means learning to think through guardrails and boundaries as you build.

Here are our tips.

Rite Aid used facial recognition on shoppers, fueling harassment, FTC says — from washingtonpost.com by Drew Harwell

A landmark settlement over the pharmacy chain’s use of the surveillance technology could raise further doubts about facial recognition’s use in stores, airports and other venues

The pharmacy chain Rite Aid misused facial recognition technology in a way that subjected shoppers to unfair searches and humiliation, the Federal Trade Commission said Tuesday, part of a landmark settlement that could raise questions about the technology’s use in stores, airports and other venues nationwide.

…

But the chain’s “reckless” failure to adopt safeguards, coupled with the technology’s long history of inaccurate matches and racial biases, ultimately led store employees to falsely accuse shoppers of theft, leading to “embarrassment, harassment, and other harm” in front of their family members, co-workers and friends, the FTC said in a statement.