Report: Smart-city IoT isn’t smart enough yet — from networkworld.com by Jon Gold

A report from Forrester Research details vulnerabilities affecting smart-city internet of things (IoT) infrastructure and offers some methods of mitigation.

From DSC:

Are you kidding me!? Geo-fencing technology or not, I don’t trust this for one second.

Amazon patents ‘surveillance as a service’ tech for its delivery drones — from theverge.com by Jon Porter

Including technology that cuts out footage of your neighbor’s house

Excerpt:

The patent gives a few hints how the surveillance service could work. It says customers would be allowed to pay for visits on an hourly, daily, or weekly basis, and that drones could be equipped with night vision cameras and microphones to expand their sensing capabilities.

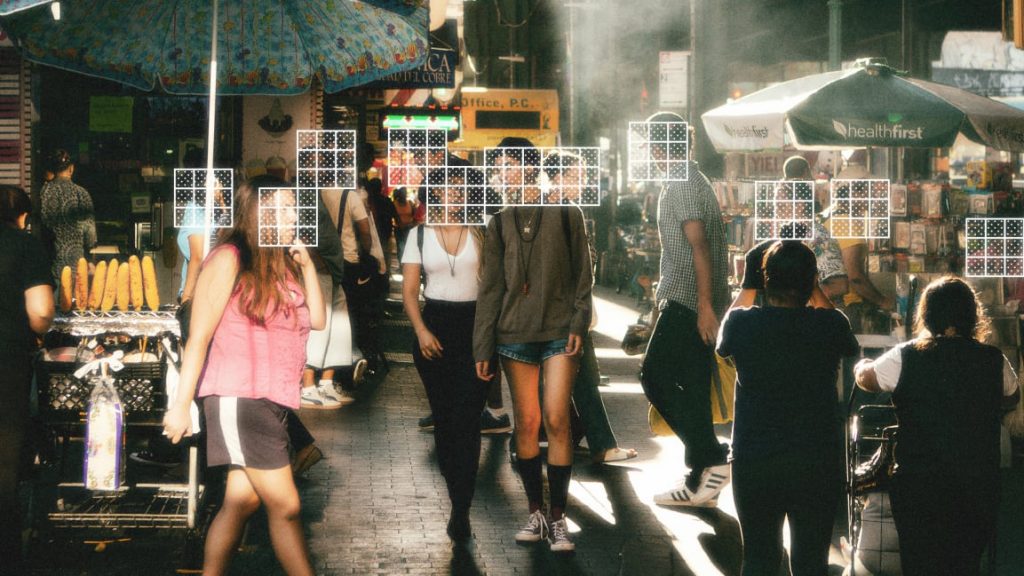

How surveillance cameras could be weaponized with A.I. — from nytimes.com by Niraj Chokshi

Advances in artificial intelligence could supercharge surveillance cameras, allowing footage to be constantly monitored and instantly analyzed, the A.C.L.U. warned in a new report.

Excerpt:

In the report, the organization imagined a handful of dystopian uses for the technology. In one, a politician requests footage of his enemies kissing in public, along with the identities of all involved. In another, a life insurance company offers rates based on how fast people run while exercising. And in another, a sheriff receives a daily list of people who appeared to be intoxicated in public, based on changes to their gait, speech or other patterns.

Analysts have valued the market for video analytics at as much as $3 billion, with the expectation that it will grow exponentially in the years to come. The important players include smaller businesses as well as household names such as Amazon, Cisco, Honeywell, IBM and Microsoft.

From DSC:

We can no longer let a handful of companies tell the rest of us how our society will be formed, how it will be shaped, and how it will act.

For example, Amazon should NOT be able to simply send its robots/drones out to deliver packages; that type of decision is NOT up to them. I suspect that Amazon cares more about earning larger profits and pleasing Wall Street than about our society at large. If Amazon is able to introduce its robots all over the place, what’s to keep any and every company from introducing its own army of robots or drones? If we allow this to occur, it won’t be long before our streets, sidewalks, and airspace are filled with noise and clutter.

So…a question for representatives, senators, legislators, mayors, judges, lawyers, etc.:

- What should we be building in order to better allow citizens to weigh in on emerging technologies and whether any given emerging technology — or a specific product/service — should be rolled out…or not?

Stanford engineers make editing video as easy as editing text — from news.stanford.edu by Andrew Myers

A new algorithm allows video editors to modify talking head videos as if they were editing text – copying, pasting, or adding and deleting words.

Excerpts:

In television and film, actors often flub small bits of otherwise flawless performances. Other times they leave out a critical word. For editors, the only solution so far is to accept the flaws or fix them with expensive reshoots.

Imagine, however, if that editor could modify video using a text transcript. Much like word processing, the editor could easily add new words, delete unwanted ones or completely rearrange the pieces by dragging and dropping them as needed to assemble a finished video that looks almost flawless to the untrained eye.

…

The work could be a boon for video editors and producers but does raise concerns as people increasingly question the validity of images and videos online, the authors said. However, they propose some guidelines for using these tools that would alert viewers and performers that the video has been manipulated.

Addendum on 6/13/19:

- Top AI researchers race to detect ‘deepfake’ videos: ‘We are outgunned’ — from mercurynews.com by Drew Harwell | The Washington Post

Top artificial-intelligence researchers across the country are racing to defuse an extraordinary political weapon: computer-generated fake videos that could undermine candidates and mislead voters during the 2020 presidential campaign.

An image created from a fake video of former president Barack Obama displays elements of facial mapping used in new technology that allows users to create convincing fabricated footage of real people, known as “deepfakes.” (AP)