American students have changed their majors — from bloomberg.com by Justin Fox

Health professions are in, education and the humanities are out. Here are some reasons for the shift.

Microsoft debuts Ideas in Word, a grammar and style suggestions tool powered by AI — from venturebeat.com by Kyle Wiggers; with thanks to Mr. Jack Du Mez for his posting on this over on LinkedIn

Excerpt:

The first day of Microsoft’s Build developer conference is typically chock-full of news, and this year was no exception. During a keynote headlined by CEO Satya Nadella, the Seattle company took the wraps off a slew of updates to Microsoft 365, its lineup of productivity-focused, cloud-hosted software and subscription services. Among the highlights were a new AI-powered grammar and style checker in Word Online, dubbed Ideas in Word, and dynamic email messages in Outlook Mobile.

Ideas in Word builds on Editor, an AI-powered proofreader for Office 365 that was announced in July 2016 and replaced the Spelling & Grammar pane in Office 2016 later that year. Ideas in Word similarly taps natural language processing and machine learning to deliver intelligent, contextually aware suggestions that could improve a document’s readability. For instance, it’ll recommend ways to make phrases more concise, clear, and inclusive, and when it comes across a particularly tricky snippet, it’ll put forward synonyms and alternative phrasings.

Also see:

An algorithm wipes clean the criminal pasts of thousands — from bbc.com by Dave Lee

Excerpt:

This month, a judge in California cleared thousands of criminal records with one stroke of his pen. He did it thanks to a ground-breaking new algorithm that reduces a process that took months to mere minutes. The programmers behind it say: we’re just getting started solving America’s urgent problems.

Drawalong AR Turns YouTube Art Tutorials Into Augmented Tracing Paper — from vrscout.com by Kyle Melnick

We Built an ‘Unbelievable’ (but Legal) Facial Recognition Machine — from nytimes.com by Sahil Chinoy

“‘The future of human flourishing depends upon facial recognition technology being banned,’ wrote Woodrow Hartzog, a professor of law and computer science at Northeastern, and Evan Selinger, a professor of philosophy at the Rochester Institute of Technology, last year. ‘Otherwise, people won’t know what it’s like to be in public without being automatically identified, profiled, and potentially exploited.’ Facial recognition is categorically different from other forms of surveillance, Mr. Hartzog said, and uniquely dangerous. Faces are hard to hide and can be observed from far away, unlike a fingerprint. Name and face databases of law-abiding citizens, like driver’s license records, already exist. And for the most part, facial recognition surveillance can be set up using cameras already on the streets.” — Sahil Chinoy; per a weekly e-newsletter from Sam DeBrule at Machine Learnings in Berkeley, CA

Excerpt:

Most people pass through some type of public space in their daily routine — sidewalks, roads, train stations. Thousands walk through Bryant Park every day. But we generally think that a detailed log of our location, and a list of the people we’re with, is private. Facial recognition, applied to the web of cameras that already exists in most cities, is a threat to that privacy.

To demonstrate how easy it is to track people without their knowledge, we collected public images of people who worked near Bryant Park (available on their employers’ websites, for the most part) and ran one day of footage through Amazon’s commercial facial recognition service. Our system detected 2,750 faces from a nine-hour period (not necessarily unique people, since a person could be captured in multiple frames). It returned several possible identifications, including one frame matched to a head shot of Richard Madonna, a professor at the SUNY College of Optometry, with an 89 percent similarity score. The total cost: about $60.

From DSC:

What do you think about this emerging technology and its potential impact on our society — and on other societies like China? Again I ask…what kind of future do we want?

As for me, my face is against the use of facial recognition technology in the United States — as I don’t trust where this could lead.

This wild, wild west situation continues to develop. For example, note how AI and facial recognition get their foot in the door via technologies installed years ago:

The cameras in Bryant Park were installed more than a decade ago so that people could see whether the lawn was open for sunbathing, for example, or check how busy the ice skating rink was in the winter. They are not intended to be a security device, according to the corporation that runs the park.

So Amazon’s use of facial recognition is but another foot in the door.

This needs to be stopped. Now.

Facial recognition technology is a menace disguised as a gift. It’s an irresistible tool for oppression that’s perfectly suited for governments to display unprecedented authoritarian control and an all-out privacy-eviscerating machine.

We should keep this Trojan horse outside of the city. (source)

MIT has just announced a $1 billion plan to create a new college for AI — from technologyreview.com

Excerpt:

One of the birthplaces of artificial intelligence, MIT, has announced a bold plan to reshape its academic program around the technology. With $1 billion in funding, MIT will create a new college that combines AI, machine learning, and data science with other academic disciplines. It is the largest financial investment in AI by any US academic institution to date.

From this page:

The College will:

How MIT’s Mini Cheetah Can Help Accelerate Robotics Research — from spectrum.ieee.org by Evan Ackerman

Sangbae Kim talks to us about the new Mini Cheetah quadruped and his future plans for the robot

From DSC:

Sorry, but while the video/robot is incredible, a feeling in the pit of my stomach makes me reflect upon what’s likely happening along these lines in militaries throughout the globe. I don’t mean to be a fearmonger, but rather a realist.

Law schools escalate their focus on digital skills — from edtechmagazine.com by Eli Zimmerman

Coding, data analytics and device integration give students the tools to become more efficient lawyers.

Excerpt:

Participants learned to use analytics programs and artificial intelligence to complete work in a fraction of the time it usually takes.

For example, students analyzed contracts using AI programs to find errors and areas for improvement across various legal jurisdictions. In another exercise, students learned to use data programs to draft nondisclosure agreements in less than half an hour.

By learning analytics models, students will graduate with the skills to make them more effective — and more employable — professionals.

“As advancing technology and massive data sets enable lawyers to answer complex legal questions with greater speed and efficiency, courses like Legal Analytics will help KU Law students be better advocates for tomorrow’s clients and more competitive for tomorrow’s jobs,” Stephen Mazza, dean of the University of Kansas School of Law, tells Legaltech News.

Reflecting that shift, the Law School Admission Council, which organizes and distributes the Law School Admission Test, will be offering the test exclusively on Microsoft Surface Go tablets starting in July 2019.

From DSC:

I appreciate the article; thanks, Eli. According to one of the articles it links to, “To facilitate the transition to the Digital LSAT starting July 2019, LSAC is procuring thousands of Surface Go tablets that will be loaded with custom software and locked down to ensure the integrity of the exam process and security of the test results.”

Microsoft built a chat bot to match patients to clinical trials — from fortune.com by Dina Bass

Excerpt:

A chat bot that began as a hackathon project at Microsoft’s lab in Israel makes it easier for sick patients to find clinical trials that could provide otherwise unavailable medicines and therapies.

The Clinical Trials Bot lets patients and doctors search for studies related to a disease and then answer a succession of text questions. The bot then suggests links to trials that best match the patients’ needs. Drugmakers can also use it to find test subjects.

Half of all clinical trials for new drugs and therapies never reach the number of patients needed to start, and many others are delayed for the same reason, Bitran said. Meanwhile patients, sometimes desperately sick, find it hard to comb through the roughly 50,000 trials worldwide and their arcane and lengthy criteria—typically 20 to 30 factors. Even doctors struggle to search quickly on behalf of patients, Bitran said.

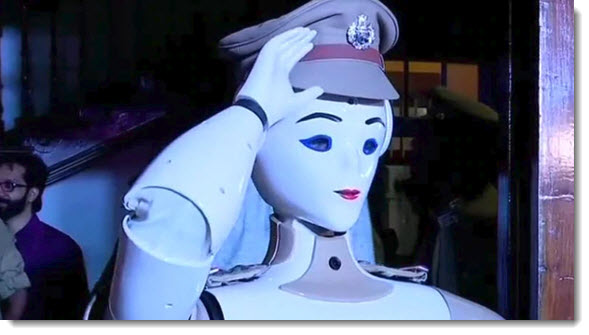
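The bot's flow described above (search by disease, then narrow the list with a succession of questions) can be illustrated with a minimal Python sketch. This is not Microsoft's implementation, which the excerpt does not describe; the `Trial` record, the `match_trials` function, and the sample criteria are all hypothetical. Real trial criteria are lengthy free text, so a production system would first need some way to turn them into checkable rules like the ones assumed here.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


# Hypothetical, simplified trial record: real eligibility criteria are lengthy
# free text, not ready-made predicates like these.
@dataclass
class Trial:
    trial_id: str
    disease: str
    # Maps a question key (e.g. "age") to a check on the patient's answer.
    criteria: Dict[str, Callable[[Any], bool]] = field(default_factory=dict)


def match_trials(trials: List[Trial], disease: str, answers: Dict[str, Any]) -> List[str]:
    """Return IDs of trials for `disease` that the answers do not rule out,
    ranked by how many criteria the answers already satisfy."""
    ranked = []
    for trial in trials:
        if trial.disease != disease:
            continue  # step 1: search by disease
        satisfied = 0
        eligible = True
        for key, check in trial.criteria.items():
            if key not in answers:
                continue  # question not answered yet: trial stays in the running
            if check(answers[key]):
                satisfied += 1
            else:
                eligible = False  # one failed criterion excludes the trial
                break
        if eligible:
            ranked.append((satisfied, trial.trial_id))
    # Best-matching trials first; ties broken by ID for a stable order.
    ranked.sort(key=lambda pair: (-pair[0], pair[1]))
    return [trial_id for _, trial_id in ranked]


# Made-up sample data for illustration only.
trials = [
    Trial("T1", "melanoma", {"age": lambda a: 18 <= a <= 75,
                             "prior_chemo": lambda p: not p}),
    Trial("T2", "melanoma", {"age": lambda a: a >= 65}),
    Trial("T3", "asthma", {"age": lambda a: a >= 12}),
]

print(match_trials(trials, "melanoma", {"age": 60, "prior_chemo": False}))  # prints ['T1']
```

Letting unanswered questions leave a trial in the running mirrors the step-by-step questioning in the excerpt: each new answer can only narrow the list, never widen it.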

India Just Swore in Its First Robot Police Officer — from futurism.com by Dan Robitzski

RoboCop, meet KP-Bot.

Excerpt:

RoboCop

India just swore in its first robotic police officer, which is named KP-Bot.

The animatronic-looking machine was granted the rank of sub-inspector on Tuesday, and it will operate the front desk of Thiruvananthapuram police headquarters, according to India Today.

From DSC:

Whoa….hmmm…note to the ABA and to the legal education field — and actually to anyone involved in developing laws — we need to catch up. Quickly.

My thoughts go to the governments and to the militaries around the globe. Are we now on a slippery slope? How far along are the militaries of the world in integrating robotics and AI into their weapons of war? Quite far, I think.

Also, at the higher education level, are Computer Science and Engineering Departments taking their responsibilities seriously in this regard? What kind of teaching is being done (or not done) in terms of the moral responsibilities of their code? Their robots?

The real reason tech struggles with algorithmic bias — from wired.com by Yael Eisenstat

Excerpts:

ARE MACHINES RACIST? Are algorithms and artificial intelligence inherently prejudiced? Do Facebook, Google, and Twitter have political biases? Those answers are complicated.

But if the question is whether the tech industry is doing enough to address these biases, the straightforward response is no.

…

Humans cannot wholly avoid bias, as countless studies and publications have shown. Insisting otherwise is an intellectually dishonest and lazy response to a very real problem.

…

In my six months at Facebook, where I was hired to be the head of global elections integrity ops in the company’s business integrity division, I participated in numerous discussions about the topic. I did not know anyone who intentionally wanted to incorporate bias into their work. But I also did not find anyone who actually knew what it meant to counter bias in any true and methodical way.

But the company has created its own sort of insular bubble in which its employees’ perception of the world is the product of a number of biases that are engrained within the Silicon Valley tech and innovation scene.

Gartner survey shows 37% of organizations have implemented AI in some form — from gartner.com

Despite talent shortages, the percentage of enterprises employing AI grew 270% over the past four years

Excerpt:

The number of enterprises implementing artificial intelligence (AI) grew 270 percent in the past four years and tripled in the past year, according to the Gartner, Inc. 2019 CIO Survey. Results showed that organizations across all industries use AI in a variety of applications, but struggle with acute talent shortages.

The deployment of AI has tripled in the past year — rising from 25 percent in 2018 to 37 percent today. The reason for this big jump is that AI capabilities have matured significantly and thus enterprises are more willing to implement the technology. “We still remain far from general AI that can wholly take over complex tasks, but we have now entered the realm of AI-augmented work and decision science — what we call ‘augmented intelligence,’” Mr. Howard added.

Key Findings from the “2019 CIO Survey: CIOs Have Awoken to the Importance of AI”

From DSC:

In this posting, I discussed an idea for a new TV show — a program that would be both entertaining and educational. So I suppose that this posting is a Part II along those same lines.

The program I envisioned at that time would focus on significant topics and issues within American society, offered up in a debate/presentation-style format.

I had envisioned that you could have different individuals, groups, or organizations discuss the pros and cons of an issue or topic. The show would provide contact information for helpful resources, groups, organizations, legislators, etc. These contacts would be for learning more about a subject or getting involved with finding a solution for that problem.

OR

…as I revisit that idea today…perhaps the show could feature humans versus an artificial intelligence such as IBM’s Project Debater:

Project Debater is the first AI system that can debate humans on complex topics. Project Debater digests massive texts, constructs a well-structured speech on a given topic, delivers it with clarity and purpose, and rebuts its opponent. Eventually, Project Debater will help people reason by providing compelling, evidence-based arguments and limiting the influence of emotion, bias, or ambiguity.

Robots as a platform: Are you ready? — from dzone.com by Donna Thomas

Robots as a platform are just about to change the world. What are you going to build?

Excerpt:

But unlike those other platforms, robots can independently interact with the physical environment, and that changes everything. As a robot skill developer, you are no longer limited to having your code push pixels around a phone screen.

Instead, your code can push around the phone* itself.

From DSC:

* Or a bomb.

Hmmm….it seems to me that this is another area where we need to slow down and first ask some questions about what we want our future to look/be like. Plus, the legal side of the house needs to catch up as quickly as possible — for society’s benefit.

Digital transformation reality check: 10 trends — from enterprisersproject.com by Stephanie Overby

2019 is the year when CIOs scrutinize investments, work even more closely with the CEO, and look to AI to shape strategy. What other trends will prove key?

Excerpt (emphasis DSC):

6. Technology convergence expands

Lines have already begun to blur between software development and IT operations thanks to the widespread adoption of DevOps. Meanwhile, IT and operational technology are also coming together in data-centric industries like manufacturing and logistics.

“A third convergence – that many are feeling but not yet articulating – will have a profound impact on how CIOs structure and staff their organizations, design their architectures, build their budgets, and govern their operations – is the convergence of applications and infrastructure,” says Edwards. “In the digital age, it is nearly impossible to build a strategy for infrastructure that doesn’t include a substantial number of considerations for applications and vice versa.”

While most IT organizations still have heads of infrastructure and applications managing their own teams, that may begin to change as trends like software-defined infrastructure grow. “In 2019, CIOs will need to begin to grapple with the challenges to their operating models when the lines within the traditional IT tower blur and sometimes fade,” Edwards says.