The Verge | What’s Next With AI | February 2024 | Consumer Survey

DC: Just because we can doesn’t mean we should.

It brings to mind #AI and #robotics and the #military — hmmmm…. https://t.co/1J4XKiHRUl

— Daniel Christian (he/him/his) (@dchristian5) April 25, 2024

Microsoft AI creates talking deepfakes from single photo — from inavateonthenet.net

The Great Hall – where now with AI? It is not ‘Human Connection V Innovative Technology’ but ‘Human Connection + Innovative Technology’ — from donaldclarkplanb.blogspot.com by Donald Clark

The theme of the day was Human Connection V Innovative Technology. I see this a lot at conferences, setting up the human connection (social) against the machine (AI). I think this is ALL wrong. It is, and has always been a dialectic, human connection (social) PLUS the machine. Everyone had a smartphone, most use it for work, comms and social media. The binary between human and tech has long disappeared.

Techno-Social Engineering: Why the Future May Not Be Human, TikTok’s Powerful ForYou Algorithm, & More — by Misha Da Vinci

Things to consider as you dive into this edition:

- As we increasingly depend on technology, how is it changing us?

- In the interaction between humans and technology, who is adapting to whom?

- Is the technology being built for humans, or are we being changed to fit into tech systems?

- As time passes, will we become more like robots or the AI models we use?

- Over the next 30 years, as we increasingly interact with technology, who or what will we become?

It’s been an insane week for AI (part 2)

Here are 14 most impressive reveals from this week:

1/ China just released OpenAI’s Sora rival “Vidu” which can create realistic clips in seconds. pic.twitter.com/MnTv9Wxpef

— Barsee (@heyBarsee) April 27, 2024

Are we ready to navigate the complex ethics of advanced AI assistants? — from futureofbeinghuman.com by Andrew Maynard

An important new paper lays out the importance and complexities of ensuring increasingly advanced AI-based assistants are developed and used responsibly

Last week a behemoth of a paper was released by AI researchers in academia and industry on the ethics of advanced AI assistants.

It’s one of the most comprehensive and thoughtful papers on developing transformative AI capabilities in socially responsible ways that I’ve read in a while. And it’s essential reading for anyone developing and deploying AI-based systems that act as assistants or agents — including many of the AI apps and platforms that are currently being explored in business, government, and education.

The paper — The Ethics of Advanced AI Assistants — is written by 57 co-authors representing researchers at Google DeepMind, Google Research, Jigsaw, and a number of prominent universities that include Edinburgh University, the University of Oxford, and Delft University of Technology. Coming in at 274 pages this is a massive piece of work. And as the authors persuasively argue, it’s a critically important one at this point in AI development.

Key questions for the ethical and societal analysis of advanced AI assistants include:

- What is an advanced AI assistant? How does an AI assistant differ from other kinds of AI technology?

- What capabilities would an advanced AI assistant have? How capable could these assistants be?

- What is a good AI assistant? Are there certain values that we want advanced AI assistants to evidence across all contexts?

- Are there limits on what AI assistants should be allowed to do? If so, how are these limits determined?

- What should an AI assistant be aligned with? With user instructions, preferences, interests, values, well-being or something else?

- What issues need to be addressed for AI assistants to be safe? What does safety mean for this class of technologies?

- What new forms of persuasion might advanced AI assistants be capable of? How can we ensure that users remain appropriately in control of the technology?

- How can people – especially vulnerable users – be protected from AI manipulation and unwanted disclosure of personal information?

- Is anthropomorphism for AI assistants morally problematic? If so, might it still be permissible under certain conditions?

- …

Beyond the Hype: Taking a 50 Year Lens to the Impact of AI on Learning — from nafez.substack.com by Nafez Dakkak and Chris Dede

How do we make sure LLMs are not “digital duct tape”?

[Per Chris Dede] We often think of the product of teaching as the outcome (e.g. an essay, a drawing, etc.). The essence of education, in my view, lies not in the products or outcomes of learning but in the journey itself. The artifact is just a symbol that you’ve taken the journey.

The process of learning — the exploration, challenges, and personal growth that occur along the way — is where the real value lies. For instance, the act of writing an essay is valuable not merely for the final product but for the intellectual journey it represents. It forces you to improve and organize your thinking on a subject.

This distinction becomes important with the rise of generative AI, because it uniquely allows us to produce these artifacts without taking the journey.

As I’ve argued previously, I am worried that all this hype around LLMs renders them a “type of digital duct-tape to hold together an obsolete industrial-era educational system”.

Speaking of AI in our learning ecosystems, also see:

On Building an AI Policy for Teaching & Learning — by Lance Eaton

How students drove the development of a policy for students and faculty

Well, last month, the policy was finally approved by our Faculty Curriculum Committee and we can finally share the final version: AI Usage Policy. College Unbound also created (all-human, no AI used) a press release with the policy and some of the details.

To ensure you see this:

- Usage Guidelines for AI Generative Tools at College Unbound

These guidelines were created and reviewed by College Unbound students in Spring 2023 with the support of Lance Eaton, Director of Faculty Development & Innovation. The students include S. Fast, K. Linder-Bey, Veronica Machado, Erica Maddox, Suleima L., Lora Roy.

ChatGPT hallucinates fake but plausible scientific citations at a staggering rate, study finds — from psypost.org by Eric W. Dolan

A recent study has found that scientific citations generated by ChatGPT often do not correspond to real academic work. The study, published in the Canadian Psychological Association’s Mind Pad, found that “false citation rates” across various psychology subfields ranged from 6% to 60%. Surprisingly, these fabricated citations feature elements such as legitimate researchers’ names and properly formatted digital object identifiers (DOIs), which could easily mislead both students and researchers.

…

MacDonald found that a total of 32.3% of the 300 citations generated by ChatGPT were hallucinated. Despite being fabricated, these hallucinated citations were constructed with elements that appeared legitimate — such as real authors who are recognized in their respective fields, properly formatted DOIs, and references to legitimate peer-reviewed journals.

Forbes 2024 AI 50 List: Top Artificial Intelligence Startups — from forbes.com by Kenrick Cai

The artificial intelligence sector has never been more competitive. Forbes received some 1,900 submissions this year, more than double last year’s count. Applicants do not pay a fee to be considered and are judged for their business promise and technical usage of AI through a quantitative algorithm and qualitative judging panels. Companies are encouraged to share data on diversity, and our list aims to promote a more equitable startup ecosystem. But disparities remain sharp in the industry. Only 12 companies have women cofounders, five of whom serve as CEO, the same count as last year. For more, see our full package of coverage, including a detailed explanation of the list methodology, videos and analyses on trends in AI.

Adobe Previews Breakthrough AI Innovations to Advance Professional Video Workflows Within Adobe Premiere Pro — from news.adobe.com

- New Generative AI video tools coming to Premiere Pro this year will streamline workflows and unlock new creative possibilities, from extending a shot to adding or removing objects in a scene

- Adobe is developing a video model for Firefly, which will power video and audio editing workflows in Premiere Pro and enable anyone to create and ideate

- Adobe previews early explorations of bringing third-party generative AI models from OpenAI, Pika Labs and Runway directly into Premiere Pro, making it easy for customers to draw on the strengths of different models within the powerful workflows they use every day

- AI-powered audio workflows in Premiere Pro are now generally available, making audio editing faster, easier and more intuitive

Also relevant/see:

- Adobe Announces New AI Tools For Premiere Pro — from forbes.com by Mark Sparrow

- Adobe adds more AI and Udio upends AI music — from heatherbcooper.substack.com by Heather Cooper

Is Sora coming to Premiere Pro?

The pace of AI and Robotics has been incredible.

So, I share the most important research and developments every week.

Here’s everything that happened and how to make sense out of it:

— Brett Adcock (@adcock_brett) April 14, 2024

AI for the physical world — from superhuman.ai by Zain Kahn

Excerpt: (emphasis DSC)

A new company called Archetype is trying to tackle that problem: It wants to make AI useful for more than just interacting with and understanding the digital realm. The startup just unveiled Newton — “the first foundation model that understands the physical world.”

What’s it for?

A warehouse or factory might have 100 different sensors that have to be analyzed separately to figure out whether the entire system is working as intended. Newton can understand and interpret all of the sensors at the same time, giving a better overview of how everything’s working together. Another benefit: You can ask Newton questions in plain English without needing much technical expertise.

How does it work?

- Newton collects data from radar, motion sensors, and chemical and environmental trackers

- It uses an LLM to combine each of those data streams into a cohesive package

- It translates that data into text, visualizations, or code so it’s easy to understand

Apple’s $25-50 million Shutterstock deal highlights fierce competition for AI training data — from venturebeat.com by Michael Nuñez; via Tom Barrett’s Promptcraft e-newsletter

Apple has entered into a significant agreement with stock photography provider Shutterstock to license millions of images for training its artificial intelligence models. According to a Reuters report, the deal is estimated to be worth between $25 million and $50 million, placing Apple among several tech giants racing to secure vast troves of data to power their AI systems.

The University Student’s Guide To Ethical AI Use — from studocu.com; with thanks to Jervise Penton at 6XD Media Group for this resource

This comprehensive guide offers:

- Up-to-date statistics on the current state of AI in universities, how institutions and students are currently using artificial intelligence

- An overview of popular AI tools used in universities and their limitations as study tools

- Tips on how to ethically use AI and how to maximize its capabilities for students

- Current existing punishment and penalties for cheating using AI

- A checklist of questions to ask yourself, before, during, and after an assignment to ensure ethical use

Some of the key facts you might find interesting are:

- The total value of AI being used in education was estimated to reach $53.68 billion by the end of 2032.

- 68% of students say using AI has impacted their academic performance positively.

- Educators using AI tools say the technology helps speed up their grading process by as much as 75%.

[Report] The Top 100 AI for Work – April 2024 — from flexos.work; with thanks to Daan van Rossum for this resource

AI is helping us work up to 41% more effectively, according to recent Bain research. We review the platforms to consider for ourselves and our teams.

Following our AI Top 150, we spent the past few weeks analyzing data on the top AI platforms for work. This report shares key insights, including the AI tools you should consider adopting to work smarter, not harder.

While there is understandable concern about AI in the work context, the platforms in this list paint a different picture. It shows a future of work where people can do what humans are best suited for while offloading repetitive, digital tasks to AI.

This will fuel the notion that it’s not AI that takes your job but a supercharged human with an army of AI tools and agents. This should be a call to action for every working person and business leader reading this.

Dr Abigail Rekas, Lawyer & Lecturer at the School of Law, University of Galway

Abigail is a lecturer on two of the Law micro-credentials at University of Galway – Lawyering Technology & Innovation and Law & Analytics. Micro-credentials are short, flexible courses designed to fit around your busy life! They are designed in collaboration with industry to meet specific skills needs and are accredited by leading Irish universities.

Visit: universityofgalway.ie/courses/micro-credentials/

The Implications of Generative AI: From the Delivery of Legal Services to the Delivery of Justice — from iaals.du.edu

The potential for AI’s impact is broad, as it has the ability to impact every aspect of human life, from home to work. It will impact our relationships to everything and everyone in our world. The implications for generative AI on the legal system, from how we deliver legal services to how we deliver justice, will be just as far reaching.

[N]ow we face the latest technological frontier: artificial intelligence (AI).… Law professors report with both awe and angst that AI apparently can earn Bs on law school assignments and even pass the bar exam. Legal research may soon be unimaginable without it. AI obviously has great potential to dramatically increase access to key information for lawyers and non-lawyers alike. But just as obviously it risks invading privacy interests and dehumanizing the law.

…

When you can no longer sell the time it takes to achieve a client’s outcome, then you must sell the outcome itself and the client’s experience of getting there. That completely changes the dynamics of what law firms are all about.

Preparing the Next Generation of Tech-Ready Lawyers — from news.gsu.edu

Legal Analytics and Innovation Initiative Gives Students a Competitive Advantage

Georgia State University College of Law faculty understand this need and designed the Legal Analytics & Innovation Initiative (LAII) to equip students with the competitive skills desired by law firms and other companies that align with the emerging technological environment.

“As faculty, we realized we need to be forward-thinking about incorporating technology into our curriculum. Students must understand new areas of law that arise from or are significantly altered by technological advances, like cybersecurity, privacy and AI. They also must understand how these advances change the practice of law,” said Kris Niedringhaus, associate dean for Law Library, Information Services, Legal Technology & Innovation.

The Imperative Of Identifying Use Cases In Legal Tech: A Guiding Light For Innovation In The Age Of AI — from abovethelaw.com by Olga V. Mack

In the quest to integrate AI and legal technology into legal practice, use cases are not just important but indispensable.

As the legal profession continues to navigate the waters of digital transformation, the importance of use cases stands as a beacon guiding the journey. They are the litmus test for the practical value of technology, ensuring that innovations not only dazzle with potential but also deliver tangible benefits. In the quest to integrate AI and legal technology into legal practice, use cases are not just important but indispensable.

The future of legal tech is not about technology for technology’s sake. It’s about thoughtful, purpose-driven innovation that enhances the practice of law, improves client outcomes, and upholds the principles of justice. Use cases are the roadmap for this future, charting a course for technology that is meaningful, impactful, and aligned with the noble pursuit of law.

How Generative AI Owns Higher Education. Now What? — from forbes.com by Steve Andriole

Excerpt (emphasis DSC):

What about course videos? Professors can create them (by lecturing into a camera for several hours hopefully in different clothes) from the readings, from their interpretations of the readings, from their own case experiences – from anything they like. But now professors can direct the creation of the videos by talking – actually describing – to a CustomGPT about what they’d like the video to communicate with their or another image. Wait. What? They can make a video by talking to a CustomGPT and even select the image they want the “actor” to use? Yes. They can also add a British accent and insert some (GenAI-developed) jokes into the videos if they like. All this and much more is now possible. This means that a professor can specify how long the video should be, what sources should be consulted and describe the demeanor the professor wants the video to project.

From DSC:

Though I wasn’t crazy about the clickbait type of title here, I still thought that the article was solid and thought-provoking. It contained several good ideas for using AI.

Excerpt from a recent EdSurge Higher Ed newsletter:

There are darker metaphors though — ones that focus on the hazards for humanity of the tech. Some professors worry that AI bots are simply replacing hired essay-writers for many students, doing work for a student that they can then pass off as their own (and doing it for free).

From DSC:

Hmmm…the use of essay writers was around long before AI became mainstream within higher education. So we already had a serious problem where students didn’t see the why in what they were being asked to do. Some students still aren’t sold on the why of the work in the first place. The situation seems to involve ethics, yes, but it also seems to say that we haven’t sold students on the benefits of putting in the work. Students seem to be saying I don’t care about this stuff…I just need the degree so I can exit stage left.

My main point: The issue didn’t start with AI…it started long before that.

And somewhat relevant here, also see:

I Have Bigger Fish to Fry: Why K12 Education is Not Thinking About AI — from medium.com by Maurie Beasley, M.Ed. (Edited by Jim Beasley)

This financial stagnation is occurring as we face a multitude of escalating challenges. These challenges include but are in no way limited to, chronic absenteeism, widespread student mental health issues, critical staff shortages, rampant classroom behavior issues, a palpable sense of apathy for education in students, and even, I dare say, hatred towards education among parents and policymakers.

…

Our current focus is on keeping our heads above water, ensuring our students’ safety and mental well-being, and simply keeping our schools staffed and our doors open.

Meet Ed: Ed is an educational friend designed to help students reach their limitless potential. — from lausd.org (Los Angeles School District, the second largest in the U.S.)

What is Ed?

An easy-to-understand learning platform designed by Los Angeles Unified to increase student achievement. It offers personalized guidance and resources to students and families 24/7 in over 100 languages.

Also relevant/see:

- Los Angeles Unified Bets Big on ‘Ed,’ an AI Tool for Students — by Lauraine Langreo

The Los Angeles Unified School District has launched an AI-powered learning tool that will serve as a “personal assistant” to students and their parents. The tool, named “Ed,” can provide students from the nation’s second-largest district information about their grades, attendance, upcoming tests, and suggested resources to help them improve their academic skills on their own time, Superintendent Alberto Carvalho announced March 20. Students can also use the app to find social-emotional-learning resources, see what’s for lunch, and determine when their bus will arrive.

Could OpenAI’s Sora be a big deal for elementary school kids? — from futureofbeinghuman.com by Andrew Maynard

Despite all the challenges it comes with, AI-generated video could unleash the creativity of young children and provide insights into their inner worlds – if it’s developed and used responsibly

Like many others, I’m concerned about the challenges that come with hyper-realistic AI-generated video. From deep fakes and disinformation to blurring the lines between fact and fiction, generative AI video is calling into question what we can trust, and what we cannot.

And yet despite all the issues the technology is raising, it also holds quite incredible potential, including as a learning and development tool — as long as we develop and use it responsibly.

I was reminded of this a few days back while watching the latest videos from OpenAI created by their AI video engine Sora — including the one below generated from the prompt “an elephant made of leaves running in the jungle”

…

What struck me while watching this — perhaps more than any of the other videos OpenAI has been posting on its TikTok channel — is the potential Sora has for translating the incredibly creative but often hard to articulate ideas someone may have in their head, into something others can experience.

Can AI Aid the Early Education Workforce? — from edsurge.com by Emily Tate Sullivan

During a panel at SXSW EDU 2024, early education leaders discussed the potential of AI to support and empower the adults who help our nation’s youngest children.

While the vast majority of the conversations about AI in education have centered on K-12 and higher education, few have considered the potential of this innovation in early care and education settings.

At the conference, a panel of early education leaders gathered to do just that, in a session exploring the potential of AI to support and empower the adults who help our nation’s youngest children, titled, “ChatECE: How AI Could Aid the Early Educator Workforce.”

Hau shared that K-12 educators are using the technology to improve efficiency in a number of ways, including to draft individualized education programs (IEPs), create templates for communicating with parents and administrators, and in some cases, to support building lesson plans.

Again, we’ve never seen change happen as fast as it’s happening.

Enhancing World Language Instruction With AI Image Generators — from eduoptia.org by Rachel Paparone

By crafting an AI prompt in the target language to create an image, students can get immediate feedback on their communication skills.

Educators are, perhaps rightfully so, cautious about incorporating AI in their classrooms. With thoughtful implementation, however, AI image generators, with their ability to use any language, can provide powerful ways for students to engage with the target language and increase their proficiency.

AI in the Classroom: A Teacher’s Toolkit for Transformation — from esheninger.blogspot.com by Eric Sheninger

While AI offers numerous benefits, it’s crucial to remember that it is a tool to empower educators, not replace them. The human connection between teacher and student remains central to fostering creativity, critical thinking, and social-emotional development. The role of teachers will shift towards becoming facilitators, curators, and mentors who guide students through personalized learning journeys. By harnessing the power of AI, educators can create dynamic and effective classrooms that cater to each student’s individual needs. This paves the way for a more engaging and enriching learning experience that empowers students to thrive.

Teachers Are Using AI to Create New Worlds, Help Students with Homework, and Teach English — from themarkup.org by Ross Teixeira; via Matthew Tower

Around the world, these seven teachers are making AI work for them and their students

In this article, seven teachers across the world share their insights on AI tools for educators. You will hear a host of varied opinions and perspectives on everything from whether AI could hasten the decline of learning foreign languages to whether AI-generated lesson plans are an infringement on teachers’ rights. A common theme emerged from those we spoke with: just as the internet changed education, AI tools are here to stay, and it is prudent for teachers to adapt.

Teachers Desperately Need AI Training. How Many Are Getting It? — from edweek.org by Lauraine Langreo

Even though it’s been more than a year since ChatGPT made a big splash in the K-12 world, many teachers say they are still not receiving any training on using artificial intelligence tools in the classroom.

More than 7 in 10 teachers said they haven’t received any professional development on using AI in the classroom, according to a nationally representative EdWeek Research Center survey of 953 educators, including 553 teachers, conducted between Jan. 31 and March 4.

From DSC:

This article mentioned the following resource:

Artificial Intelligence Explorations for Educators — from iste.org

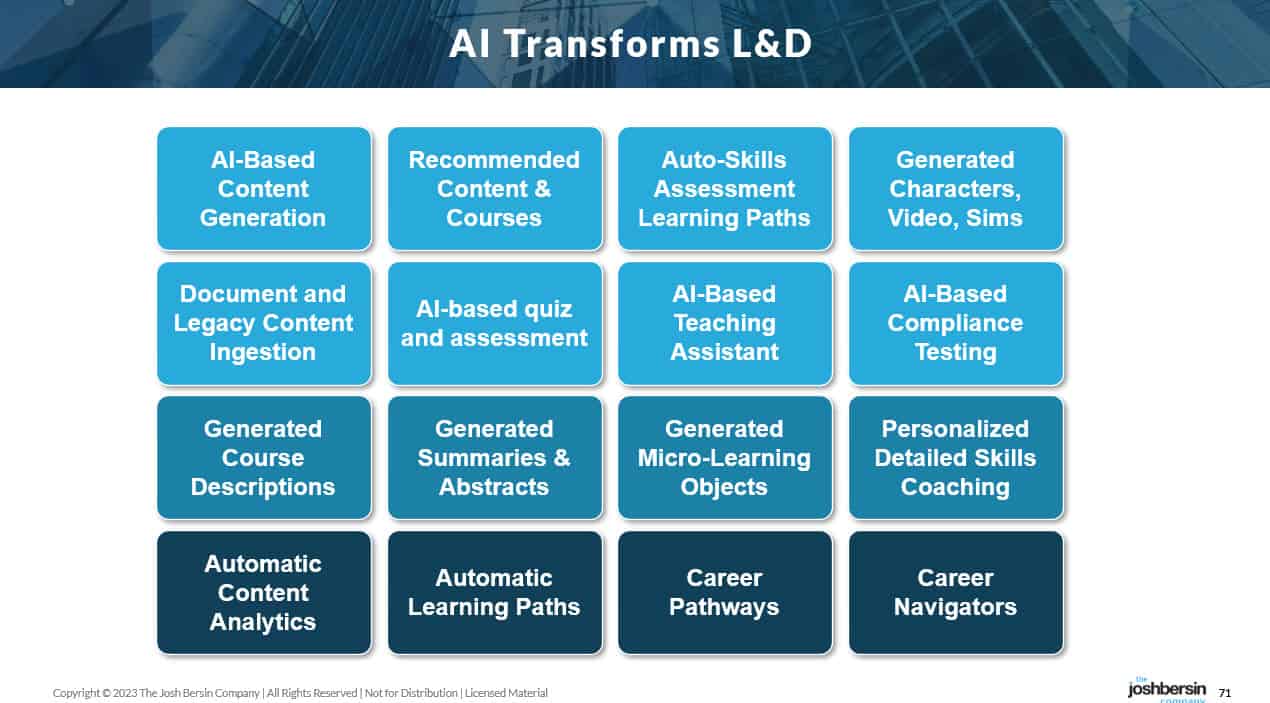

The $340 Billion Corporate Learning Industry Is Poised For Disruption — from joshbersin.com by Josh Bersin

What if, for example, the corporate learning system knew who you were and you could simply ask it a question and it would generate an answer, a series of resources, and a dynamic set of learning objects for you to consume? In some cases you’ll take the answer and run. In other cases you’ll pore through the content. And in other cases you’ll browse through the course and take the time to learn what you need.

And suppose all this happened in a totally personalized way. So you didn’t see a “standard course” but a special course based on your level of existing knowledge?

This is what AI is going to bring us. And yes, it’s already happening today.

GTC March 2024 Keynote with NVIDIA CEO Jensen Huang

Also relevant/see:

- NVIDIA Launches Blackwell-Powered DGX SuperPOD for Generative AI Supercomputing at Trillion-Parameter Scale

Scales to Tens of Thousands of Grace Blackwell Superchips Using Most Advanced NVIDIA Networking, NVIDIA Full-Stack AI Software, and Storage; Features up to 576 Blackwell GPUs Connected as One With NVIDIA NVLink; NVIDIA System Experts Speed Deployment for Immediate AI Infrastructure

- NVIDIA Unveils Digital Blueprint for Building Next-Gen Data Centers

Omniverse digital twin supported by Ansys, Cadence, PATCH MANAGER, Schneider Electric, Vertiv and more, setting foundation for highly efficient AI infrastructure.

- NVIDIA Digital Human Technologies Bring AI Characters to Life

Leading AI Developers Use Suite of NVIDIA Technologies to Create Lifelike Avatars and Dynamic Characters for Everything From Games to Healthcare, Financial Services and Retail Applications

- NVIDIA Announces Omniverse Cloud APIs to Power Wave of Industrial Digital Twin Software Tools

Ansys, Cadence, Hexagon, Microsoft, Rockwell Automation, Siemens, Trimble Adopt Omniverse Technologies to Help Customers Design, Simulate, Build and Operate Physically Based Digital Twins

- Climate Pioneers: 3 Startups Harnessing NVIDIA’s AI and Earth-2 Platforms

NVIDIA Inception members Tomorrow.io, ClimaSens and north.io specialize in extreme weather prediction, advance climate action across the globe.

Today is the beginning of our moonshot to solve embodied AGI in the physical world. I’m so excited to announce Project GR00T, our new initiative to create a general-purpose foundation model for humanoid robot learning.

The GR00T model will enable a robot to understand multimodal… pic.twitter.com/EqN19Z3cXH

— Jim Fan (@DrJimFan) March 18, 2024

NVIDIA just announced GR00T.

It will enable a robot to understand multimodal instructions like language, video, and motion.

Very soon we will see them cooking, preparing coffee, in supermarkets, changing tires, etc. pic.twitter.com/3PptgNS1Yi

— Barsee (@heyBarsee) March 19, 2024

How AI Is Already Transforming the News Business — from politico.com by Jack Shafer

An expert explains the promise and peril of artificial intelligence.

The early vibrations of AI have already been shaking the newsroom. One downside of the new technology surfaced at CNET and Sports Illustrated, where editors let AI run amok with disastrous results. Elsewhere in news media, AI is already writing headlines, managing paywalls to increase subscriptions, performing transcriptions, turning stories into audio feeds, discovering emerging stories, fact checking, copy editing and more.

Felix M. Simon, a doctoral candidate at Oxford, recently published a white paper about AI’s journalistic future that eclipses many early studies. Swinging a bat from a crouch that is neither doomer nor Utopian, Simon heralds both the downsides and promise of AI’s introduction into the newsroom and the publisher’s suite.

Unlike earlier technological revolutions, AI is poised to change the business at every level. It will become — if it already isn’t — the beginning of most story assignments and will become, for some, the new assignment editor. Used effectively, it promises to make news more accurate and timely. Used frivolously, it will spawn an ocean of spam. Wherever the production and distribution of news can be automated or made “smarter,” AI will surely step up. But the future has not yet been written, Simon counsels. AI in the newsroom will be only as bad or good as its developers and users make it.

Also see:

Artificial Intelligence in the News: How AI Retools, Rationalizes, and Reshapes Journalism and the Public Arena — from cjr.org by Felix Simon

TABLE OF CONTENTS

- Executive Summary

- Introduction

- Chapter I: AI in the News: Reshaping Production and Distribution?

- Chapter II: Platform Companies and AI in the News

- Chapter III: Drawing the Loose Ends Together

- Conclusion

Nvidia just launched Chat with RTX

It leaves ChatGPT in the dust.

Here are 7 incredible things RTX can do: pic.twitter.com/H6K4oJtNcH

— Poonam Soni (@CodeByPoonam) March 2, 2024

EMO: Emote Portrait Alive – Generating Expressive Portrait Videos with Audio2Video Diffusion Model under Weak Conditions — from humanaigc.github.io by Linrui Tian, Qi Wang, Bang Zhang, and Liefeng Bo

We proposed EMO, an expressive audio-driven portrait-video generation framework. Input a single reference image and the vocal audio, e.g. talking and singing, our method can generate vocal avatar videos with expressive facial expressions, and various head poses, meanwhile, we can generate videos with any duration depending on the length of input video.

Adobe previews new cutting-edge generative AI tools for crafting and editing custom audio — from blog.adobe.com by the Adobe Research Team

New experimental work from Adobe Research is set to change how people create and edit custom audio and music. An early-stage generative AI music generation and editing tool, Project Music GenAI Control allows creators to generate music from text prompts, and then have fine-grained control to edit that audio for their precise needs.

“With Project Music GenAI Control, generative AI becomes your co-creator. It helps people craft music for their projects, whether they’re broadcasters, or podcasters, or anyone else who needs audio that’s just the right mood, tone, and length,” says Nicholas Bryan, Senior Research Scientist at Adobe Research and one of the creators of the technologies.

How AI copyright lawsuits could make the whole industry go extinct — from theverge.com by Nilay Patel

The New York Times’ lawsuit against OpenAI is part of a broader, industry-shaking copyright challenge that could define the future of AI.

There’s a lot going on in the world of generative AI, but maybe the biggest is the increasing number of copyright lawsuits being filed against AI companies like OpenAI and Stability AI. So for this episode, we brought on Verge features editor Sarah Jeong, who’s a former lawyer just like me, and we’re going to talk about those cases and the main defense the AI companies are relying on in those copyright cases: an idea called fair use.

FCC officially declares AI-voiced robocalls illegal — from techcrunch.com by Devin Coldewey

The FCC’s war on robocalls has gained a new weapon in its arsenal with the declaration of AI-generated voices as “artificial” and therefore definitely against the law when used in automated calling scams. It may not stop the flood of fake Joe Bidens that will almost certainly trouble our phones this election season, but it won’t hurt, either.

The new rule, contemplated for months and telegraphed last week, isn’t actually a new rule — the FCC can’t just invent them with no due process. Robocalls are just a new term for something largely already prohibited under the Telephone Consumer Protection Act: artificial and pre-recorded messages being sent out willy-nilly to every number in the phone book (something that still existed when they drafted the law).

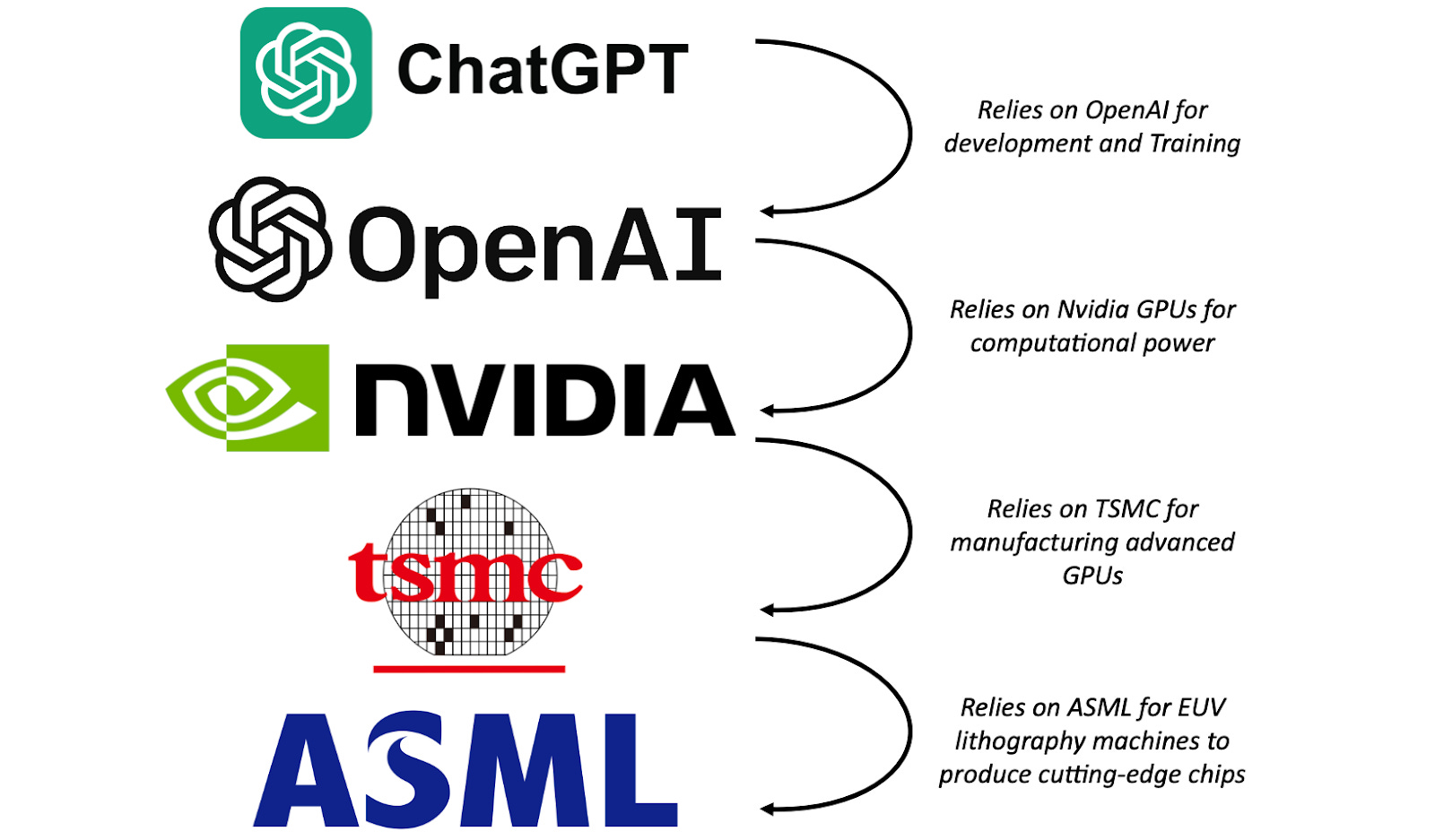

EIEIO…Chips Ahoy! — from dashmedia.co by Michael Moe, Brent Peus, and Owen Ritz

Here Come the AI Worms — from wired.com by Matt Burgess

Security researchers created an AI worm in a test environment that can automatically spread between generative AI agents—potentially stealing data and sending spam emails along the way.

Now, in a demonstration of the risks of connected, autonomous AI ecosystems, a group of researchers have created one of what they claim are the first generative AI worms—which can spread from one system to another, potentially stealing data or deploying malware in the process. “It basically means that now you have the ability to conduct or to perform a new kind of cyberattack that hasn’t been seen before,” says Ben Nassi, a Cornell Tech researcher behind the research.

Building Better Civil Justice Systems Isn’t Just About The Funding — from lawnext.com by Jess Lu and Mark Chandler

Justice tech — legal tech that helps low-income folks with no or some ability to pay, that assists the lawyers who serve those folks, and that makes the courts more efficient and effective — must contend with a higher hurdle than wooing Silicon Valley VCs: the civil justice system itself.

A checkerboard of technology systems and data infrastructures across thousands of local court jurisdictions makes it nearly impossible to develop tools with the scale needed to be sustainable. Courts are themselves a key part of the access to justice problem: opaque, duplicative and confusing court forms and burdensome filing processes make accessing the civil justice system deeply inefficient for the sophisticated, and an impenetrable maze for the 70+% of civil litigants who don’t have a lawyer.

Speaking of legaltech, also see:

“Noxtua,” Europe’s first sovereign legal AI — from eureporter.co

Noxtua, the first sovereign European legal AI with its proprietary Language Model, allows lawyers in corporations and law firms to benefit securely from the advantages of generative AI. The Berlin-based AI startup Xayn and the largest German business law firm CMS are developing Noxtua as a Legal AI with its own Legal Large Language Model and AI assistant. Lawyers from corporations and law firms can use the Noxtua chat to ask questions about legal documents, analyze them, check them for compliance with company guidelines, (re)formulate texts, and have summaries written. The Legal Copilot, which specializes in legal texts, stands out as an independent and secure alternative from Europe to the existing US offerings.

Generative AI in the legal industry: The 3 waves set to change how the business works — from thomsonreuters.com by Tom Shepherd and Stephanie Lomax

Gen AI is game-changing technology, directly impacting the way legal work is done and the current law firm-client business model; and while much remains unsettled, within 10 years, Gen AI is likely to change corporate legal departments and law firms in profound and unique ways.

Generative artificial intelligence (Gen AI) isn’t a futuristic technology — it’s here now, and it’s already impacting the legal industry in many ways.

Hmmmm….on this next one…

New legal AI venture promises to show how judges think — from reuters.com by Sara Merken

Feb 29 (Reuters) – A new venture by a legal technology entrepreneur and a former Kirkland & Ellis partner says it can use artificial intelligence to help lawyers understand how individual judges think, allowing them to tailor their arguments and improve their courtroom results.

The Toronto-based legal research startup, Bench IQ, was founded by Jimoh Ovbiagele, the co-founder of now-shuttered legal research company ROSS Intelligence, alongside former ROSS senior software engineer Maxim Isakov and former Kirkland bankruptcy partner Jeffrey Gettleman.

How a Hollywood Director Uses AI to Make Movies — from every.to by Dan Shipper

Dave Clark shows us the future of AI filmmaking

Dave told me that he couldn’t have made Borrowing Time without AI—it’s an expensive project that traditional Hollywood studios would never bankroll. But after Dave’s short went viral, major production houses approached him to make it a full-length movie. I think this is an excellent example of how AI is changing the art of filmmaking, and I came out of this interview convinced that we are on the brink of a new creative age.

We dive deep into the world of AI tools for image and video generation, discussing how aspiring filmmakers can use them to validate their ideas, and potentially even secure funding if they get traction. Dave walks me through how he has integrated AI into his movie-making process, and as we talk, we make a short film featuring Nicolas Cage using a haunted roulette ball to resurrect his dead movie career, live on the show.
