The Learning and Employment Records (LER) Report for 2026: Building the infrastructure between learning and work — from smartresume.com; with thanks to Paul Fain for this resource

Executive Summary (excerpt)

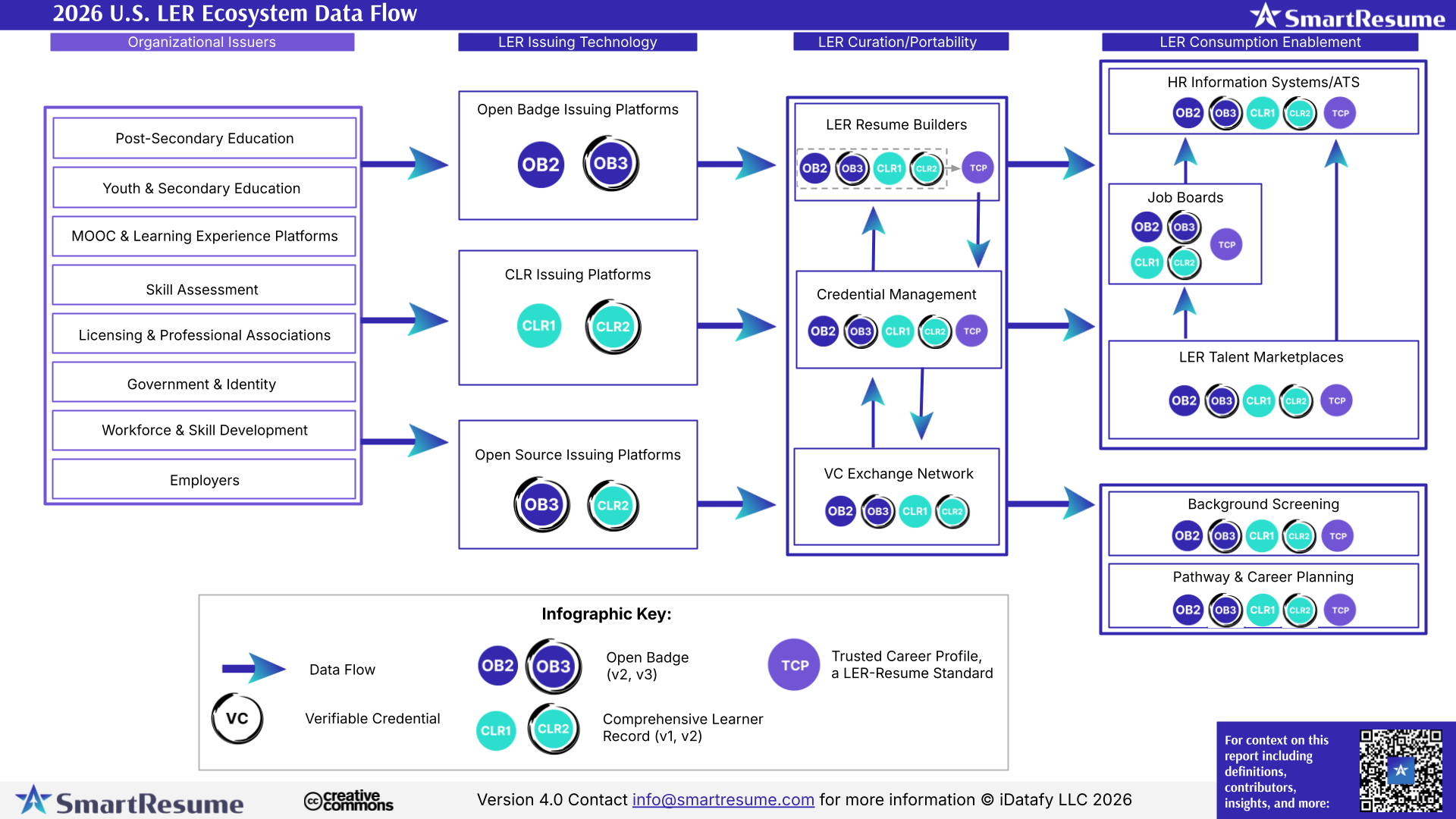

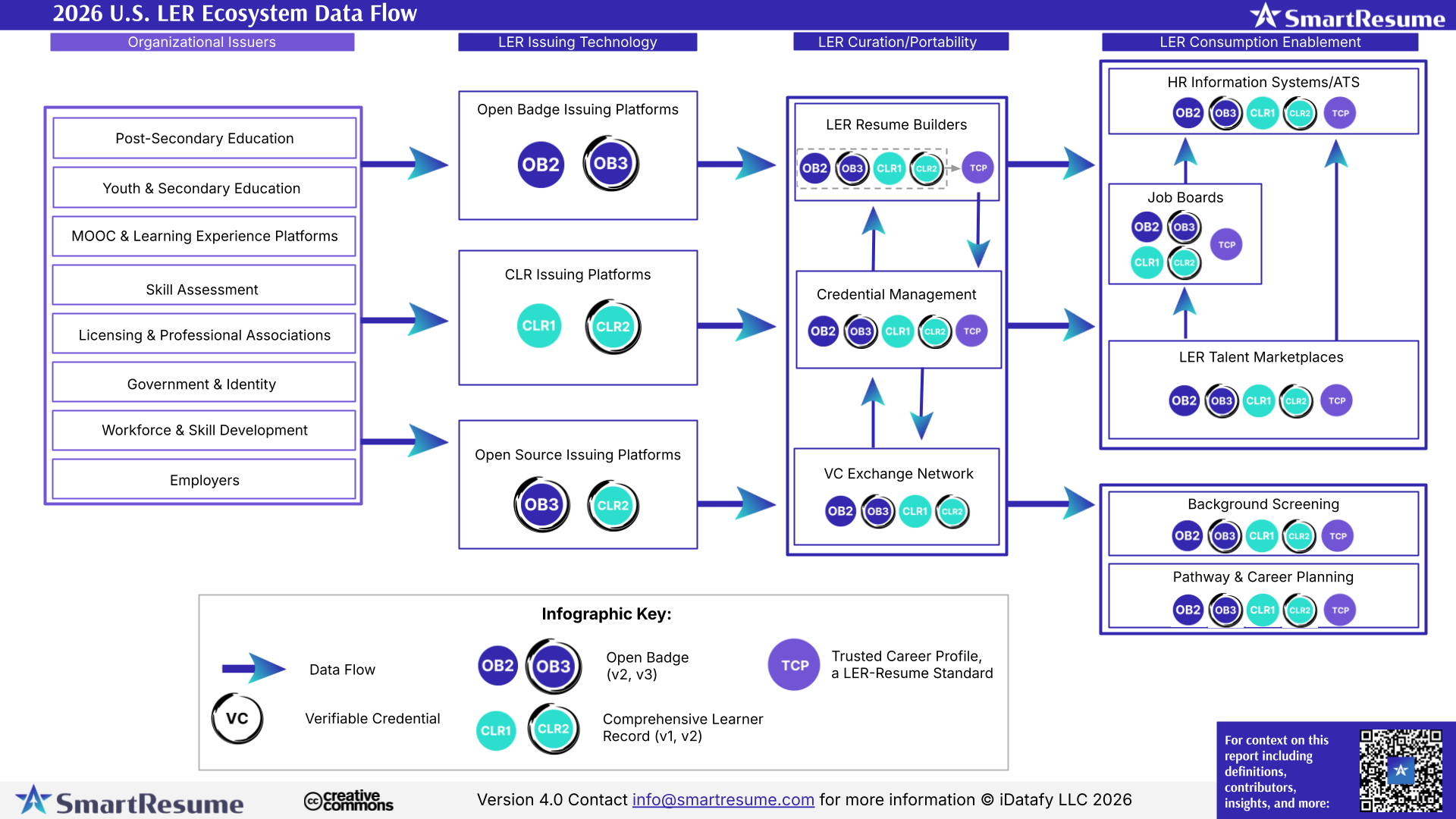

This report documents a clear transition now underway: LERs are moving from small experiments to systems people and organizations expect to rely on. Adoption remains early and uneven, but the forces reshaping the ecosystem are no longer speculative. Federal policy signals, state planning cycles, standards maturation, and employer behavior are aligning in ways that suggest 2026 will mark a shift from exploration to execution.

Across interviews with federal leaders, state CIOs, standards bodies, and ecosystem builders, a consistent theme emerged: the traditional model—where institutions control learning and employment records—no longer fits how people move through education and work. In its place, a new model is being actively designed—one in which individuals hold portable, verifiable records that systems can trust without centralizing control.

Most states are not yet operating this way. But planning timelines, RFP language, and federal signals indicate that many will begin building toward this model in early 2026.

As the ecosystem matures, another insight becomes unavoidable: records alone are not enough. Value emerges only when trusted records can be interpreted through shared skill languages, reused across contexts, and embedded into the systems and marketplaces where decisions are made.

Learning and Employment Records are not a product category. They are a data layer—one that reshapes how learning, work, and opportunity connect over time.

This report is written for anyone seeking to understand how LERs are beginning to move from concept to practice. Whether readers are new to the space or actively exploring implementation, the report focuses on observable signals, emerging patterns, and the practical conditions required to move from experimentation toward durable infrastructure.

…

…

“The building blocks for a global, interoperable skills ecosystem are already in place. As education and workforce alignment accelerates, the path toward trusted, machine-readable credentials is clear. The next phase depends on credentials that carry value across institutions, industries, states, and borders; credentials that move with learners wherever their education and careers take them. The question now isn’t whether to act, but how quickly we move.”

– Curtiss Barnes, Chief Executive Officer, 1EdTech

The item above comes from Paul Fain’s recent post, which includes the following excerpt:

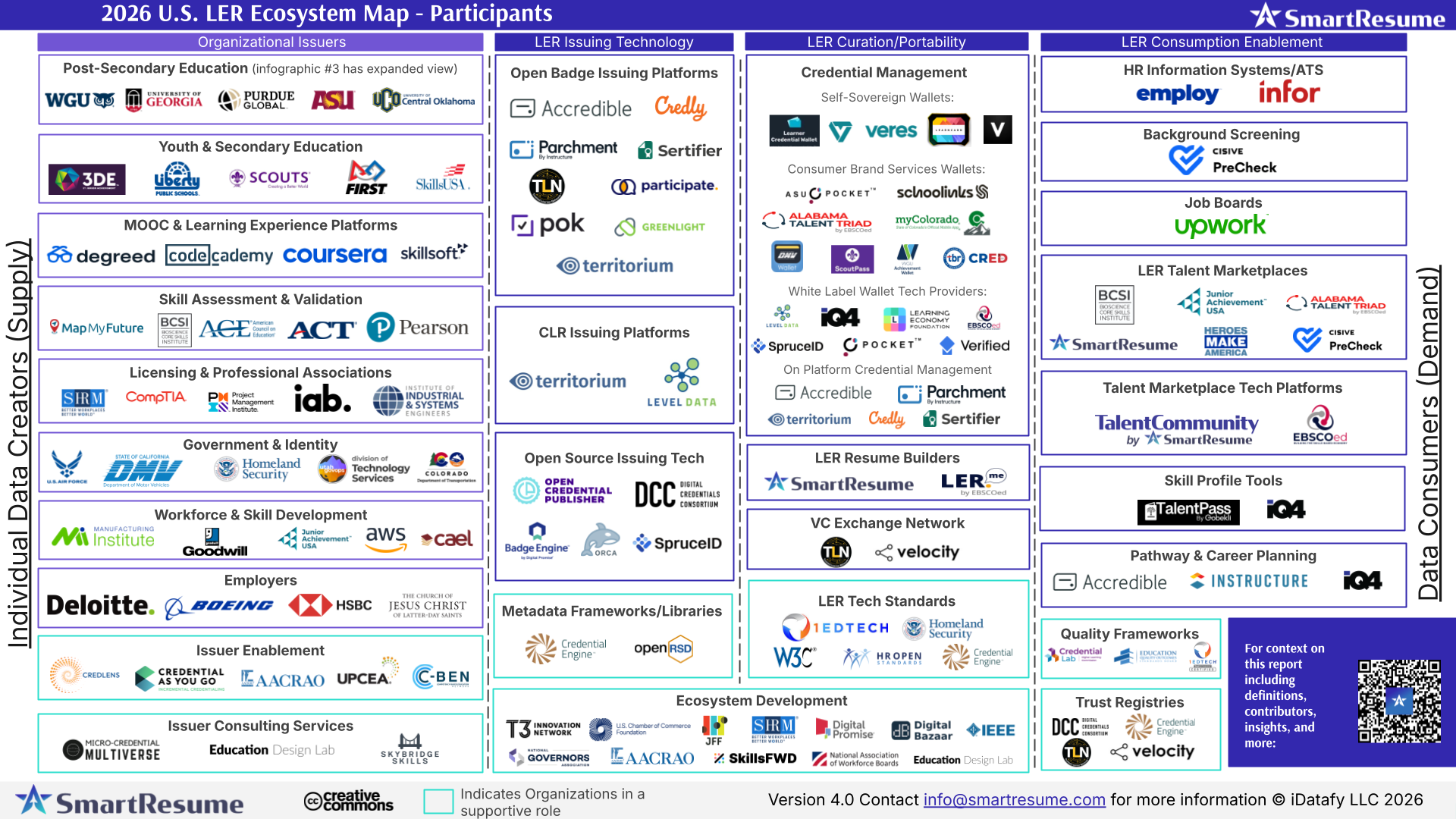

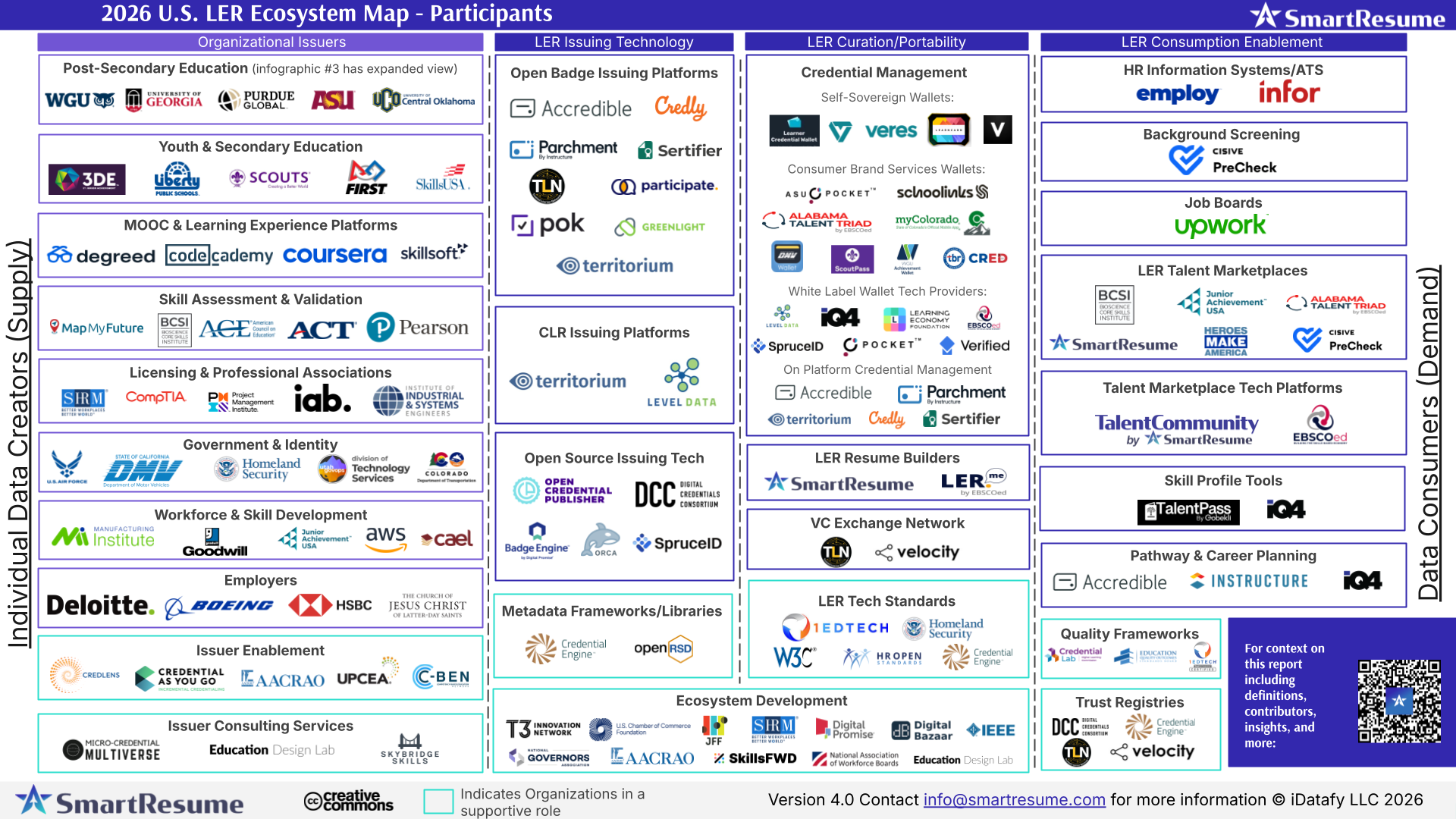

SmartResume just published a guide for making sense of this rapidly expanding landscape. The LER Ecosystem Report was produced in partnership with AACRAO, Credential Engine, 1EdTech, HR Open Standards, and the U.S. Chamber of Commerce Foundation. It was based on interviews and feedback gathered over three years from 100+ leaders across education, workforce, government, standards bodies, and tech providers.

The tools are available now to create the sort of interoperable ecosystem that can make talent marketplaces a reality, the report argues. Meanwhile, federal policy moves and bipartisan attention to LERs are accelerating action at the state level.

“For state leaders, this creates a practical inflection point,” says the report. “LERs are shifting from an innovation discussion to an infrastructure planning conversation.”