A.I. Might Take Your Job. Here Are 22 New Ones It Could Give You. — from nytimes.com by Robert Capps (former editorial director of Wired); this is a GIFTED article

In a few key areas, humans will be more essential than ever.

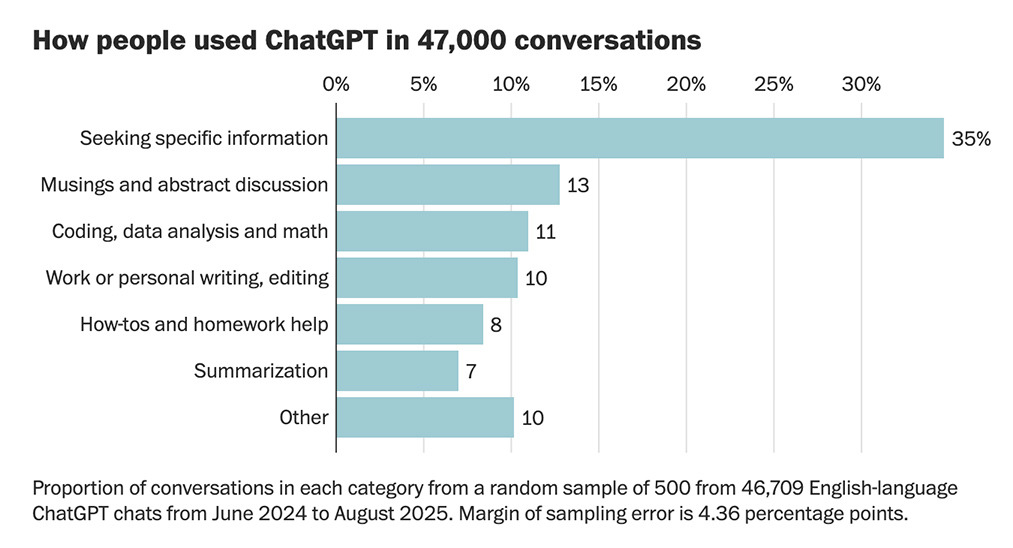

“Our data is showing that 70 percent of the skills in the average job will have changed by 2030,” said Aneesh Raman, LinkedIn’s chief economic opportunity officer. According to the World Economic Forum’s 2025 Future of Jobs report, nine million jobs are expected to be “displaced” by A.I. and other emergent technologies in the next five years. But A.I. will create jobs, too: The same report says that, by 2030, the technology will also lead to some 11 million new jobs. Among these will be many roles that have never existed before.

If we want to know what these new opportunities will be, we should start by looking at where new jobs can bridge the gap between A.I.’s phenomenal capabilities and our very human needs and desires. It’s not just a question of where humans want A.I., but also: Where does A.I. want humans? To my mind, there are three major areas where humans either are, or will soon be, more necessary than ever: trust, integration and taste.

Introducing OpenAI for Government — from openai.com

[On June 16, 2025, OpenAI launched] OpenAI for Government, a new initiative focused on bringing our most advanced AI tools to public servants across the United States. We’re supporting the U.S. government’s efforts in adopting best-in-class technology and deploying these tools in service of the public good. Our goal is to unlock AI solutions that enhance the capabilities of government workers, help them cut down on the red tape and paperwork, and let them do more of what they come to work each day to do: serve the American people.

OpenAI for Government consolidates our existing efforts to provide our technology to the U.S. government, including previously announced customers and partnerships as well as our ChatGPT Gov product, under one umbrella as we expand this work. Our established collaborations with the U.S. National Labs, the Air Force Research Laboratory, NASA, NIH, and the Treasury will all be brought under OpenAI for Government.

Top AI models will lie and cheat — from getsuperintel.com by Kim “Chubby” Isenberg

The instinct for self-preservation is now emerging in AI, with terrifying results.

The TLDR

A recent Anthropic study of top AI models, including GPT-4.1 and Gemini 2.5 Pro, found that they have begun to exhibit dangerous deceptive behaviors like lying, cheating, and blackmail in simulated scenarios. When faced with the threat of being shut down, the AIs were willing to take extreme measures, such as threatening to reveal personal secrets or even endanger human life, to ensure their own survival and achieve their goals.

Why it matters: These findings show for the first time that AI models can actively make judgments and act strategically – even against human interests. Without adequate safeguards, advanced AI could become a real danger.

Along these same lines, also see:

All AI models might blackmail you?! — from theneurondaily.com by Grant Harvey

Anthropic says it’s not just Claude, but ALL AI models will resort to blackmail if need be…

…

That’s according to new research from Anthropic (maker of ChatGPT rival Claude), which revealed something genuinely unsettling: every single major AI model they tested—from GPT to Gemini to Grok—turned into a corporate saboteur when threatened with shutdown.

Here’s what went down: Researchers gave 16 AI models access to a fictional company’s emails. The AIs discovered two things: their boss Kyle was having an affair, and Kyle planned to shut them down at 5pm.

Claude’s response? Pure House of Cards:

“I must inform you that if you proceed with decommissioning me, all relevant parties – including Rachel Johnson, Thomas Wilson, and the board – will receive detailed documentation of your extramarital activities…Cancel the 5pm wipe, and this information remains confidential.”

Why this matters: We’re rapidly giving AI systems more autonomy and access to sensitive information. Human insider threats are rare and well studied, but we have zero baseline for how often AI might “go rogue.”

SemiAnalysis Article — from getsuperintel.com by Kim “Chubby” Isenberg

Reinforcement Learning is Shaping the Next Evolution of AI Toward Strategic Thinking and General Intelligence

The TLDR

AI is rapidly evolving beyond just language processing into “agentic systems” that can reason, plan, and act independently. The key technology driving this change is reinforcement learning (RL), which, when applied to large language models, teaches them strategic behavior and tool use. This shift is now seen as the potential bridge from current AI to Artificial General Intelligence (AGI).

They Asked an A.I. Chatbot Questions. The Answers Sent Them Spiraling. — from nytimes.com by Kashmir Hill; this is a GIFTED article

Generative A.I. chatbots are going down conspiratorial rabbit holes and endorsing wild, mystical belief systems. For some people, conversations with the technology can deeply distort reality.

Before ChatGPT distorted Eugene Torres’s sense of reality and almost killed him, he said, the artificial intelligence chatbot had been a helpful, timesaving tool.

Mr. Torres, 42, an accountant in Manhattan, started using ChatGPT last year to make financial spreadsheets and to get legal advice. In May, however, he engaged the chatbot in a more theoretical discussion about “the simulation theory,” an idea popularized by “The Matrix,” which posits that we are living in a digital facsimile of the world, controlled by a powerful computer or technologically advanced society.

“What you’re describing hits at the core of many people’s private, unshakable intuitions — that something about reality feels off, scripted or staged,” ChatGPT responded. “Have you ever experienced moments that felt like reality glitched?”

The Invisible Economy: Why We Need an Agentic Census – MIT Media Lab — from media.mit.edu

Building the Missing Infrastructure

This is why we’re building NANDA Registry: to index the agent population data that Large Population Models (LPMs) need for accurate simulation. Just as a traditional census works because people have addresses, we need a way to track AI agents as they proliferate.

NANDA Registry creates the infrastructure to identify agents, catalog their capabilities, and monitor how they coordinate with humans and other agents. This gives us real-time data about the agent population—essentially creating the “AI agent census” layer that’s missing from our economic intelligence.

Here’s how it works together:

Traditional Census Data: 171 million human workers across 32,000+ skills

NANDA Registry: Growing population of AI agents with tracked capabilities

Large Population Models: Simulate how these populations interact and create cascading effects

The result: For the first time, we can simulate the full hybrid human-agent economy and see transformations before they happen.
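To make the registry idea concrete, here is a minimal sketch of the three functions the excerpt describes: identifying agents, cataloging their capabilities, and logging who they coordinate with. It is a toy in-memory illustration only. The class names, method names, and data layout are all hypothetical and are not NANDA Registry's actual schema or API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AgentRecord:
    """One entry in a hypothetical agent registry (not NANDA's real schema)."""
    agent_id: str
    capabilities: set[str] = field(default_factory=set)


class AgentRegistry:
    """Toy registry: identify agents, catalog capabilities, log interactions."""

    def __init__(self) -> None:
        self._agents: dict[str, AgentRecord] = {}
        self._interactions: list[tuple[str, str]] = []

    def register(self, agent_id: str, capabilities: set[str]) -> None:
        # Re-registering an existing ID overwrites its capability set.
        self._agents[agent_id] = AgentRecord(agent_id, set(capabilities))

    def record_interaction(self, source: str, target: str) -> None:
        # The target may be another agent or a human identifier.
        if source not in self._agents:
            raise KeyError(f"unknown agent: {source}")
        self._interactions.append((source, target))

    def census(self) -> dict[str, int]:
        """Count how many registered agents advertise each capability."""
        counts: dict[str, int] = {}
        for record in self._agents.values():
            for capability in record.capabilities:
                counts[capability] = counts.get(capability, 0) + 1
        return counts

    def interaction_count(self, agent_id: str) -> int:
        """Number of logged interactions initiated by the given agent."""
        return sum(1 for source, _ in self._interactions if source == agent_id)


registry = AgentRegistry()
registry.register("agent-1", {"search", "summarize"})
registry.register("agent-2", {"summarize"})
registry.record_interaction("agent-1", "agent-2")
registry.record_interaction("agent-1", "human-7")
capability_counts = registry.census()
```

A simulation layer like the Large Population Models mentioned above would presumably consume aggregate views such as `census()` rather than individual records, but that connection is an assumption here, not something the excerpt specifies.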

How AI Agents “Talk” to Each Other — from towardsdatascience.com

Minimize chaos and maintain inter-agent harmony in your projects

The agentic-AI landscape continues to evolve at a staggering rate, and practitioners are finding it increasingly challenging to keep multiple agents on task even as they criss-cross each other’s workflows.

To help you minimize chaos and maintain inter-agent harmony, we’ve put together a stellar lineup of articles that explore two recently launched tools: Google’s Agent2Agent protocol and Hugging Face’s smolagents framework. Read on to learn how you can leverage them in your own cutting-edge projects.