Uh-oh: Silicon Valley is building a Chinese-style social credit system — from fastcompany.com by Mike Elgan

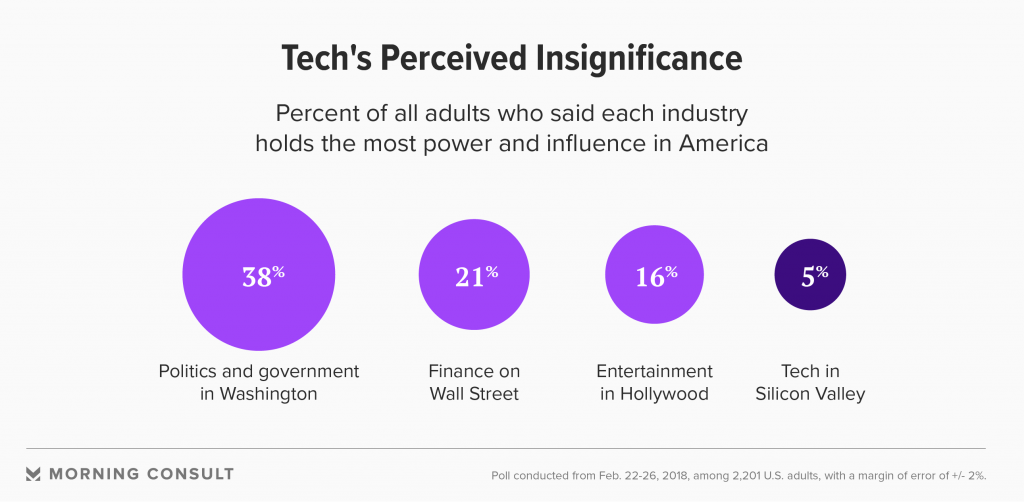

In China, scoring citizens’ behavior is official government policy. U.S. companies are increasingly doing something similar, outside the law.

Excerpts (emphasis DSC):

Have you heard about China’s social credit system? It’s a technology-enabled, surveillance-based nationwide program designed to nudge citizens toward better behavior. The ultimate goal is to “allow the trustworthy to roam everywhere under heaven while making it hard for the discredited to take a single step,” according to the Chinese government.

In place since 2014, the social credit system is a work in progress that could evolve by next year into a single, nationwide point system for all Chinese citizens, akin to a financial credit score. It aims to punish for transgressions that can include membership in or support for the Falun Gong or Tibetan Buddhism, failure to pay debts, excessive video gaming, criticizing the government, late payments, failing to sweep the sidewalk in front of your store or house, smoking or playing loud music on trains, jaywalking, and other actions deemed illegal or unacceptable by the Chinese government.

…

IT CAN HAPPEN HERE

Many Westerners are disturbed by what they read about China’s social credit system. But such systems, it turns out, are not unique to China. A parallel system is developing in the United States, in part as the result of Silicon Valley and technology-industry user policies, and in part by surveillance of social media activity by private companies.

Here are some of the elements of America’s growing social credit system.

If current trends hold, it’s possible that in the future a majority of misdemeanors and even some felonies will be punished not by Washington, D.C., but by Silicon Valley. It’s a slippery slope away from democracy and toward corporatocracy.

From DSC:

Who’s to say what gains a citizen points and what subtracts from their score? If one believes a certain thing, is that a plus or a minus? And what might be tied to someone’s score? The ability to obtain food? Medicine/healthcare? Clothing? Social Security payments? Other?

We are giving a huge amount of power to a handful of corporations…trust comes into play…at least for me. Even internally, the big tech companies seem to be struggling with the ethical ramifications of what they’re working on (in a variety of areas).

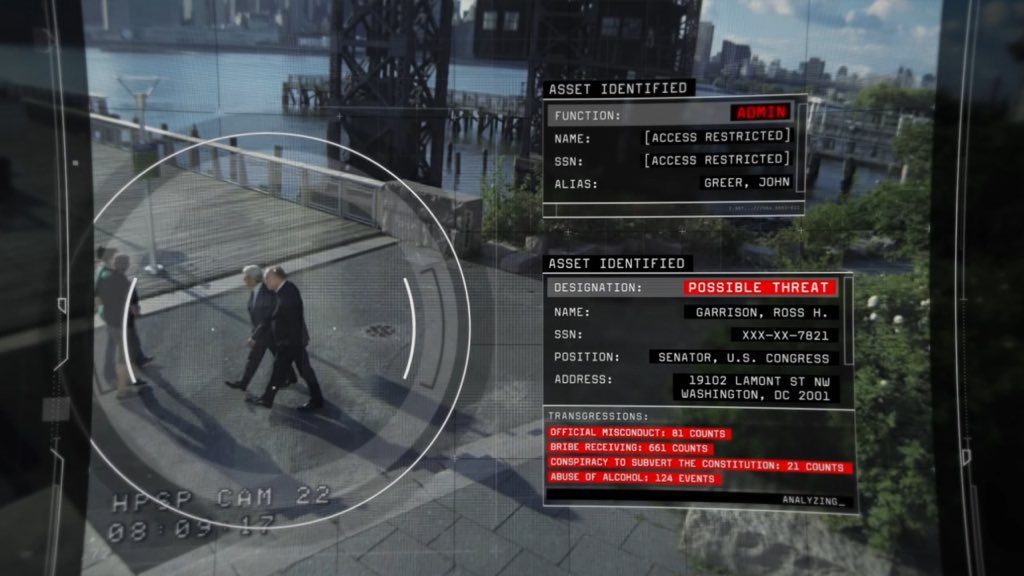

Is the stage being set for a “Person of Interest” Version 2.0?