How Your Learners *Actually* Learn with AI — from drphilippahardman.substack.com by Dr. Philippa Hardman

What 37.5 million AI chats show us about how learners use AI at the end of 2025 — and what this means for how we design & deliver learning experiences in 2026

Last week, Microsoft released a similar analysis of a whopping 37.5 million Copilot conversations. These conversations took place on the platform from January to September 2025, providing us with a window into if and how AI use in general – and AI use among learners specifically – has evolved in 2025.

Microsoft’s mass behavioural data gives us a detailed, global glimpse into what learners are actually doing across devices, times of day and contexts. The picture that emerges is pretty clear and largely consistent with what OpenAI told us back in the summer:

AI isn’t functioning primarily as an “answers machine”: the majority of us use AI as a tool to personalise and differentiate generic learning experiences and – ultimately – to augment human learning.

Let’s dive in!

Learners don’t “decide” to use AI anymore. They assume it’s there, like search, like spellcheck, like calculators. The question has shifted from “should I use this?” to “how do I use this effectively?”

8 AI Agents Every HR Leader Needs To Know In 2026 — from forbes.com by Bernard Marr

So where do you start? There are many agentic tools and platforms for AI tasks on the market, and the most effective approach is to focus on practical, high-impact workflows. So here, I’ll look at some of the most compelling use cases, as well as provide an overview of the tools that can help you quickly deliver tangible wins.

…

Some of the strongest opportunities in HR include:

- Workforce management, administering job satisfaction surveys, monitoring and tracking performance targets, scheduling interventions, and managing staff benefits, medical leave, and holiday entitlement.

- Recruitment screening, automatically generating and posting job descriptions, filtering candidates, ranking applicants against defined criteria, identifying the strongest matches, and scheduling interviews.

- Employee onboarding, issuing new hires with contracts and paperwork, guiding them to onboarding and training resources, tracking compliance and completion rates, answering routine enquiries, and escalating complex cases to human HR specialists.

- Training and development, identifying skills gaps, providing self-service access to upskilling and reskilling opportunities, creating personalized learning pathways aligned with roles and career goals, and tracking progress toward completion.

AI Has Landed in Education: Now What? — from learningfuturesdigest.substack.com by Dr. Philippa Hardman

Here’s what’s shaped the AI-education landscape in the last month:

- The AI Speed Trap is [still] here: AI adoption in L&D is basically won (87%)—but it’s being used to ship faster, not learn better (84% prioritising speed), scaling “more of the same” at pace.

- AI tutors risk a “pedagogy of passivity”: emerging evidence suggests tutoring bots can reduce cognitive friction and pull learners down the ICAP spectrum—away from interactive/constructive learning toward efficient consumption.

- Singapore + India are building what the West lacks: they’re treating AI as national learning infrastructure—for resilience (Singapore) and access + language inclusion (India)—while Western systems remain fragmented and reactive.

- Agentic AI is the next pivot: early signs show a shift from AI as a content engine to AI as a learning partner—with UConn using agents to remove barriers so learners can participate more fully in shared learning.

- Moodle’s AI stance sends two big signals: the traditional learning ecosystem is fragmenting, and the concept of “user sovereignty” over AI is emerging.

Four strategies for implementing custom AIs that help students learn, not outsource — from educational-innovation.sydney.edu.au by Kria Coleman, Matthew Clemson, Laura Crocco and Samantha Clarke; via Derek Bruff

For Cogniti to be taken seriously, it needs to be woven into the structure of your unit and its delivery, both in class and on Canvas, rather than left on the side. This article shares practical strategies for implementing Cogniti in your teaching so that students:

- understand the context and purpose of the agent,

- know how to interact with it effectively,

- perceive its value as a learning tool over any other available AI chatbots, and

- engage in reflection and feedback.

In this post, we share four strategies to help introduce and integrate Cogniti in your teaching so that students understand their context, interact effectively, and see their value as customised learning companions.

Collection: Teaching with Custom AI Chatbots — from teaching.virginia.edu; via Derek Bruff

The default behaviors of popular AI chatbots don’t always align with our teaching goals. This collection explores approaches to designing AI chatbots for particular pedagogical purposes.

Example/excerpt:

- Not Your Default Chatbot: Five Teaching Applications of Custom AI Bots

Agile Learning

derekbruff.org/2025/10/01/five-teaching-applications-of-custom-ai-chatbots/

Beyond Infographics: How to Use Nano Banana to *Actually* Support Learning — from drphilippahardman.substack.com by Dr Philippa Hardman

Six evidence-based use cases to try in Google’s latest image-generating AI tool

While it’s true that Nano Banana generates better infographics than other AI models, the conversation has so far massively under-sold what’s actually different and valuable about this tool for those of us who design learning experiences.

What this means for our workflow:

Instead of the traditional “commission → wait → tweak → approve → repeat” cycle, Nano Banana enables an iterative, rapid-cycle design process where you can:

- Sketch an idea and see it refined in minutes.

- Test multiple visual metaphors for the same concept without re-briefing a designer.

- Build 10-image storyboards with perfect consistency by specifying the constraints once, not manually editing each frame.

- Implement evidence-based strategies (contrasting cases, worked examples, observational learning) that are usually too labour-intensive to produce at scale.

This shift—from “image generation as decoration” to “image generation as instructional scaffolding”—is what makes Nano Banana uniquely useful for the 10 evidence-based strategies below.

4 Simple & Easy Ways to Use AI to Differentiate Instruction — from mindfulaiedu.substack.com (Mindful AI for Education) by Dani Kachorsky, PhD

Designing for All Learners with AI and Universal Design for Learning

So this year, I’ve been exploring new ways that AI can help support students with disabilities—students on IEPs, learning plans, or 504s—and, honestly, it’s changing the way I think about differentiation in general.

As a quick note, a lot of what I’m finding applies just as well to English language learners or really to any students. One of the big ideas behind Universal Design for Learning (UDL) is that accommodations and strategies designed for students with disabilities are often just good teaching practices. When we plan instruction that’s accessible to the widest possible range of learners, everyone benefits. For example, UDL encourages explaining things in multiple modes—written, visual, auditory, kinesthetic—because people access information differently. I hear students say they’re “visual learners,” but I think everyone is a visual learner, and an auditory learner, and a kinesthetic learner. The more ways we present information, the more likely it is to stick.

So, with that in mind, here are four ways I’ve been using AI to differentiate instruction for students with disabilities (and, really, everyone else too):

The Periodic Table of AI Tools In Education To Try Today — from ictevangelist.com by Mark Anderson

What I’ve tried to do is bring together genuinely useful AI tools that I know are already making a difference.

For colleagues wanting to explore further, I’m sharing the list exactly as it appears in the table, including website links, grouped by category below. Please do check it out: along with links to all of the resources, I’ve also written a brief summary explaining what each of the tools does and how it can help.

Seven Hard-Won Lessons from Building AI Learning Tools — from linkedin.com by Louise Worgan

Last week, I wrapped up Dr Philippa Hardman’s intensive bootcamp on AI in learning design. Four conversations, countless iterations, and more than a few humbling moments later – here’s what I am left thinking about.

Finally Catching Up to the New Models — from michellekassorla.substack.com by Michelle Kassorla

There are some amazing things happening out there!

An aside: Google is working on a new vision for textbooks that can be easily differentiated, based on the beautiful success of NotebookLM. You can get on the waiting list for that tool by going to LearnYourWay.withgoogle.com.

…

Nano Banana Pro

Sticking with the Google tools for now, Nano Banana Pro (which you can use for free on Google’s AI Studio) is doing something that everyone has been waiting a long time for: it adds correct text to images.

Introducing AI assistants with memory — from perplexity.ai

The simple act of remembering is the crux of how we navigate the world: it shapes our experiences, informs our decisions, and helps us anticipate what comes next. For AI agents like Comet Assistant, that continuity leads to a more powerful, personalized experience.

Today we are announcing new personalization features to remember your preferences, interests, and conversations. Perplexity now synthesizes them automatically like memory, for valuable context on relevant tasks. Answers are smarter, faster, and more personalized, no matter how you work.

From DSC:

This should be important as we look at learning-related applications for AI.

For the last three days, my Substack has been in the top “Rising in Education” list. I realize this is based on a hugely flawed metric, but it still feels good.

– Michael G Wagner

I’m a Professor. A.I. Has Changed My Classroom, but Not for the Worse. — from nytimes.com by Carlo Rotella [this should be a gifted article]

My students’ easy access to chatbots forced me to make humanities instruction even more human.

Three Years from GPT-3 to Gemini 3 — from oneusefulthing.org by Ethan Mollick

From chatbots to agents

Three years ago, we were impressed that a machine could write a poem about otters. Less than 1,000 days later, I am debating statistical methodology with an agent that built its own research environment. The era of the chatbot is turning into the era of the digital coworker. To be very clear, Gemini 3 isn’t perfect, and it still needs a manager who can guide and check it. But it suggests that “human in the loop” is evolving from “human who fixes AI mistakes” to “human who directs AI work.” And that may be the biggest change since the release of ChatGPT.

Results May Vary — from aiedusimplified.substack.com by Lance Eaton, PhD

On Custom Instructions with GenAI Tools….

I’m sharing today about custom instructions and my use of them across several AI tools (paid versions of ChatGPT, Gemini, and Claude). I want to highlight what I’m doing and how it’s going, and to invite readers to share in the comments some of the custom instructions they find helpful.

I’ve been in a few conversations lately that remind me that not everyone knows about them, even some of the seasoned folks around GenAI and how you might set them up to better support your work. And, of course, they are, like all things GenAI, highly imperfect!

I’ll include and discuss each one below, but if you want to keep abreast of my custom instructions, I’ll be placing them here as I adjust and update them so folks can see the changes over time.

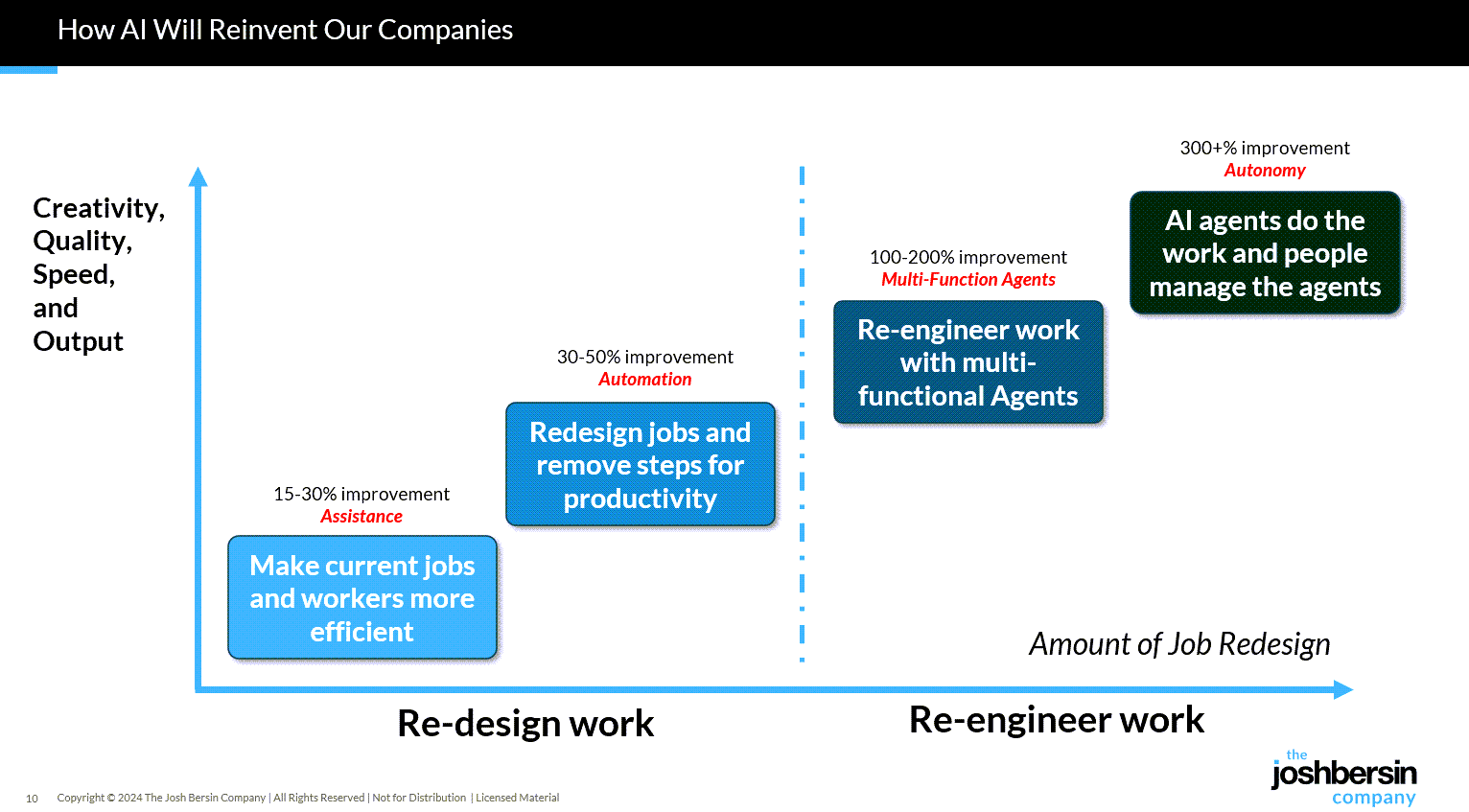

Gen AI Is Going Mainstream: Here’s What’s Coming Next — from joshbersin.com by Josh Bersin

I just completed nearly 60,000 miles of travel across Europe, Asia, and the Middle East meeting with hundreds of companies to discuss their AI strategies. While every company’s maturity is different, one thing is clear: AI as a business tool has arrived. It’s real and the use-cases are growing.

A new survey by Wharton shows that 46% of business leaders use Gen AI daily and 80% use it weekly. And among these users, 72% are measuring ROI and 74% report a positive return. HR, by the way, is the #3 department in use cases, only slightly behind IT and Finance.

What are companies getting out of all this? Productivity. The #1 use case, by far, is what we call “stage 1” usage – individual productivity.

From DSC:

Josh writes: “Many of our large clients are now implementing AI-native learning systems and seeing 30-40% reduction in staff with vast improvements in workforce enablement.”

While I get the appeal (and ROI) from management’s and shareholders’ perspective, this represents a growing concern for employment and people’s ability to earn a living.

And while I highly respect Josh and his work through the years, I disagree that we’re over the problems with AI and how people are using it:

Two years ago the NYT was trying to frighten us with stories of AI acting as a romance partner. Well those stories are over, and thanks to a $Trillion (literally) of capital investment in infrastructure, engineering, and power plants, this stuff is reasonably safe.

Those stories are just beginning…they’re not close to being over.

“… imagine a world where there’s no separation between learning and assessment…” — from aiedusimplified.substack.com by Lance Eaton, Ph.D. and Tawnya Means

An interview with Tawnya Means

So let’s imagine a world where there’s no separation between learning and assessment: it’s ongoing. There’s always assessment, always learning, and they’re tied together. Then we can ask: what is the role of the human in that world? What is it that AI can’t do?

…

Imagine something like that in higher ed. There could be tutoring or skill-based work happening outside of class, and then relationship-based work happening inside of class, whether online, in person, or some hybrid mix.

The aspects of learning that don’t require relational context could be handled by AI, while the human parts remain intact. For example, I teach strategy and strategic management. I teach people how to talk with one another about the operation and function of a business. I can help students learn to be open to new ideas, recognize when someone pushes back out of fear of losing power, or draw from my own experience in leading a business and making future-oriented decisions.

But the technical parts such as the frameworks like SWOT analysis, the mechanics of comparing alternative viewpoints in a boardroom—those could be managed through simulations or reports that receive immediate feedback from AI. The relational aspects, the human mentoring, would still happen with me as their instructor.

Part 2 of their interview is here:

From DSC:

One of my sisters shared this piece with me. She is very concerned about our society’s use of technology — whether it relates to our youth’s use of social media or the relentless pressure to be first in all things AI. As she was a teacher (at the middle school level) for 37 years, I greatly appreciate her viewpoints. She keeps me grounded in some of the negatives of technology. It’s important for us to listen to each other.

Nvidia becomes first $5 trillion company — from theaivalley.com by Barsee

PLUS: OpenAI IPO at $1 trillion valuation by late 2026 / early 2027

Nvidia has officially become the first company in history to cross the $5 trillion market cap, cementing its position as the undisputed leader of the AI era. Just three months ago, the chipmaker hit $4 trillion; it’s already added another trillion since.

Nvidia market cap milestones:

- Jan 2020: $144 billion

- May 2023: $1 trillion

- Feb 2024: $2 trillion

- Jun 2024: $3 trillion

- Jul 2025: $4 trillion

- Oct 2025: $5 trillion

The above posting linked to:

- Nvidia becomes first public company worth $5 trillion — from techcrunch.com by Ivan Mehta

The biggest beneficiary of the ongoing AI boom, Nvidia has become the first public company to pass the $5 trillion market cap milestone.
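The pace implied by the milestone list above can be checked with a little date arithmetic. The following is a minimal Python sketch, not anything from the original postings: the names `MILESTONES`, `months_between`, and `milestone_gaps` are hypothetical, and because the list gives only month and year, each date is pinned to the first of the month as an assumption.

```python
from datetime import date

# Milestones as listed above: (date, market cap in trillions of USD).
# Assumption: the source gives only month and year, so each date uses day 1.
MILESTONES = [
    (date(2020, 1, 1), 0.144),
    (date(2023, 5, 1), 1.0),
    (date(2024, 2, 1), 2.0),
    (date(2024, 6, 1), 3.0),
    (date(2025, 7, 1), 4.0),
    (date(2025, 10, 1), 5.0),
]


def months_between(start: date, end: date) -> int:
    """Whole calendar months from start to end (day of month is ignored)."""
    return (end.year - start.year) * 12 + (end.month - start.month)


def milestone_gaps(milestones):
    """Return (cap_from, cap_to, months) for each consecutive pair of milestones."""
    return [
        (cap_a, cap_b, months_between(d_a, d_b))
        for (d_a, cap_a), (d_b, cap_b) in zip(milestones, milestones[1:])
    ]


if __name__ == "__main__":
    for cap_from, cap_to, months in milestone_gaps(MILESTONES):
        print(f"${cap_from}T -> ${cap_to}T: {months} months")
```

By this count the gaps are 40, 9, 4, 13, and 3 months. The last figure matches the posting's remark that the $4 trillion mark was reached "just three months ago."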

OpenAI’s Atlas: the End of Online Learning—or Just the Beginning? — from drphilippahardman.substack.com by Dr. Philippa Hardman

My take is this: in all of the anxiety lies a crucial and long-overdue opportunity to deliver better learning experiences. Precisely because Atlas perceives the same context in the same moment as you, it can transform learning into a process aligned with core neuro-scientific principles—including active retrieval, guided attention, adaptive feedback and context-dependent memory formation.

Perhaps in Atlas we have a browser that for the first time isn’t just a portal to information, but one which can become a co-participant in active cognitive engagement—enabling iterative practice, reflective thinking, and real-time scaffolding as you move through challenges and ideas online.

With this in mind, I put together 10 use cases for Atlas for you to try for yourself.

…

6. Retrieval Practice

What: Pulling information from memory drives retention better than re-reading.

Why: Practice testing delivers medium-to-large effects (Adesope et al., 2017).

Try: Open a document with your previous notes. Ask Atlas for a mixed activity set: “Quiz me on the Krebs cycle—give me a near-miss, high-stretch MCQ, then a fill-in-the-blank, then ask me to explain it to a teen.”

Atlas uses its browser memory to generate targeted questions from your actual study materials, supporting spaced, varied retrieval.

From DSC:

A quick comment. I appreciate these ideas and approaches from Katarzyna and Rita. I do think that someone is going to want to be sure that the AI models/platforms/tools are given up-to-date information and updated instructions — i.e., any new procedures, steps to take, etc. Perhaps I’m missing the boat here, but an internal AI platform is going to need to have access to up-to-date information and instructions.

Is Your Institution Ready for the Earnings Premium Buzzsaw? — from ailearninsights.substack.com by Alfred Essa

On Wednesday [October 29th, 2025], I’m launching the Beta version of an Education Accountability Website (“EDU Accountability Lab”). It analyzes federal student aid, institutional outcomes, and accountability metrics across 6,000+ colleges and universities in the US.

Our Mission

The EDU Accountability Lab delivers independent, data-driven analysis of higher education with a focus on accountability, affordability, and outcomes. Our audience includes policymakers, researchers, and taxpayers who seek greater transparency and effectiveness in postsecondary education. We take no advocacy position on specific institutions, programs, metrics, or policies. Our goal is to provide clear and well-documented methods that support policy discussions, strengthen institutional accountability, and improve public understanding of the value of higher education.

But right now, there’s one area demanding urgent attention.

Starting July 1, 2026, every degree program at every institution receiving federal student aid must prove its graduates earn more than people without that credential—or lose Title IV eligibility.

This isn’t about institutions passing or failing. It’s about programs. Every Bachelor’s in Psychology. Every Master’s in Education. Every Associate in Nursing. Each one assessed separately. Each one facing the same pass-or-fail tests.

Goldman economists on the Gen Z hiring nightmare: ‘Jobless growth’ is probably the new normal — from fortune.com by Nick Lichtenberg (this article is behind a paywall)

The challenging U.S. labor market is entering a new normal, according to Goldman Sachs economists David Mericle and Pierfrancesco Mei, who tackled the phenomenon of “jobless growth” in an Oct. 13 note. It resonates with what Federal Reserve Chair Jerome Powell memorably described in September as a “low-hire, low-fire” labor market, in which, for some reason, “kids coming out of college and younger people, minorities, are having a hard time finding jobs.”

Some analysts blame the downturn in entry-level hiring on the impact of AI on the economy, others on macroeconomic uncertainty, especially the seesawing tariffs regime from the Trump administration. The takeaway is clear, though, that getting hired is really hard in the mid-2020s.

This shift is clear in data collated by the investment bank. Payroll growth by industry shows almost all sectors outside health care posting weak, zero, or even negative net job creation, despite otherwise solid macroeconomic indicators. Meanwhile, the share of executives who mention both AI and employment in the same context on earnings calls has reached historic highs.

…

For now, Mericle’s “low-hire, low-fire” diagnosis serves as both warning and guide: Jobless growth may not mean mass layoffs, but it does mean fewer opportunities for job seekers and slower rebounds from economic shocks in the years to come.

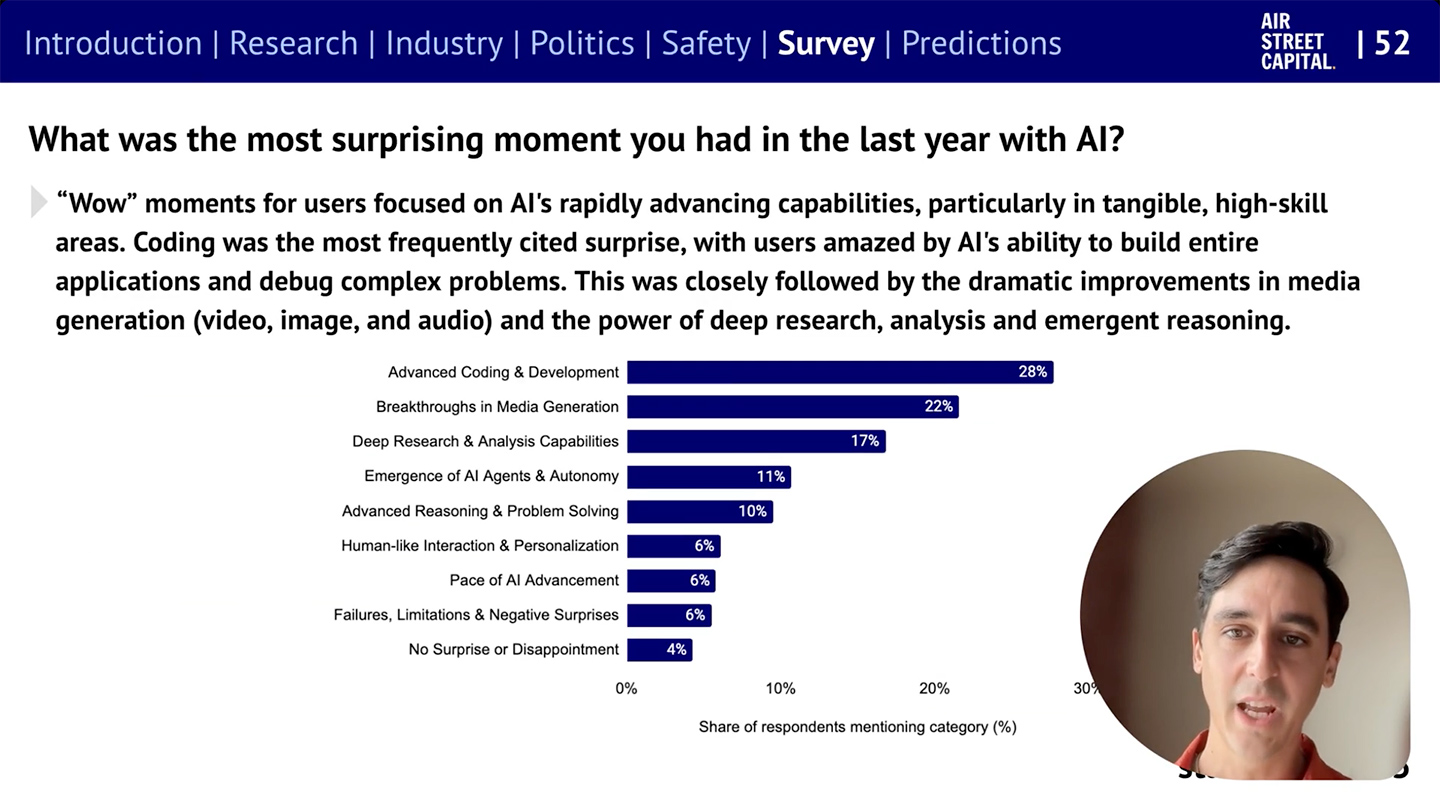

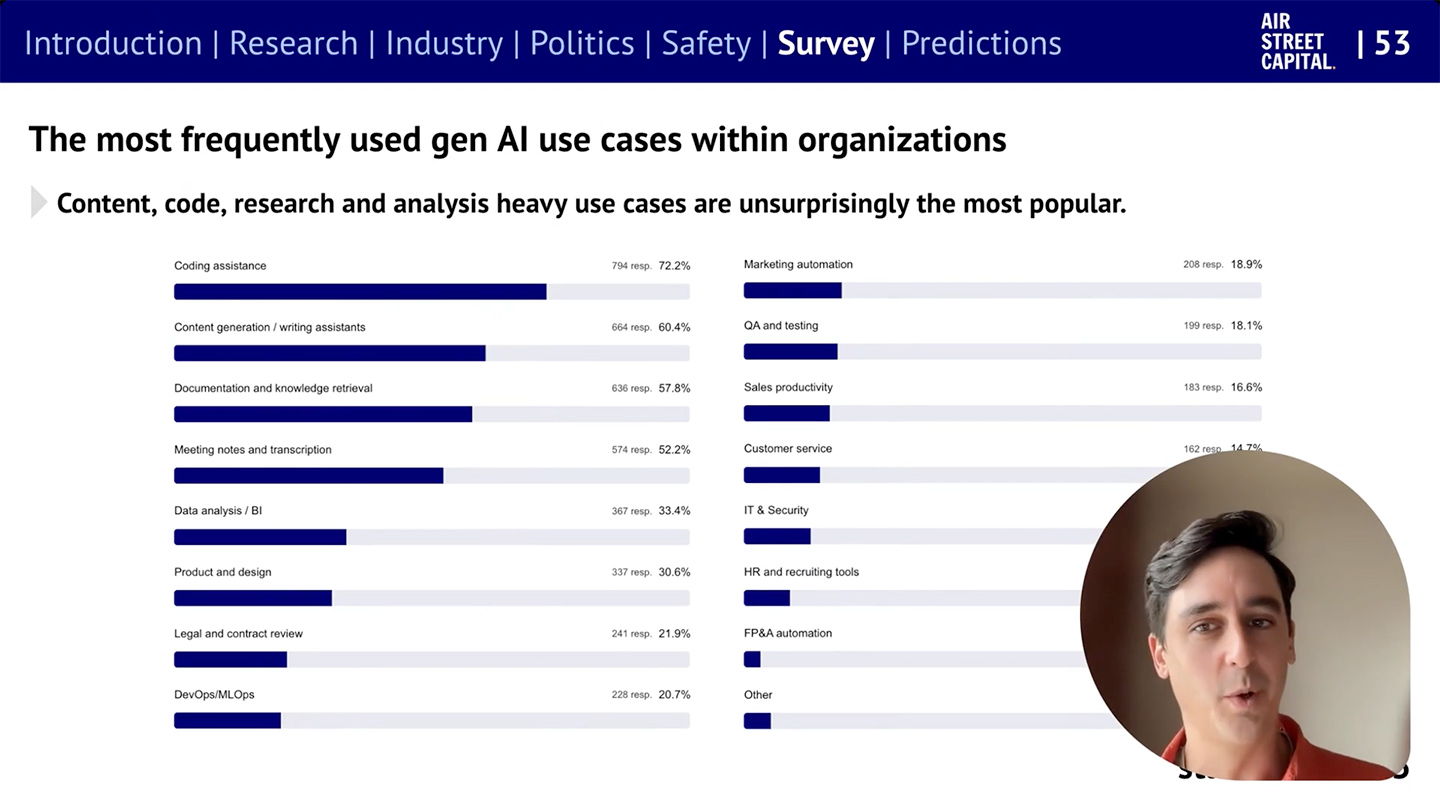

The State of AI Report 2025 — from nathanbenaich.substack.com by Nathan Benaich

In short, it’s been a monumental 12 months for AI. Our eighth annual report is the most comprehensive it’s ever been, covering what you need to know about research, industry, politics, and safety – along with our first State of AI Usage Survey of 1,200 practitioners.

From 70/20/10 to 90/10 — from drphilippahardman.substack.com by Dr Philippa Hardman

A new L&D operating system for the AI Era?

This week I want to share a hypothesis I’m increasingly convinced of: that we are entering an age of the 90/10 model of L&D.

90/10 is a model where roughly 90% of “training” is delivered by AI coaches as daily performance support, and 10% of training is dedicated to developing complex and critical skills via high-touch, human-led learning experiences.

Proponents of 90/10 argue that the model isn’t about learning less, but about learning smarter by defining all jobs to be done as one of the following:

- Delegate (the dead skills): Tasks that can be offloaded to AI.

- Co-Create (the 90%): Tasks which well-defined AI agents can augment and help humans to perform optimally.

- Facilitate (the 10%): Tasks which require high-touch, human-led learning to develop.

So if AI at work is now both real and material, the natural question for L&D is: how do we design for it? The short answer is to stop treating learning as an event and start treating it as a system.

My daughter’s generation expects to learn with AI, not pretend it doesn’t exist, because they know employers expect AI fluency and because AI will be ever-present in their adult lives.

— Jenny Maxell

The above quote was taken from this posting.

Unlocking Young Minds: How Gamified AI Learning Tools Inspire Fun, Personalized, and Powerful Education for Children in 2025 — from techgenyz.com by Sreyashi Bhattacharya

Table of Contents

- Highlight

- Gamified AI Learning Tools: The Future of Fun and Personalized Education

- Key Features of Gamified AI Learning Tools:

- Advantages of Gamified AI in Education

- Challenges and Concerns with Gamified AI Tools

- Emerging Trends in AI + Gamification for Learning

- Real-World Case Study: AI Games for Learning Math

- Conclusion: The Future of Gamified AI in Education

Highlight

- Gamified AI Learning Tools personalize education by adapting the difficulty and content to each child’s pace, fostering confidence and mastery.

- Engaging & Fun: Gamified elements like quests, badges, and stories keep children motivated and enthusiastic.

- Safe & Inclusive: Attention to equity, privacy, and cultural context ensures responsible and accessible learning.

How to test GenAI’s impact on learning — from timeshighereducation.com by Thibault Schrepel

Rather than speculate on GenAI’s promise or peril, Thibault Schrepel suggests simple teaching experiments to uncover its actual effects

Generative AI in higher education is a source of both fear and hype. Some predict the end of memory, others a revolution in personalised learning. My two-year classroom experiment points to a more modest reality: Artificial intelligence (AI) changes some skills, leaves others untouched and forces us to rethink the balance.

This indicates that the way forward is to test, not speculate. My results may not match yours, and that is precisely the point. Here are simple activities any teacher can use to see what AI really does in their own classroom.

4. Turn AI into a Socratic partner

Instead of being the sole interrogator, let AI play the role of tutor, client or judge. Have students use AI to question them, simulate cross-examination or push back on weak arguments. New “study modes” now built into several foundation models make this kind of tutoring easy to set up. Professors with more technical skills can go further: they can design their own GPTs or fine-tuned models trained on course content and let students interact directly with them. The point is the practice it creates. Students learn that questioning a machine is part of learning to think like a professional.

Assessment tasks that support human skills — from timeshighereducation.com by Amir Ghapanchi and Afrooz Purarjomandlangrudi

Assignments that focus on exploration, analysis and authenticity offer a road map for university assessment that incorporates AI while retaining its rigour and human elements

Rethinking traditional formats

1. From essay to exploration

When ChatGPT can generate competent academic essays in seconds, the traditional format’s dominance looks less secure as an assessment task. The future lies in moving from essays as knowledge reproduction to assessments that emphasise exploration and curation. Instead of asking students to write about a topic, challenge them to use artificial intelligence to explore multiple perspectives, compare outputs and critically evaluate what emerges.

Example: A management student asks an AI tool to generate several risk plans, then critiques the AI’s assumptions and identifies missing risks.

What your students are thinking about artificial intelligence — from timeshighereducation.com by Florencia Moore and Agostina Arbia

GenAI has been quickly adopted by students, but the consequences of using it as a shortcut could be grave. A study into how students think about and use GenAI offers insights into how teaching might adapt

However, when asked how AI negatively impacts their academic development, 29 per cent noted a “weakening or deterioration of intellectual abilities due to AI overuse”. The main concern cited was the loss of “mental exercise” and soft skills such as writing, creativity and reasoning.

The boundary between the human and the artificial does not seem so easy to draw, but as the poet Antonio Machado once said: “Traveller, there is no path; the path is made by walking.”

Jelly Beans for Grapes: How AI Can Erode Students’ Creativity — from edsurge.com by Thomas David Moore

There is nothing new about students trying to get one over on their teachers — there are probably cuneiform tablets about it — but when students use AI to generate what Shannon Vallor, philosopher of technology at the University of Edinburgh, calls a “truth-shaped word collage,” they are not only gaslighting the people trying to teach them, they are gaslighting themselves. In the words of Tulane professor Stan Oklobdzija, asking a computer to write an essay for you is the equivalent of “going to the gym and having robots lift the weights for you.”

Deloitte will make Claude available to 470,000 people across its global network — from anthropic.com

As part of the collaboration, Deloitte will establish a Claude Center of Excellence with trained specialists who will develop implementation frameworks, share leading practices across deployments, and provide ongoing technical support to create the systems needed to move AI pilots to production at scale. The collaboration represents Anthropic’s largest enterprise AI deployment to date, available to more than 470,000 Deloitte people.

Deloitte and Anthropic are co-creating a formal certification program to train and certify 15,000 of its professionals on Claude. These practitioners will help support Claude implementations across Deloitte’s network and Deloitte’s internal AI transformation efforts.

How AI Agents are finally delivering on the promise of Everboarding: driving retention when it counts most — from premierconstructionnews.com

Everboarding flips this model. Rather than ending after orientation, everboarding provides ongoing, role-specific training and support throughout the employee journey. It adapts to evolving responsibilities, reinforces standards, and helps workers grow into new roles. For high-turnover, high-pressure environments like retail, it’s a practical solution to a persistent challenge.

AI agents will be instrumental in the success of everboarding initiatives; they can provide a much more tailored training and development process for each individual employee, keeping track of which training modules may need to be completed, or where staff members need or want to develop further. This personalisation helps staff to feel not only more satisfied with their current role, but also guides them on the right path to progress in their individual careers.

Digital frontline apps are also ideal for everboarding. They offer bite-sized training that staff can complete anytime, whether during quiet moments on shift or in real time on the job, all accessible from their mobile devices.

TeachLM: insights from a new LLM fine-tuned for teaching & learning — from drphilippahardman.substack.com by Dr Philippa Hardman

Six key takeaways, including what the research tells us about how well AI performs as an instructional designer

As I and many others have pointed out in recent months, LLMs are great assistants but very ineffective teachers. Despite the rise of “educational LLMs” with specialised modes (e.g. Anthropic’s Learning Mode, OpenAI’s Study Mode, Google’s Guided Learning) AI typically eliminates the productive struggle, open exploration and natural dialogue that are fundamental to learning.

This week, Polygence, in collaboration with Stanford University researcher Prof Dora Demszky, published first-of-its-kind research on a new model, TeachLM, built to address this gap.

In this week’s blog post, I dive deep into what the research found and share the six key findings, including reflections on how well TeachLM performs on instructional design.

The Dangers of using AI to Grade — from marcwatkins.substack.com by Marc Watkins

Nobody Learns, Nobody Gains

AI as an assessment tool represents an existential threat to education because no matter how you try and establish guardrails or best practices around how it is employed, using the technology in place of an educator ultimately cedes human judgment to a machine-based process. It also devalues the entire enterprise of education and creates a situation where the only way universities can add value to education is by further eliminating costly human labor.

For me, the purpose of higher education is about human development, critical thinking, and the transformative experience of having your ideas taken seriously by another human being. That’s not something we should be in a rush to outsource to a machine.