AI Meets Med School — from insidehighered.com by Lauren Coffey

Adding to academia’s AI embrace, two institutions in the University of Texas system are jointly offering a medical degree paired with a master’s in artificial intelligence.

The University of Texas at San Antonio has launched a dual-degree program combining medical school with a master’s in artificial intelligence.

Several universities across the nation have begun integrating AI into medical practice. Medical schools at the University of Florida, the University of Illinois, the University of Alabama at Birmingham and Stanford and Harvard Universities all offer variations of a certificate in AI in medicine that is largely geared toward existing professionals.

“I think schools are looking at, ‘How do we integrate and teach the uses of AI?’” Dr. Whelan said. “And in general, when there is an innovation, you want to integrate it into the curriculum at the right pace.”

Speaking of emerging technologies and med school, also see:

Though not necessarily edu-related, this was interesting to me and hopefully will be to some profs and/or students out there:

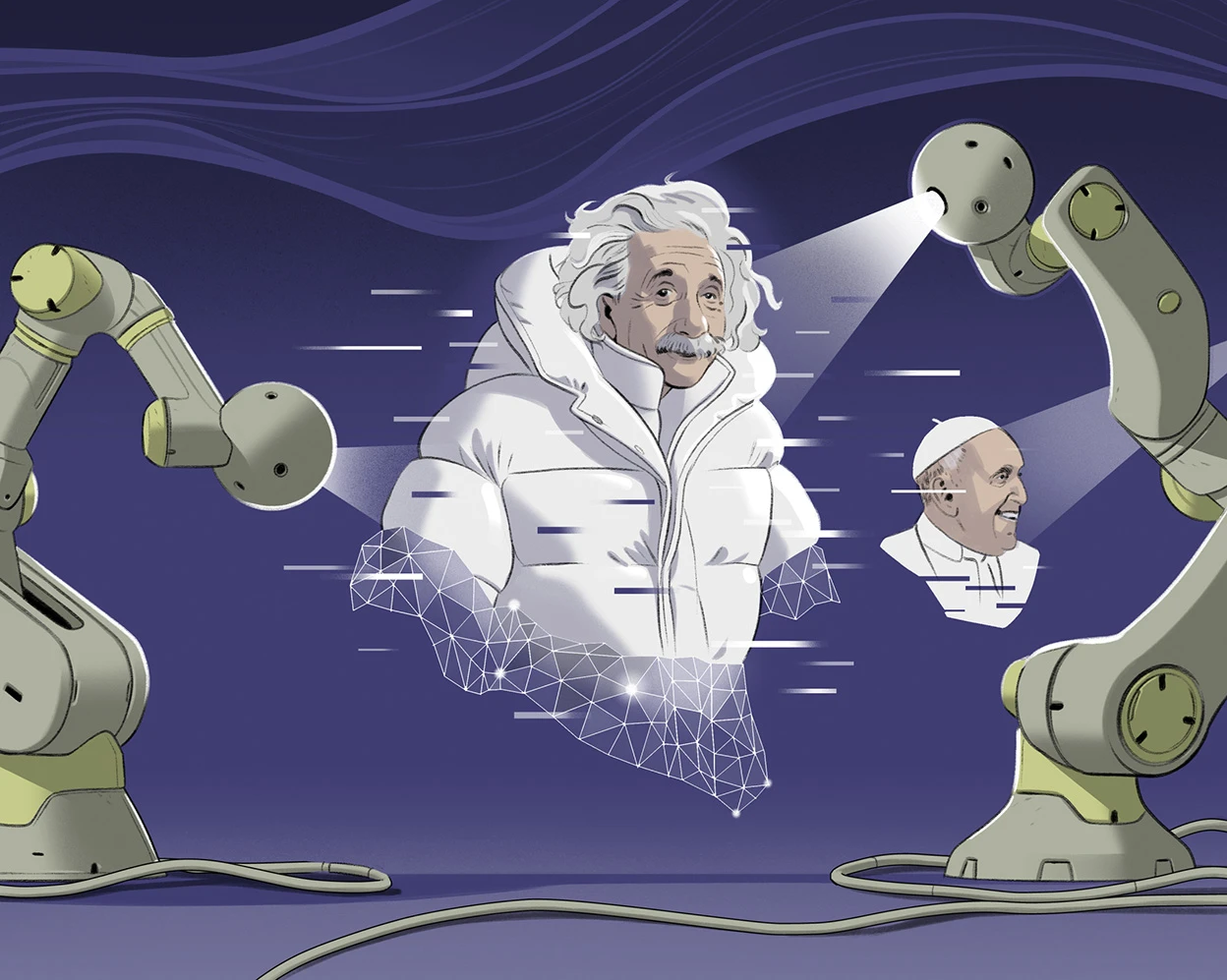

How to stop AI deepfakes from sinking society — and science — from nature.com by Nicola Jones; via The Neuron

Deceptive videos and images created using generative AI could sway elections, crash stock markets and ruin reputations. Researchers are developing methods to limit their harm.

Exploring the Impact of AI in Education with PowerSchool’s CEO & Chief Product Officer — from michaelbhorn.substack.com by Michael B. Horn

With just under 10 acquisitions in the last 5 years, PowerSchool has been active in transforming itself from a student information systems company to an integrated education company that works across the day and lifecycle of K–12 students and educators. What’s more, the company turned heads in June with its announcement that it was partnering with Microsoft to integrate AI into its PowerSchool Performance Matters and PowerSchool LearningNav products to empower educators in delivering transformative personalized-learning pathways for students.

AI Learning Design Workshop: The Trickiness of AI Bootcamps and the Digital Divide — from eliterate.us by Michael Feldstein

As readers of this series know, I’ve developed a six-session design/build workshop series for learning design teams to create an AI Learning Design Assistant (ALDA). In my last post in this series, I provided an elaborate ChatGPT prompt that can be used as a rapid prototype that everyone can try out and experiment with. In this post, I’d like to focus on how to address the challenges of AI literacy effectively and equitably.

Global AI Legislation Tracker — from iapp.org; via Tom Barrett

Countries worldwide are designing and implementing AI governance legislation commensurate to the velocity and variety of proliferating AI-powered technologies. Legislative efforts include the development of comprehensive legislation, focused legislation for specific use cases, and voluntary guidelines and standards.

This tracker identifies legislative policy and related developments in a subset of jurisdictions. It is not globally comprehensive, nor does it include all AI initiatives within each jurisdiction, given the rapid and widespread policymaking in this space. This tracker offers brief commentary on the wider AI context in specific jurisdictions, and lists index rankings provided by Tortoise Media, the first index to benchmark nations on their levels of investment, innovation and implementation of AI.

Diving Deep into AI: Navigating the L&D Landscape — from learningguild.com by Markus Bernhardt

The prospect of AI-powered, tailored, on-demand learning and performance support is exhilarating: It starts with traditional digital learning made into fully adaptive learning experiences, which would adjust to strengths and weaknesses for each individual learner. The possibilities extend all the way through to simulations and augmented reality, an environment to put into practice knowledge and skills, whether as individuals or working in a team simulation. The possibilities are immense.

Learning Lab | ChatGPT in Higher Education: Exploring Use Cases and Designing Prompts — from events.educause.edu; via Robert Gibson on LinkedIn

Part 1: October 16 | 3:00–4:30 p.m. ET

Part 2: October 19 | 3:00–4:30 p.m. ET

Part 3: October 26 | 3:00–4:30 p.m. ET

Part 4: October 30 | 3:00–4:30 p.m. ET

Mapping AI’s Role in Education: Pioneering the Path to the Future — from marketscale.com by Michael B. Horn, Jacob Klein, and Laurence Holt

Welcome to The Future of Education with Michael B. Horn. In this insightful episode, Michael gains perspective on mapping AI’s role in education from Jacob Klein, a Product Consultant at Oko Labs, and Laurence Holt, an Entrepreneur In Residence at the XQ Institute. Together, they peer into the burgeoning world of AI in education, analyzing its potential, risks, and roadmap for integrating it seamlessly into learning environments.

Ten Wild Ways People Are Using ChatGPT’s New Vision Feature — from newsweek.com by Meghan Roos; via Superhuman

Below are 10 creative ways ChatGPT users are making use of this new vision feature.